Rolling aggregations

This guide provides a comprehensive overview of how to use the update_by table operation for rolling calculations and aggregations. This operation creates a new table containing columns with aggregated calculations (called UpdateByOperations) from a source table. The calculations can be cumulative, windowed by rows (ticks), or windowed by time. They can also be performed on a per-group basis, where groups are defined by one or more key columns.

For a complete list of all available UpdateByOperations that can be performed with update_by, see the reference page.

The use of update_by requires one or more of these calculations, as well as zero or more key columns to define groups. The resultant table contains all columns from the source table, as well as new columns if the output of the UpdateByOperation renames them. If no key columns are given, then the calculations are applied to all rows in the specified columns. If one or more key columns are given, the calculations are applied to each unique group in the key column(s).

What are rolling aggregations?

Rolling aggregations are unique from dedicated and combined aggregations for two reasons.

Preservation of data history

When performing aggregations with dedicated and combined aggregations, the output table contains a single row for each group. In contrast, rolling aggregation tables have the same number of rows as the source table. Thus, they preserve data history, whereas the others do not.

To illustrate this, consider a cumulative sum on a single grouping column with two key values. Notice how result_dedicated and result_combined have two rows - one for each group. In contrast, result_rolling has 20 rows - one for each row in the source table. You can see how the cumulative sum increases over time in the latter case.

Windowing

Dedicated and combined aggregations are all cumulative. They calculate aggregated values over an entire table. In contrast, rolling aggregations can be either cumulative or windowed.

A windowed aggregation is one that only calculates aggregated values over a subset of the table. This subset is defined by a window, which can be specified in terms of rows (ticks) or time. For example, a windowed sum may calculate the aggregated sum over the previous 10 rows in the table. When a new row ticks in, the aggregated value is updated to reflect the new row and the oldest row that is no longer in the window.

To illustrate this, consider the following example, which calculates a cumulative sum and a rolling sum with update_by. The rolling sum is applied over the previous 3 rows, so the SumValue column differs from its cumulative counterpart:

Basic usage examples

The following examples demonstrate the fundamental patterns for using update_by.

A single UpdateByOperation with no grouping columns

The following example calculates the tick-based rolling sum of the X column in the source table. No key columns are provided, so a single group exists that contains all rows of the table.

Multiple UpdateByOperations with no grouping columns

The following example builds on the previous by performing two UpdateByOperations in a single update_by. The cumulative minimum and maximum are calculated, and the range is derived from them.

Multiple UpdateByOperations with a single grouping column

The following example builds on the previous by specifying a grouping column. The grouping column is Letter, which contains alternating letters A and B. As a result, the cumulative minimum, maximum, and range are calculated on a per-letter basis. The result table is split by letter via where to show this.

A single UpdateByOperation applied to multiple columns with multiple grouping columns

The following example builds on the previous by applying a single UpdateByOperation to multiple columns as well as specifying multiple grouping columns. The grouping columns, Letter and Truth, contain alternating letters and random true/false values. Thus, groups are defined by unique combinations of letter and boolean. The result table is split by letter and truth value to show the unique groups.

Applying an UpdateByOperation to all columns

The following example uses forward_fill to fill null values with the most recent previous non-null value. No columns are given to forward_fill, so the forward-fill is applied to all columns in the source table except for the specified key column(s). This means the original columns (e.g., X and Y) are replaced in the result table by new columns of the same name containing the forward-filled values.

Cumulative aggregations

Cumulative aggregations are the simplest operations in the updateby Python module. They are statistics computed over all previous data points in a series. The following cumulative statistics are supported:

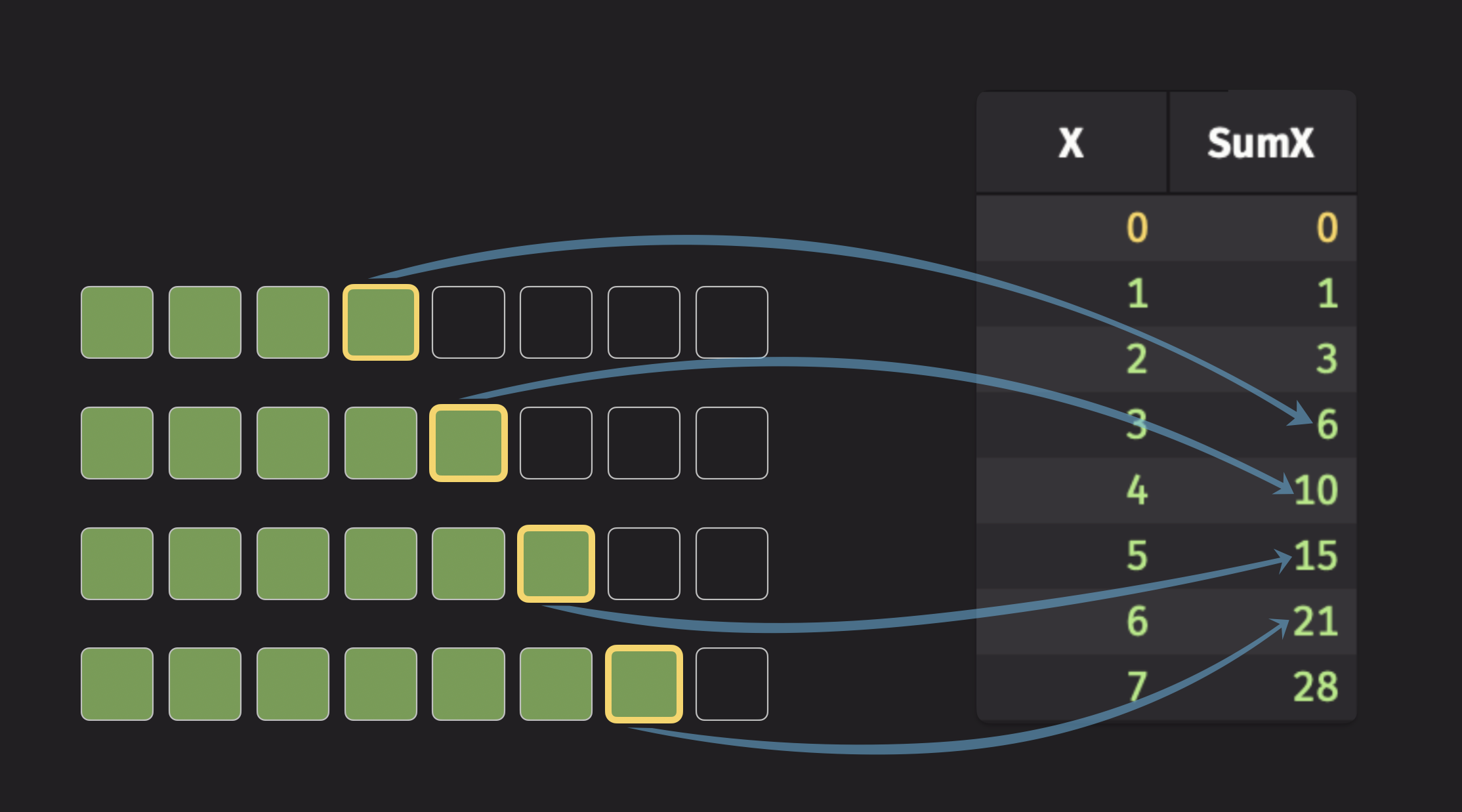

To illustrate a cumulative statistic, consider the cumulative sum. For each row, this operation calculates the sum of all previous values in a column, including the current row's value. The following illustration shows this:

The code for the illustration above looks like this:

Note

The syntax SumX=X indicates that the resultant column from the operation is named SumX.

Windowed aggregations

Windowed aggregations are similar to cumulative aggregations but operate on a finite-sized window of data. This means they only consider a limited number of rows for their calculations. The window size can be defined either by a number of rows (ticks) or by a duration of time.

Tick-based vs time-based windows

Tick-based windows aggregate values over a specified number of rows with fixed window sizes defined by rev_ticks and fwd_ticks parameters:

rev_ticks: The number of previous rows to include in the window. A value ofNincludes the current row and theN-1preceding rows.fwd_ticks: The number of subsequent rows to include in the window.- A negative value for

rev_ticksorfwd_ticksexcludes rows in that direction. For example,fwd_ticks=-1ensures the window does not include any future rows. - Example:

rev_ticks=3, fwd_ticks=0creates a 3-row lookback window (the current row and the two previous rows).

Time-based windows aggregate values over time durations with variable window sizes defined by rev_time and fwd_time parameters:

rev_time: Duration to look back in time (as ISO duration string like"PT5s"or nanoseconds).fwd_time: Duration to look forward in time.- Negative durations exclude time in that direction.

- Require a timestamp column specified by

ts_colparameter. - Example:

rev_time="PT1m", fwd_time="PT0s"creates a 1-minute lookback window.

Tick-based windowed calculations

The following example:

- Creates a static source table with two columns.

- Calculates the rolling sum of

Xgrouped byLetter.- Three rolling sums are calculated using windows that are before, containing, and after the current row.

- Splits the

resulttable by letter viawhereto show how the windowed calculations are performed on a per-group basis.

Time-based windowed calculations

Time-based operations require a timestamp column and use rev_time and fwd_time parameters (as nanoseconds or ISO duration strings like PT10s).

The following example:

- Creates a static source table with three columns.

- Calculates the rolling sum of

Xgrouped byLetter.- Three rolling sums are calculated using windows that are before, containing, and after the current row's timestamp.

- Splits the

resulttable by letter viawhereto show how the windowed calculations are performed on a per-group basis.

Note

In tick-based operations, windows are calculated per-group (each group maintains its own window of rows). In time-based operations, windows are defined by timestamps across the entire table regardless of grouping.

Simple moving (rolling) aggregations

Simple moving (or rolling) aggregations are statistics computed over a finite, moving window of data. These operations weigh each data point in the window equally, regardless of its distance from the current row. The following simple moving statistics are supported:

| Simple moving statistic | Tick-based | Time-based |

|---|---|---|

| Count | rolling_count_tick | rolling_count_time |

| Minimum | rolling_min_tick | rolling_min_time |

| Maximum | rolling_max_tick | rolling_max_time |

| Sum | rolling_sum_tick | rolling_sum_time |

| Product | rolling_prod_tick | rolling_prod_time |

| Average | rolling_avg_tick | rolling_avg_time |

| Weighted Average | rolling_wavg_tick | rolling_wavg_time |

| Standard Deviation | rolling_std_tick | rolling_std_time |

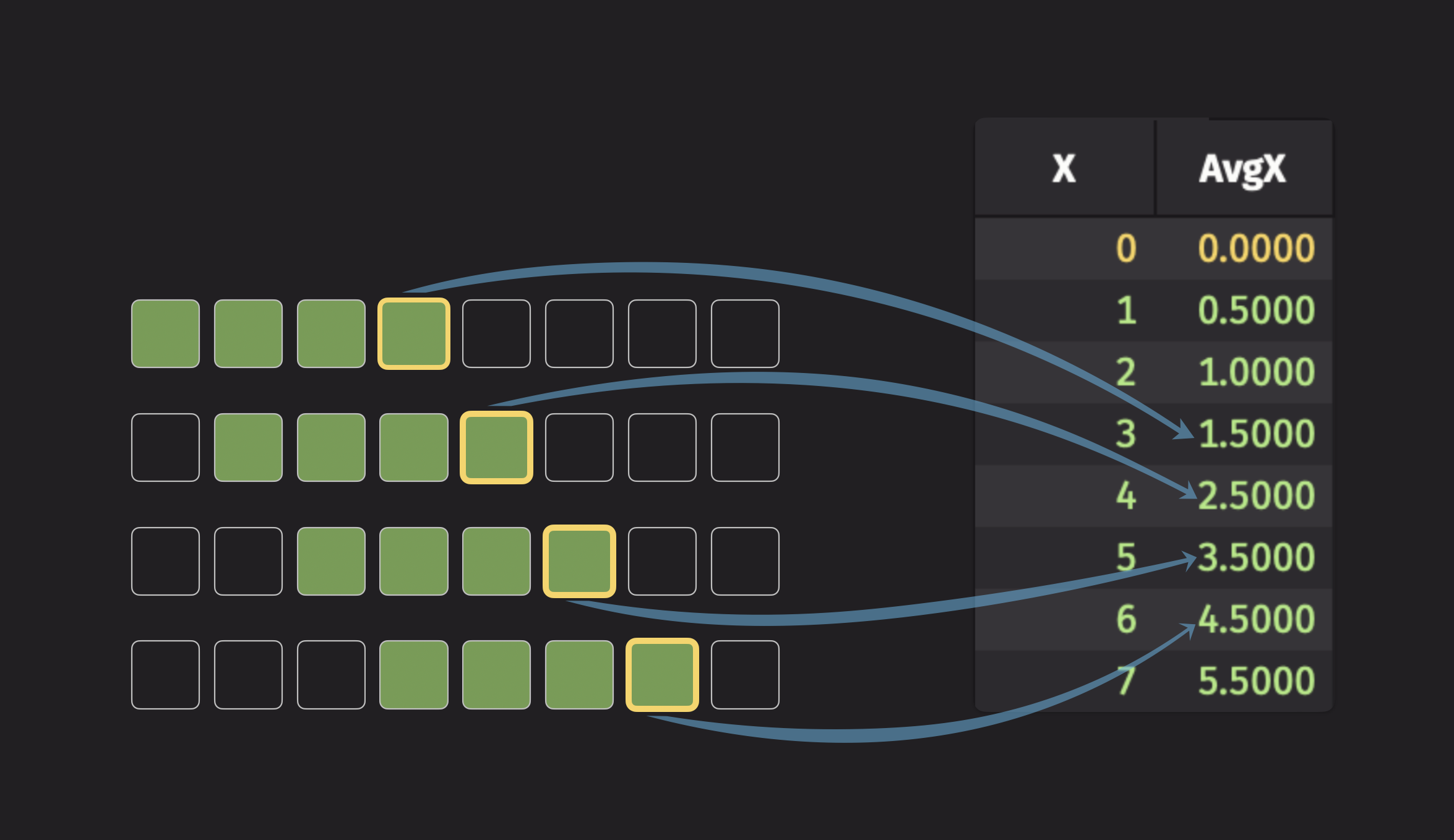

To illustrate a simple moving aggregation, consider the rolling average. For each row, this operation calculates the average of all values within a specified window. The following illustration shows this:

The code for the illustration above looks like this:

The following example demonstrates a series of different tick-based windows that look backwards, forwards, and both. Each example calculates the rolling sum of the Value column:

The above example can be modified to use time-based windows instead of tick-based windows:

Exponential moving aggregations

Exponential moving statistics are another form of moving aggregations. Unlike simple moving aggregations that use a fixed window, these statistics use all preceding data points. However, they assign more weight to recent data and exponentially down-weight older data. This means distant observations have little effect on the result, while closer observations have a greater influence. The rate at which the weight decreases is controlled by a decay parameter. The following exponential moving statistics are supported:

| Exponential moving statistic | Tick-based | Time-based |

|---|---|---|

| Minimum (EMMin) | emmin_tick | emmin_time |

| Maximum (EMMax) | emmax_tick | emmax_time |

| Sum (EMS) | ems_tick | ems_time |

| Average (EMA) | ema_tick | ema_time |

| Standard Deviation (EMStd) | emstd_tick | emstd_time |

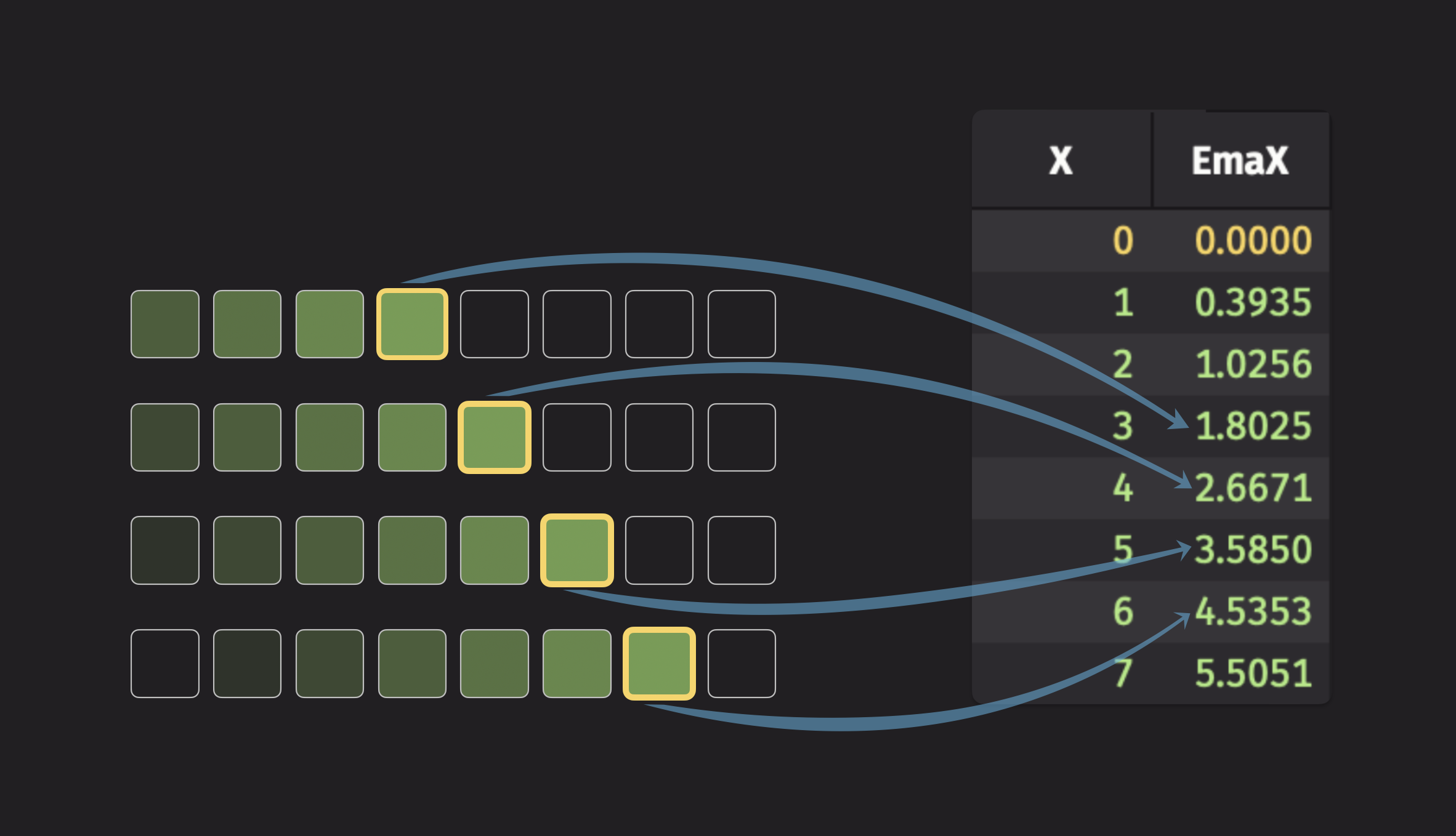

To visualize an exponential moving statistic, consider the exponential moving average (EMA). The following illustration shows this:

The code for the illustration above looks like this:

Note

The syntax EmaX=X indicates that the resultant column from the operation is named EmaX.

The following example demonstrates the effect that different decay rates have on the exponential moving average:

Understanding decay parameters

Tick-based decay (decay_ticks):

- Smaller values = faster decay, more weight on recent data.

decay_ticks=1: Each previous row has ~37% the weight of the current row.decay_ticks=10: Each previous row has ~90% the weight of the current row.- Higher values create smoother, slower-responding averages.

Time-based decay (decay_time):

- Controls how quickly older data loses influence over time.

decay_time="PT1s": Data from 1 second ago has ~37% weight.decay_time="PT10s": Data from 10 seconds ago has ~37% weight.- Longer decay times create more stable, less responsive averages.

The same example can be modified to use time-based windows instead of tick-based windows:

In this time-based example:

decay_time="PT2s": Creates a fast-responding average where data loses ~63% influence after 2 seconds.decay_time="PT4s": Medium responsiveness, data loses ~63% influence after 4 seconds.decay_time="PT6s": Slower response, data loses ~63% influence after 6 seconds.

Additional rolling operations

Deephaven offers two additional rolling operations that are not simple statistics, but rather for grouping or custom formulas:

| Simple moving operation | Tick-based | Time-based |

|---|---|---|

| Grouping | rolling_group_tick | rolling_group_time |

| Custom formula | rolling_formula_tick | rolling_formula_time |

The grouping operations collect values within the rolling window into an array. The custom formula operations allow you to define a custom formula that is applied to the values in the window.

Rolling group operations

The following example demonstrates the grouping operation. It creates a rolling group of the last 4 rows for each group defined by the ID column:

The same example can be modified to use time-based windows instead of tick-based windows:

Note

It is always more performant to use a rolling aggregation than to perform a rolling group and then apply calculations.

Rolling formula operations

The following example demonstrates the custom formula operation. It computes the rolling geometric mean of the X column, grouped by the ID column:

The same can be done with a time-based window:

Handling erroneous data

It's common for tables to contain null, NaN, or other erroneous values. Different update_by operations handle these values in various ways:

Operations with explicit error handling controls

Certain operations can be configured to handle erroneous data through control parameters:

ema_tickandema_time: Useop_controlparameter with OperationControl.delta: Usedelta_controlparameter with DeltaControl.

Default null handling behavior

Most update_by operations have consistent default behavior for null values:

- Cumulative operations (

cum_sum,cum_max, etc.): Skip null values and continue with the last valid result. - Rolling operations (

rolling_avg_tick,rolling_sum_time, etc.): Exclude null values from window calculations. - Exponential operations (

ema_tick,emmax_time, etc.): Skip null values unless configured otherwise viaop_control.

Specialized null handling operations

forward_fill: Specifically designed to replace null values with the most recent non-null value.delta: Configurable null handling throughDeltaControl(see Sequential difference section).

For detailed configuration options, see the OperationControl reference guide.

Additional operations

update_by also supports several other operations that are not strictly rolling aggregations. This section provides a brief overview of these operations with examples.

Sequential difference

The delta operation calculates the difference between a row's value and the previous row's value in a given column. This is useful for calculating the rate of change. The following example demonstrates this:

Sequential differences can be calculated on a per-group basis like all other update_by operations. The following example demonstrates this:

Detrend time-series data

Sequential differencing is a common first measure for detrending time-series data. The delta operation makes this easy:

Null handling

The delta function takes an optional delta_control argument to determine how null values are treated. You must supply a DeltaControl instance to use this argument. The following behaviors are available:

DeltaControl.NULL_DOMINATES: A valid value following a null value returns null.DeltaControl.VALUE_DOMINATES: A valid value following a null value returns the valid value.DeltaControl.ZERO_DOMINATES: A valid value following a null value returns zero.

Forward fill

The forward_fill operation fills in null values with the most recent non-null value. This is useful for filling in missing data points in a time series. The following example demonstrates this:

Choose the right aggregation

Choosing the right aggregation method depends on your specific use case and data requirements. Consider these key questions:

agg_by vs update_by

agg_by and update_by share some distinct similarities and differences. Understanding how the two relate to each other is important for understanding when to use each of them.

agg_by is functionally equivalent to sum_by, avg_by, min_by, etc. When you see sum_by in examples below, know that you could replace it with agg_by(agg.sum_(cols), by=groups) to achieve the same result. Both approaches perform the same aggregation operations.

Similarities

Both operations perform aggregations on a table. For instance, you can calculate a cumulative sum with both. Additionally, both operations also support performing aggregations over groups of rows, such as calculating the average price of a given stock, or the sum of sales for a given product.

Differences

There are two main differences between the two operations:

agg_by output

The output table of a single aggregation or multiple aggregation only contains one row for each group. To illustrate this, consider the following example, which calculates the aggregated sum of the Value column for each ID in the source table:

If the input table is ticking, then the value in each row of the output table changes when the input table ticks.

update_by output

update_by produces an output table that contains the same number of rows as the input table. Each row in the output table contains the aggregated value up to that point for the corresponding group. This means that update_by keeps data history while performing the aggregation. To illustrate this, the previous example is modified to use update_by instead of sum_by:

Note how the result table has the same number of rows as the source table. You can look back at previous values to see what the aggregated value was at any point in the table's history.

Use agg_by (or sum_by, avg_by, etc.) when you want a single aggregated result per group. The output contains one row for each group with the final aggregated value.

Use update_by when you need to see the progression of aggregated values over time. The output preserves all rows from the source table, showing how the aggregation evolves.

Do you need to preserve data history?

- Use

agg_by(orsum_by,avg_by, etc.) when you want a single aggregated result per group. The output contains one row for each group with the final aggregated value. - Use

update_bywhen you need to see the progression of aggregated values over time. The output preserves all rows from the source table, showing how the aggregation evolves.

Capturing data history with traditional aggregations

While update_by naturally preserves data history, it's also possible to capture some data history with traditional single aggregations and multiple aggregations. However, this requires a key column that places rows into buckets, such as intervals of time.

For example, you can create time-based buckets to see how aggregations change over different time periods:

In this example, the traditional aggregation groups data into buckets (using TimeGroup to simulate time intervals), showing how aggregated values change across different groups while providing partial history.

For more information on splitting temporal data into buckets of time, see Downsampling.

What type of aggregation window do you need?

- Cumulative aggregations (

cum_sum,cum_max, etc.): Use when you want to aggregate all data from the beginning up to the current row. - Windowed aggregations: Use when you only want to consider a subset of recent data:

- Tick-based windows (

rolling_sum_tick, etc.): Use when your window should be defined by a fixed number of rows. - Time-based windows (

rolling_sum_time, etc.): Use when your window should be defined by a time duration and you have a timestamp column.

- Tick-based windows (

How should recent vs. distant data be weighted?

- Simple moving aggregations (

rolling_avg_tick,rolling_sum_time, etc.): Use when all data points in the window should be weighted equally. - Exponential moving aggregations (

ema_tick,emmax_time, etc.): Use when recent data should have more influence than older data, with exponential decay over time.

Do you need custom calculations?

- Standard operations: Use built-in functions like

rolling_sum_tick,rolling_avg_time,cum_max, etc. for common statistical calculations. - Custom formulas: Use

rolling_formula_tickorrolling_formula_timewhen you need to apply custom mathematical expressions to windowed data. - Grouping operations: Use

rolling_group_tickorrolling_group_timewhen you need to collect data into arrays for further processing.

Do you need to handle missing or erroneous data?

- Forward fill: Use

forward_fillto replace null values with the most recent non-null value. - Sequential differences: Use

deltato calculate row-to-row changes, useful for detrending time-series data. - Error handling: Some operations like

ema_tickandema_timesupportop_controlparameters for handling null, NaN, or other erroneous values.

Performance considerations

- Rolling aggregations are more performant than rolling groups followed by calculations.

- Tick-based operations maintain separate windows per group, while time-based operations use timestamps across the entire table.

- Exponential moving aggregations use all historical data but weight recent observations more heavily.