consumeToTable

consumeToTable reads a Kafka stream into an in-memory table.

Syntax
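Going by the parameter table on this page, a call takes seven positional arguments. The block below is a sketch of the call shape, not a verbatim signature: the argument names are placeholders for values you supply, and the `io.deephaven.kafka` import path is an assumption about where `KafkaTools` lives in your Deephaven version.

```groovy
import io.deephaven.kafka.KafkaTools

// Placeholders only: each name stands for a value of the type noted beside it.
result = KafkaTools.consumeToTable(
    kafkaProperties,            // Properties
    topic,                      // String
    partitionFilter,            // IntPredicate
    partitionToInitialOffset,   // IntToLongFunction
    keySpec,                    // KafkaTools.Consume.KeyOrValueSpec
    valueSpec,                  // KafkaTools.Consume.KeyOrValueSpec
    tableType                   // KafkaTools.TableType
)
```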

Parameters

| Parameter | Type | Description |
|---|---|---|
| kafkaProperties | Properties | Properties to configure the result and also to be passed to create the |
| topic | String | The Kafka topic name. |
| partitionFilter | IntPredicate | A predicate returning true for the partitions to consume. The convenience constant |
| partitionToInitialOffset | IntToLongFunction | A function specifying the desired initial offset for each partition consumed. |
| keySpec | KafkaTools.Consume.KeyOrValueSpec | Conversion specification for Kafka record keys. |
| valueSpec | KafkaTools.Consume.KeyOrValueSpec | Conversion specification for Kafka record values. |
| tableType | KafkaTools.TableType | The expected table type of the resultant table. |

Returns

An in-memory table.

Examples

In the following example, consumeToTable is used to read the Kafka topic test.topic into a Deephaven table. KafkaTools.Consume.FROM_PROPERTIES tells Deephaven to infer the key and value column types from the properties passed in.

- The host and port for the Kafka server to use to bootstrap are specified by kafkaProps.
  - The value redpanda:9092 corresponds to the current setup for development testing with Docker images (which uses an instance of Redpanda).
- The topic name is test.topic.
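A minimal sketch of this first example follows. It assumes a Deephaven Groovy session with a broker reachable at redpanda:9092. It also assumes that the `deephaven.key.column.type` and `deephaven.value.column.type` properties are what FROM_PROPERTIES reads, and that `KafkaTools.ALL_PARTITIONS`, `KafkaTools.ALL_PARTITIONS_DONT_SEEK`, and `KafkaTools.TableType.append()` are available in your version (older releases may spell the table type differently). The `String` column types are illustrative.

```groovy
import io.deephaven.kafka.KafkaTools

// Connection settings plus the key/value column types that FROM_PROPERTIES picks up.
kafkaProps = new Properties()
kafkaProps.put('bootstrap.servers', 'redpanda:9092')
kafkaProps.put('deephaven.key.column.type', 'String')    // assumed key type
kafkaProps.put('deephaven.value.column.type', 'String')  // assumed value type

result = KafkaTools.consumeToTable(
    kafkaProps,
    'test.topic',
    KafkaTools.ALL_PARTITIONS,             // consume every partition
    KafkaTools.ALL_PARTITIONS_DONT_SEEK,   // keep the consumer's default start offsets
    KafkaTools.Consume.FROM_PROPERTIES,    // key spec inferred from kafkaProps
    KafkaTools.Consume.FROM_PROPERTIES,    // value spec inferred from kafkaProps
    KafkaTools.TableType.append()
)
```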

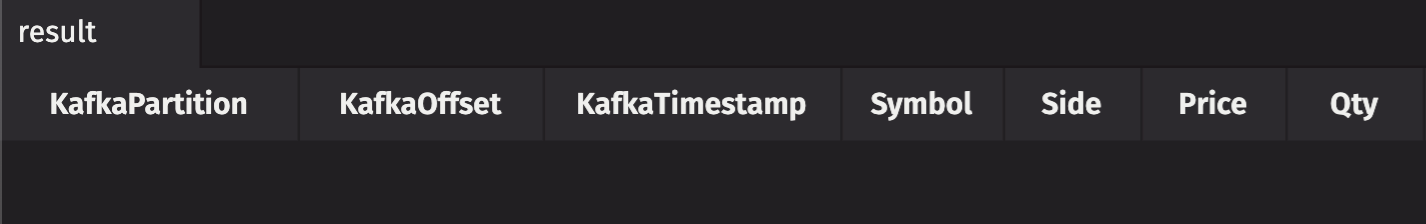

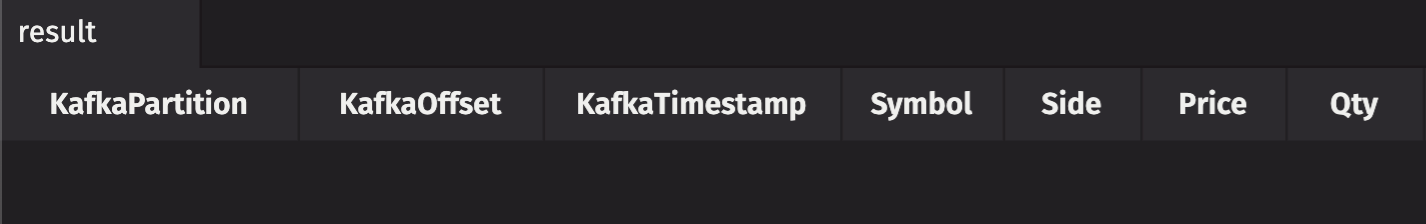

In the following example, consumeToTable is used to read the Kafka topic share_price with key and value specifications.

- The key column name is Symbol, which will be String type.
- The value column name is Price, which will be double type.
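The key and value specifications described above might be written as follows. This is a sketch under assumptions: it uses `KafkaTools.Consume.simpleSpec(columnName, dataType)` to name and type each column, reuses the redpanda:9092 bootstrap address from the first example, and picks an append table type for illustration.

```groovy
import io.deephaven.kafka.KafkaTools

kafkaProps = new Properties()
kafkaProps.put('bootstrap.servers', 'redpanda:9092')

result = KafkaTools.consumeToTable(
    kafkaProps,
    'share_price',
    KafkaTools.ALL_PARTITIONS,
    KafkaTools.ALL_PARTITIONS_DONT_SEEK,
    KafkaTools.Consume.simpleSpec('Symbol', String.class),  // key column: Symbol, String
    KafkaTools.Consume.simpleSpec('Price', double.class),   // value column: Price, double
    KafkaTools.TableType.append()
)
```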

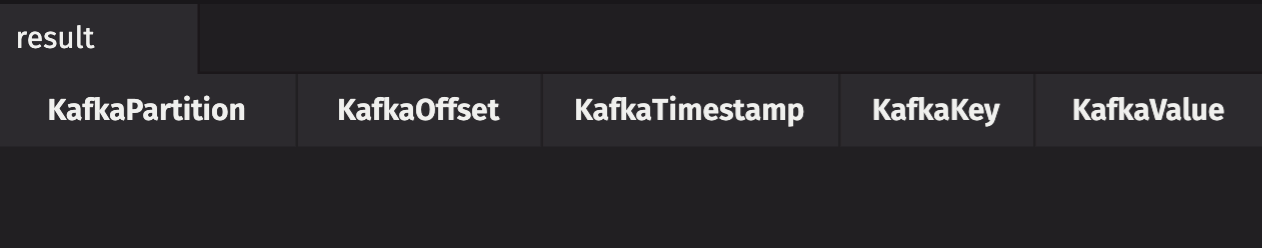

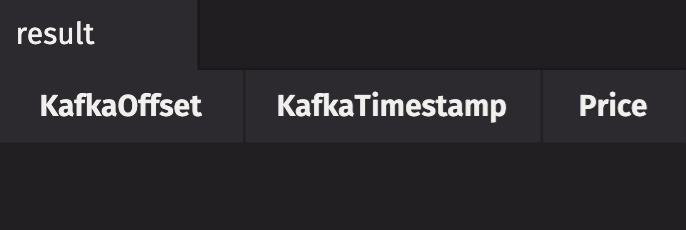

The following example sets the deephaven.partition.column.name property to null, which omits the partition column.

- As a result, the table has no column for the partition field.
- The key specification is also set to IGNORE, so result does not contain the Kafka key column.

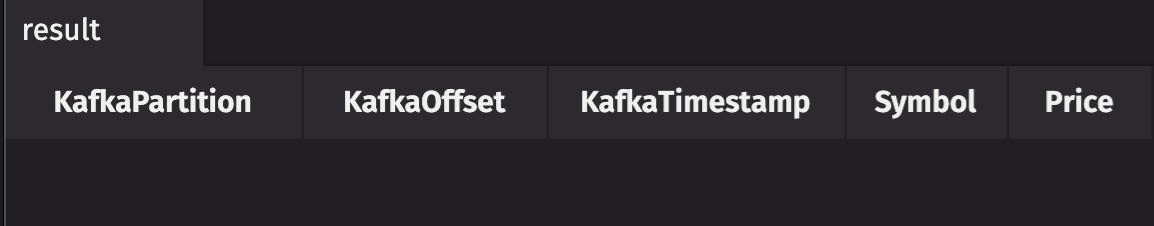

In the following example, consumeToTable reads the Kafka topic share_price in JSON format.
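A hedged sketch of a JSON value specification is shown here. The column set (Symbol and Price) is hypothetical, chosen to mirror the earlier share_price example, and the JSON field names are assumed to match the column names exactly. It relies on `KafkaTools.Consume.jsonSpec` accepting an array of `ColumnDefinition` objects; check the signature against your Deephaven version.

```groovy
import io.deephaven.engine.table.ColumnDefinition
import io.deephaven.kafka.KafkaTools

kafkaProps = new Properties()
kafkaProps.put('bootstrap.servers', 'redpanda:9092')

// Hypothetical columns to extract from each JSON record value.
ColumnDefinition[] colDefs = [
    ColumnDefinition.ofString('Symbol'),
    ColumnDefinition.ofDouble('Price')
]

result = KafkaTools.consumeToTable(
    kafkaProps,
    'share_price',
    KafkaTools.ALL_PARTITIONS,
    KafkaTools.ALL_PARTITIONS_DONT_SEEK,
    KafkaTools.Consume.IGNORE,             // record keys are not mapped to a column
    KafkaTools.Consume.jsonSpec(colDefs),  // values parsed as JSON into colDefs
    KafkaTools.TableType.append()
)
```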

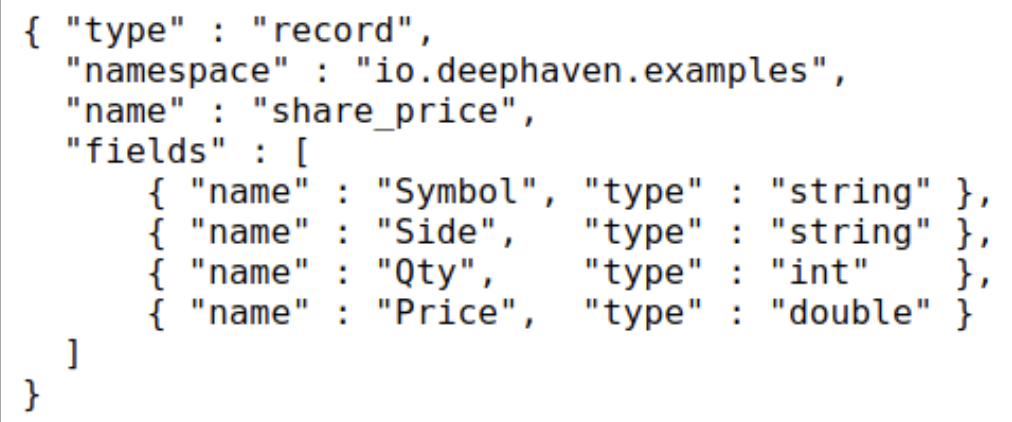

In the following example, consumeToTable reads the Kafka topic share_price in Avro format. The schema name and version are specified.
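One way this could look is sketched below. The schema name share_price_record, the version 1, and the registry URL are hypothetical values; substitute whatever is registered in your schema registry. The sketch assumes `KafkaTools.Consume.avroSpec(schemaName, schemaVersion)` looks the schema up through the `schema.registry.url` property.

```groovy
import io.deephaven.kafka.KafkaTools

kafkaProps = new Properties()
kafkaProps.put('bootstrap.servers', 'redpanda:9092')
kafkaProps.put('schema.registry.url', 'http://redpanda:8081')  // hypothetical registry address

result = KafkaTools.consumeToTable(
    kafkaProps,
    'share_price',
    KafkaTools.ALL_PARTITIONS,
    KafkaTools.ALL_PARTITIONS_DONT_SEEK,
    KafkaTools.Consume.IGNORE,                               // record keys are not mapped to a column
    KafkaTools.Consume.avroSpec('share_price_record', '1'),  // hypothetical schema name and version
    KafkaTools.TableType.append()
)
```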