Data routing YAML format

There are many ways to configure the storage, ingestion, and retrieval of data in Deephaven.

The information governing the locations, servers, and services that determine how data is handled is a collection of YAML files stored in etcd. Data Import Server configurations can be managed and stored as separate configuration items. The rest of the configuration is stored in a single "main" routing file. Like most Deephaven configuration, this routing information is stored in etcd and accessed via the configuration service.

See dhconfig routing and dhconfig dis for information about managing the data routing configuration.

Note

New features sometimes require new syntax. Deephaven strives to continue supporting data routing configuration files in "legacy" format, but you may not be able to use new features until making updates.

However, if you change a section of the YML file to use a new feature, you may have to make additional changes to bring the section into the current file format. See Update data routing configuration syntax for some examples.

YAML file format

The YAML data format is designed to be human-readable. However, some aspects are not obvious to the unfamiliar reader. The following pointers will make the rest of this document easier to understand.

- YAML leans heavily on maps and lists.

- A string followed by a colon (e.g.,

filters:) indicates the name of a name-value pair (the value can be a complex type). This is used in "map" sections. - A dash (e.g.,

- name) indicates an item in a list or array. In this structure, the item is often a map. However, the item can be any type, simple or complex. Maps are populated with key:value sets, and the values can be arbitrary complex types. - Anchors are defined by

&identifier, and they are global to the file. Anchors might refer to complex data. - Aliases refer to existing anchors (e.g.,

*identifier). - Aliased maps can be spliced in (e.g.,

<<: *DIS-default). In the context below, all the items defined by the map with anchorDIS-defaultare duplicated in the map containing the<<:directive.

The Data Routing Service needs certain data to create a system configuration. It looks for that data under defined sections in a document composed of the component YAML files.

Note

See: The full YAML specification can be found at https://yaml.org/spec/1.2/spec.htm.

Data types

Only a few of the possible data types are used by Deephaven and mentioned in this document:

- List (or sequence) - consecutive items beginning with

-. - Map - set of "name: value" pairs.

- Scalars - single values, mainly integer, floating point, boolean, and string.

The YAML parser will guess the data type for a scalar, and it cannot always be correct. The main opportunity for confusion is with strings that can be interpreted as other data types. Any value can be clarified as a string by enclosing it in quotation marks (e.g., "8" puts the number in string format).

From now on, this guide simply refers to scalars as values or their value types (e.g., string).

Note

See: More information about data types can be found at https://yaml.org/spec/1.2/spec.html#id2759963.

Placeholder values

Any value of the form <env:TOKEN> or <prop:property_name> will be replaced by the value of the named environment variable or property. Note that this is not standard YAML, but rather a Deephaven extension to allow for customization in certain environments.

For example:

Sections

The data routing configuration is effectively a YAML document with sections, which we define as important maps and lists directly under the root "config" map.

The document must contain this "config" map, which must have the following sections:

storagelogAggregatorServerstableDataServicesdataImportServers(optional)

dataImportServers is optional in the main file and may be empty. Configuration in this section can now be managed separately with the dhconfig dis command. See also Extract a DIS from the data routing configuration file for help extracting configurations from this section. The content and rules for this section are the same whether a Data Import Server is defined inline or separately.

Optional sections may be included in the YAML file that define anchors and default values (e.g., "anchors"), and those that combine default data into one location (e.g., "default"). Each section is discussed below.

Note

With the introduction of Dynamic Data Routing, the representation of endpoints (host and port) has changed. See Dynamic data routing and endpoint configuration in YAML for detailed information on legacy formats and what is supported.

Anchors

Anchors can be defined and then referenced throughout the data routing configuration file. These can represent strings, numbers, lists, maps, and more. In the example below, we use them to define names for machine roles and port values. In a later section, they define the default set of properties in a DIS.

The syntax &identifer defines an anchor, which allows "identifier" to be referenced elsewhere in the document. This is often useful to concentrate default data in one place to avoid duplication. The syntax *identifier is an alias that refers to an existing anchor. Note: because anchors are global, the names must be unique.

See the example "anchors" section below. This collection of anchors defines the layout of the cluster, defines default values for ports that were previously defined in properties files, and defines default values for DIS configurations that will be referenced later in the document (see Data Import Servers below for a detailed explanation of these values):

Note

Anchors can be defined anywhere in the YAML file. However, the anchors must be defined earlier in the file than where they are used. For example, the hosts and default ports could also be defined in their own sections (e.g., "hosts" and "defaultPorts") rather than consolidated into one section.

Storage

This required section defines locations where Deephaven data will be stored. This includes the default database root and any alternate locations used by additional Data Import Servers.

Note

Many components still get the root location from properties (e.g., OnDiskDatabase.rootDirectory).

For example:

The value of this section must be a list of maps containing:

name: [String]- Other parts of the configuration will refer to a storage instance by this name.dbRoot: [String]- This refers to an existing directory.

Data Import Servers

This section supports Data Import Server (DIS) and Tailer processes.

Note

Deephaven recommends using the dhconfig dis command to manage DIS configurations. This section applies to independently managed Data Import Servers as well as to those embedded in this section of the data routing file.

The DIS process loads the configuration matching its process.name property (e.g., db_dis). The configuration names can be set to match the process names in use. This can be overridden with the property DataImportServer.routingConfigurationName.

The Tailer process uses this configuration section to determine where to send the data it tails. Note that a given table might have multiple DIS destinations.

The two consumers have slightly different needs, since the DIS is also a service provider, while the tailer is only a consumer.

The following example defines two Data Import Servers. Note that the defaults defined in the previous section are imported into each map entry. Defaults defined by anchors and aliases do not work across files and, therefore, do not apply to separately managed DIS configurations.

Note

The value of this section must be a map. The keys of the map will be used as Data Import Server names. The set of valid characters for a DIS name differs slightly for those configured in the routing file versus those configured separately. Avoid special characters for simplicity. The value for each key is also a map:

| Name | Type | Description |

|---|---|---|

endpoint | Map | - This defines all the endpoints (host and ports) supported by the Data Import Server. endpoint attributes include: |

- serviceRegistry (String) - (Optional) Valid values are none and registry. noneis the default value and indicates a static configuration. registry indicates that the configured endpoints will be registered and retrieved at runtime. | ||

-host (String) - This is required if theserviceRegistryvalue isnone. The tailer will connect to this data import server on the given host and tailer port. | ||

- tailerPortDisabled(boolean) - (Optional) If present andtrue, the DIS will not accept tailed data (or commands). | ||

- tableDataPortDisabled(boolean) - (Optional) If present and true, the DIS will not start or register a table data service (TDS). | ||

- tailerPort (int) - This indicates the port for tailing data. -1 implies tailerPortDisabled: true. Required unless tailing is disabled or serviceRegistry = registry. | ||

- tableDataPort (int) - This indicates the port where the Table Data Service will be hosted. -1 implies tableDataPortDisabled: true. Required unless disabled or serviceRegistry = registry. | ||

- tableDataPortEnabled (boolean) - Deprecated. Use tableDataPortDisabled where needed. | ||

- tailerPortEnabled (boolean) - Deprecated. Use tailerPortDisabled where needed. | ||

throttleKbps | int | (Optional) If omitted or set to -1, then there is no throttling. |

storage | String | This must be the name of a storage instance defined in the storage section, or private. This Data Import Server will write data in the location specified by this storage instance. The special value private indicates that the DIS will determine where to store data via some other configuration and that the data will not be readable by any local table data service. When private storage is selected, an in-worker DIS must retrieve the DIS configuration with the com.illumon.iris.db.v2.routing.DataRoutingService.getDataImportServiceConfigWithStorage method instead of the com.illumon.iris.db.v2.routing.DataRoutingService.getDataImportServiceConfig method. |

userIntradayDirectoryName | String | (Optional) Intraday user data will be stored in this folder under the defined storage. If not specified, the default is "Users". |

filters | filter definition | (Optional) This filter determines the tables for which tailers will send data to this Data Import Server. filters is not allowed with claims for a given DIS entry. |

claims | map or list of maps | (Optional) Indicates that specific namespaces or tables are handled exclusively by this DIS, or failoverGroup if one is specified. claims is not allowed with filters for a given DIS entry. |

failoverGroup | String | (Optional) Indicates that this DIS is part of a failover group. The group is defined as all DISes with the same value for failoverGroup. |

tags | list | (Optional) Strings that are used to categorize this Table Data Service. |

description | String | (Optional) Text description of this Table Data Service. This text is displayed in user interface contexts. |

properties | String | (Optional) Map of properties to be applied to this DIS instance. See full details below. |

webServerParameters | map | (Optional) This defines an optional web server for internal status. Properties in this section are: |

enabled (boolean) - (Optional) If set, and true, then a webserver will be created. | ||

port (int) - (Optional) This is required if the serviceRegistry value in endpoints is not registry and enabled is true. The webserver interface will be started on this port. | ||

authenticationRequired (boolean) - (Optional) Defaults to true. | ||

sslRequired (boolean) - (Optional) Defaults to true. If authenticationRequired is true, then sslRequired must also be true. |

Failover groups

A failover group defines multiple DISes that process the same data and can serve as backups to each other. You can create a failover group using the failoverGroup keyword in each member DIS configuration. All DISes that

include the same value for failoverGroup will be treated as equivalent sources for matching data. The Table Data Service (TDS) will request data from only one participating DIS at a time. If the current source becomes unavailable, the TDS will send the request to another. All participating DISes must have identical filters.

You can also define a failover group inline in the sources of a TDS by listing the member names in a list (no longer recommended).

Deephaven recommends explicit configuration using the failoverGroup keyword to define the group and create a TDS with the given name.

Note that this ad-hoc configuration is equivalent in behavior:

However, in this configuration, importer1 and importer2 require special handling when dataImportServers is used in the configuration, so this option is no longer recommended.

DIS properties

The properties value is a map of properties to be applied to this Data Import Server instance.

Valid properties include:

requestTimeoutMillis: intStringCacheHint.<various>

Data Import Servers have string caches, which are configured with properties starting with DataImportServer.StringCacheHint:

DataImportServer.StringCacheHint.tableNameAndColumnNameEquals_DataImportServer.StringCacheHint.columnNameEquals_DataImportServer.StringCacheHint.columnNameContains_DataImportServer.StringCacheHint.tableNameStartsWith_DataImportServer.StringCacheHint.default

These properties define the global default values, but they are augmented and overridden in any given Data Import Server configuration by properties starting with StringCacheHint:

StringCacheHint.tableNameAndColumnNameEquals_StringCacheHint.columnNameEquals_StringCacheHint.columnNameContains_StringCacheHint.tableNameStartsWith_StringCacheHint.default

For example:

Log Aggregator Servers

This section defines the Log Aggregator Servers. Unlike the way a Tailer uses DIS entries, only one LAS will be selected for a given table location. This allows a section with a specific filter to override a following section with more general filters. The LAS process will load the configuration matching its process.name property. That can be overridden with the property LogAggregatorService.routingConfigurationName.

The following example shows one service for user data (rta) and a local service for everything else:

The value for this section must be a list and should be an ordered list. The !!omap directive ensures that the datatype is an ordered list. Consumers of the Log Aggregator Service send data to the first server in the list for which the filter matches.

Each item in the list is a map, where the key is taken to be the name of a Log Aggregator Server.

The value of each item within the map is a key-value pair:

endpoint: [map]- This defines the endpoint (host and port) supported by the Log Aggregator Server that clients would connect to.endpointattributes include:serviceRegistry: [String]- (Optional) Valid values arenoneandregistry.noneis the default value and indicates a static configuration.registryindicates that the configured endpoint will be registered and retrieved at runtime.host: [String]- Required unless serviceRegistry is registry. This is oftenlocalhost.port: [int]- Required unless serviceRegistry is registry.

filters: [filter definition]- (Optional) This filter defines whether data for a given table should be sent to this server.properties:- (Optional) Map of properties to be applied to this Log Aggregator instance. See below.

Warning

The default log aggregator configuration (as illustrated in the example above) has some implications that are not obvious.

Usually, every Deephaven server runs a Log Aggregator Service, configured with the log_aggregator_service entry. Consumers on that machine will target the LAS on localhost.

Logging to user tables requires some ordering guarantees across all sources, and that requires all user data to use the same LAS instance. This data is directed to the LAS configuration rta, which is configured with an endpoint on a specific host. In most configurations, the rta LAS is not a real service. Rather, it is the default LAS (log_aggregator_service configuration) running on the host identified by *ddl_rta.

Unless further configuration changes are made, the two LAS configurations above must use the same port, and cannot use dynamic endpoints (serviceRegistry: registry).

LAS properties

The properties value is a map of properties to be applied to this log aggregator instance.

Valid properties include:

binaryLogTimeZonemessagePool.minBufferSizemessagePool.maxBufferSizemessagePool.bucketSizeBinaryLogQueueSink.pollTimeoutMsBinaryLogQueueSink.idleShutdownTimeoutMsBinaryLogQueueSink.queueSize

See Log Aggregator Service for more information.

Table Data Services

This is the most important section and requires careful set-up.

This section supports both providers and consumers of the Table Data Service (TDS) protocol. Providers include Data Import Servers, the Table Data Cache Proxy (TDCP), and the Local Table Data Service (LTDS). All data import servers are implicitly TDS providers unless that function is disabled. The TDCP and LTDS load their TDS configurations from the TableDataService section by name, using the process.name property, or one of the optional overriding properties:

TableDataCacheProxy.routingConfigurationNameLocalTableDataServer.routingConfigurationName

Any TDS that will be used by a consumer process (query server, merge process, etc.) must have filters configured so that any table location will be provided by exactly one source, either because of filter exclusions or because of local availability of on-disk data.

A table location is composed of a namespace, tableName, internalPartition, and columnPartition. A TDS can be configured to provide specific column partition values (e.g., >= currentDate), and another TDS to provide locations for the other column partition values (e.g., < currentDate).

For example:

Note

It is very important to configure filters here to route data to the correct, unique source. See Claims and filters for some pitfalls to avoid.

The value for this section must be a map. The key of each entry is used as the name of the Table Data Service.

The value of each item is also a map:

| Property | Type | Description |

|---|---|---|

endpoint | [map] | (Optional) This defines the endpoint of a remote table data service. endpoint attributes include: |

- serviceRegistry (String) - (Optional) Valid values are none and registry. none is the default value and indicates a static configuration. registry indicates that the configured endpoint will be registered and retrieved at runtime. | ||

- host (String) - Required unless serviceRegistry is registry. This is often localhost. | ||

- port (int) - Required unless serviceRegistry is registry. | ||

storage | [String] | (Optional) Either storage or sources must be specified. If present, this must be the name of a defined storage instance. This is valid for a local Table Data Service, or a Local Table Data Proxy. |

sources | [list] | (Optional) Either storage or sources must be specified. sources defines a list of other table data services that will be presented as a new table data service. Each source has a name and an optional filters. The filter here applies in addition to any filter defined on the named source. |

name | [String or List] | A string value refers to a configured table data service, DIS, or failover group. A list indicates an ad-hoc failover group (multiple configured table data services that are deemed to be equivalent). |

filters | [filter definition] | (Optional) In a composed table data service, it is essential that data is segmented to be non-overlapping via filters and data layout. A given table location should not be served by multiple table data services in a group. |

tags | [list] | (Optional) Strings that are used to categorize this Table Data Service. |

description | (Optional) | Text description of this Table Data Service. This text is displayed in user interface contexts. |

properties | (Optional) | Map of properties to be applied to this Table Data Service instance. See below. |

Important

The service registering an endpoint must be authenticated. If a table data provider (e.g., TDCP or LTDS) is configured with serviceRegistry: registry, the service must also be configured with an authentication key.

The dataImportServers keyword

dataImportServers can be used as a source name to represent all known Data Import Servers and failover groups.

It is dynamic, and automatically includes any DISes added with dhconfig dis.

You can exclude specific DISes or groups with the except keyword.

Ad-hoc failover groups are not handled and will cause location overlap TableDataExceptions. These DISes are explicitly included in a TDS (that is how they are defined as a group) and they must be excluded from dataImportServers with except.

This is a typical example:

TDS properties

The properties value specifies properties to be applied to this Table Data Service instance.

Valid properties for LocalTableDataService instances include:

tableLocationsRefreshMillistableSizeRefreshMillisrefreshThreadPoolSizeallowLiveUserTables

Note: The following properties apply only to standalone uses of the LocalTableDataService:

LocalTableDataService.tableLocationsRefreshMillisLocalTableDataService.tableSizeRefreshMillis=1000LocalTableDataService.refreshThreadPoolSize

Valid properties for RemoteTableDataService instances include:

requestTimeoutMillis

Tags and descriptions

All Table Data Service configuration sections support tags and descriptions. Data Import Servers are implicitly Table Data Services, and tags and descriptions apply there as well.

This information is used to influence the user interface in several places.

In general:

- The

[description]item provides explanatory text that can be displayed along with the name. - A

[tag]provides one or more labels that can be used to categorize the tagged item.

The default tag identifies an item in a set to be used as the default selection.

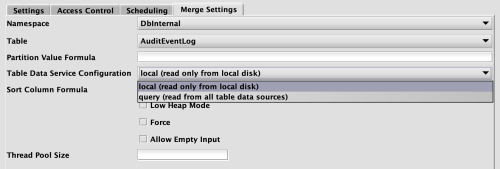

Persistent Query type "Data Merge"

When "Data Merge" is selected as the Configuration Type in the Persistent Query Configuration Editor, the Merge Settings tab becomes available. This tab includes a drop-down list for Table Data Service Configuration.

Items in this list show any configured descriptions. Note that:

- If any Table Data Service configurations have the tag

merge, then only instances with that tag will be included in the drop-down list. - If any Table Data Service configuration has the tag

default, that item will be selected by default for a new query of this type.

For example:

The default selection is local. Because local and query include the tag merge, they appear in the drop-down list below. The default selection is local:

Claims

Claiming a table

Claims provide a method for tailers and data readers to identify the DIS associated with a particular table. Claims simplify data routing configuration, as compared to filters.

Claim a table or namespace for a DIS by adding claims to the configuration section for that DIS. This can be done automatically with dhconfig dis.

The tableName is optional in the claim. If omitted, or if the value is *, then all tables in the namespace are claimed. If given, only that single table is claimed.

Each DIS may claim multiple tables or namespaces. Any number of DISes may claim distinct tables or namespaces. A DIS that uses filters may not make claims and vice versa.

A DIS with claims handles only data for those claims. No other DIS (except in the same failover group) handles data for those claimed tables.

Multiple claims on the same table or namespace are not allowed (but see failover groups below).

Overriding claims

A claim may be made for a single table, or for an entire namespace. A single table claim overrides a namespace claim. All claims in the file are evaluated together, so the order is not meaningful.

In this example, dis_claims_kafka_t1 is the source for Kafka.table1, and dis_claims_kafka is the source for all other tables in the Kafka namespace.

Claims and filters

The notion of tables being claimed by specific DISes is enforced at a global level. This means that the claimed tables do not need additional exclusions in the configuration file to determine tailer targets (to which DISes should this data be sent) and table data services (TDS) (from which DIS or service should this data be requested).

Important

Data Import Servers only supply online data. Claims and related filtering only apply to online data.

Claims have the following implications. Note that a DIS is implicitly also a Table Data Service (TDS) as it both ingests data and serves it to other processes:

Data Import Servers:

- If a claim is made, the implicit DIS filter is "have I claimed this table?"

- If no claims are made, the DIS filter is "is the table unclaimed?" plus any filter specified.

When composing Table Data Services (sources tag):

- An ad-hoc failover group (

[tds1, tds2]) is filtered by any filter given here, plus the filters on the DISes themselves. - A source named

dataImportServerscauses the section to be repeated for all applicable DISes and failover groups. - If a filter is not provided:

- A named failover group is filtered with "is claimed by this group".

- A named DIS is filtered with that DIS's filter, which will be an is-claimed or is-not-claimed filter.

- If a filter is provided, that filter will be used for the named source, in addition to the supplied by the source itself.

Warning

There are two significant errors to avoid.

- Two data sources are not permitted to provide the same table location. This may be more complicated than it appears; to improve efficiency with large data sets, Deephaven does not read all the files under column partition directories. Table locations that might exist are reported for certain operations, and this can count as an overlap. This condition produces a TableDataException.

- If you have table data providers that are not available (common for in-worker import servers that are not running), then any process that requests data from that TDS will time out waiting for data. This can happen for seemingly unrelated tables if the filter configuration routes any request incorrectly to a provider.

Be careful to avoid these errors when providing filters that override claims.

Data filters

Filters specify which services apply to a given data location, or which locations apply to a given service. In the filters section, which may be used within many of the primary sections discussed above, a single filter may be defined, or an array of filters. A location will be accepted if any defined filter accepts it.

There are two ways to specify these filters: using Query Language or Attribute Values. The filter attributes for the two modes are mutually exclusive.

Query language filters

Use filter attributes whereTableKey and whereLocationKey to define table and location keys with the following attributes:

| Attribute | Type | Description |

|---|---|---|

NamespaceSet | String | NamespaceSet is User or System, and divides tables and namespaces between User and System. |

Namespace | String | The table namespace. |

TableName | String | The table name. |

Online | Boolean | Online tables are those that are expected to change or tick. This includes system intraday tables and all user tables. |

Offline | Boolean | Offline tables are those that are expected to be historical and unchanging. This is system data for past dates and all user tables. Online and Offline categories both include user data, so Offline is not the same as !Online. |

InternalPartition | String | The internal partition. |

ColumnPartition | String | whereLocationKey queries apply to the Location Key (InternalPartition and ColumnPartition) associated with a given Table Key. |

If your sequence of filters relies on externally changing values such as the date, then you must provide a consistent view of those values for each routing decision. For the common case of currentDateNy(), you can use the alternative com.illumon.iris.db.v2.locations.CompositeTableDataServiceConsistencyMonitor.consistentDateNy() function. For arbitrary functions, you guard instances with the FunctionConsistencyMonitor defined in com.illumon.iris.db.v2.locations.CompositeTableDataServiceConsistencyMonitor.INSTANCE.

Query language filter examples

The following example filters to System namespaces except LASTBY_NAMESPACE, for the current date and the future:

The next example filters to the same tables, but for all dates before the current date:

Unlike the first two filters, the following example includes all locations for these tables:

Attribute Values filter

This type of filter allows you to stipulate specific values for the named attributes. Since multiple filters can be specified disjunctively, you can build an inclusive filter by specifying the parts you want included in separate filters.

The attributes for a filter are:

| Attribute | Description |

|---|---|

namespaceSet | The namespace set that the table namespace belongs to - either User or System. |

namespace | The table namespace. The value must match an existing namespace or it will throw an error. |

tableName | The table name. The value must match an existing table name or it will throw an error. |

online | true or false. online: false means historical system data or any user data. online: true means intraday system data or any user data. |

class | This specifies a fully qualified class name that can be used to evaluate locations. This class must implement DataRoutingService.LocationFilter or DataRoutingService.TableFilter, or both (which is defined as DataRoutingService.Filter). |

A filter may define zero or more of these fields. A value of "*" is the same as not specifying the attribute. A filter will accept a location if all specified fields match.

Attribute values filter examples

There are several ways to specify filters in the YAML format, as illustrated in the examples below. These examples are in the context of a tableDataService sources entry.

Inline map format:

Map elements on separate lines:

An array of filters. Each filter can be inline or on multiple lines:

An empty filter:

No filter (same as an empty filter):

YAML file validation tool

The Deephaven configuration tool, dhconfig, validates data routing configuration files before importing them. This tests for various common errors in the YAML files, and verifies that they are valid together. Validation is also performed when importing a routing file. See dhconfig dis and dhconfig routing for more details.

Add --verbose to get more detail about any parsing errors.

Example Deephaven data routing configuration file

Note

See Dynamic data routing and endpoint configuration in YAML for a detailed explanation of the endpoints tag.