Each morning, the world waits to see if a 13-year-old pug named Noodle is having a “Bones day” or a “No Bones day.” Over the last few months, Noodle’s owner, Jonathan, has announced Bones/No Bones days on TikTok. Each verdict depends on Noodle's reaction after Jonathan picks him up from his dog bed.

If Noodle remains standing when Jonathan pulls away, it is a Bones day. This has come to symbolize a productive day and permission to take risks. However, if Noodle falls down, it is a No Bones day, and viewers are encouraged to indulge in self-care or enjoy a lazy, relaxing day.

Users across social media have rallied around this concept, eagerly awaiting Noodle's pronouncements, especially if an important event is coming up, like a job interview or even their wedding day. You may be wondering: Is there rhyme or reason to Bones vs. No Bones days?

Right now, there are relatively few data points (by our team’s count, we have fewer than 40 readings), but there is enough to start building a predictive model that can help guide our days when Noodle hasn’t posted or when we have an important date coming up (which ideally would fall on a Bones day).

Today was a 'Bones Day'!

Historical data alone is not enough to predict the future

A simple pattern analysis would rely solely on Noodle’s readings to date. So far, those readings are split almost evenly, so the odds of a Bones day are about the same as a coin flip. If you are in desperate need of a break and are waiting for the next No Bones day, knowing that it’s a 50/50 shot doesn’t help you much.
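To see why an even split across fewer than 40 readings says little, here is a minimal plain-Python sketch (not Deephaven code) that computes the share of Bones days and a rough 95% interval using the normal approximation for a proportion. The `bones_rate` function and its list-of-booleans input are hypothetical, and the 18-of-36 split is made-up illustration data, not Noodle's actual record.

```python
import math

def bones_rate(readings):
    """Return (share of Bones days, rough 95% interval).

    `readings` is a hypothetical list of booleans: True for a Bones day,
    False for a No Bones day.
    """
    n = len(readings)
    if n == 0:
        raise ValueError("need at least one reading")
    p = sum(readings) / n
    # Standard error of a sample proportion (normal approximation).
    se = math.sqrt(p * (1 - p) / n)
    low = max(0.0, p - 1.96 * se)
    high = min(1.0, p + 1.96 * se)
    return p, (low, high)

# Made-up example: 18 Bones days out of 36 readings.
example = [True] * 18 + [False] * 18
p, (low, high) = bones_rate(example)
print(f"Bones share: {p:.0%}, 95% interval: {low:.0%} to {high:.0%}")
# Bones share: 50%, 95% interval: 34% to 66%
```

With this few readings the interval covers about a third of the possible range, so the historical split alone cannot distinguish a true coin flip from a modest lean either way. The normal approximation is itself rough at small sample sizes.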

As data lovers at Deephaven, we realized that a number of variables could influence the likelihood of a Bones day or No Bones day, such as the weather or Noodle’s age.

For more precise forecasts, joining Noodle’s readings with richer data tables, such as historical weather data and patterns we already know, helps isolate the factors that influence Bones or No Bones days. Take weather: Noodle has not yet given a reading on a snowy day, but we could better predict whether the first snow day of the year will be a Bones or No Bones day by accounting for precipitation, high and low temperatures, and other conditions.
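A categorical table such as number_per_weather has no row for snow, so it cannot say anything about the first snow day. Describing each day by numeric weather features instead lets a model compare a snowy day with the cold, wet days it has already seen. The sketch below uses k-nearest neighbors, a simple technique chosen here for illustration rather than anything this post or Deephaven prescribes. The `predict_knn` function, the feature scaling, and every number in `HISTORY` are hypothetical.

```python
import math
from collections import Counter

# Hypothetical past readings: (precip_in, temp_high_f, temp_low_f, label).
# These numbers are invented for illustration, not real NYC weather or Noodle data.
HISTORY = [
    (0.00, 78, 62, "Bones"),
    (0.00, 74, 60, "Bones"),
    (0.40, 65, 55, "No Bones"),
    (0.80, 58, 48, "No Bones"),
    (0.05, 70, 57, "Bones"),
    (0.60, 45, 36, "No Bones"),
]

def predict_knn(precip, high, low, history=HISTORY, k=3):
    """Majority vote among the k past days with the most similar weather."""
    def distance(row):
        p, h, l, _ = row
        # Scale precipitation so one inch counts as much as 10 degrees F (an arbitrary choice).
        return math.sqrt(((p - precip) * 10) ** 2 + (h - high) ** 2 + (l - low) ** 2)

    nearest = sorted(history, key=distance)[:k]
    votes = Counter(label for *_, label in nearest)
    return votes.most_common(1)[0][0]

# A first snow day: no snowy day exists in HISTORY, but its numbers can still be compared.
print(predict_knn(precip=0.5, high=34, low=25))  # No Bones
```

The weighting between precipitation and temperature is a design choice that would need tuning against real readings; with only a few dozen days, any such model should be treated as a rough guide.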

Because both historical and real-time weather data are available, Deephaven Community Core can combine these data streams to create a more accurate picture of Bones/No Bones days.

In fact, we believe you can combine the historical record of Noodle’s readings, an understanding of probability, and real-time data to estimate the likelihood that a given day will be a Bones or No Bones day.
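One way to combine historical readings with probability is a naive Bayes classifier: it weighs the overall frequency of Bones days by how often each condition (day of week, weather) appeared on Bones days versus No Bones days. This is a swapped-in technique for illustration, not the method behind the tables in this post. The `NaiveBayesBones` class is hypothetical, it assumes day and weather are independent once you know the label, and the four training readings in the usage example are made up.

```python
from collections import Counter, defaultdict

class NaiveBayesBones:
    """Hypothetical naive Bayes over day of week and weather, with add-one smoothing."""

    LABELS = ("Bones", "No Bones")

    def __init__(self):
        self.label_counts = Counter()
        # feature_counts[label][(feature_name, value)] -> number of readings
        self.feature_counts = defaultdict(Counter)
        self.values = defaultdict(set)  # distinct values seen for each feature

    def add(self, day, weather, label):
        """Record one reading; call again whenever a new reading arrives."""
        self.label_counts[label] += 1
        for name, value in (("day", day), ("weather", weather)):
            self.feature_counts[label][(name, value)] += 1
            self.values[name].add(value)

    def prob_bones(self, day, weather):
        """Estimated probability that a day with these conditions is a Bones day."""
        total = sum(self.label_counts.values())
        scores = {}
        for label in self.LABELS:
            n = self.label_counts[label]
            score = (n + 1) / (total + len(self.LABELS))  # smoothed prior
            for name, value in (("day", day), ("weather", weather)):
                k = len(self.values[name] | {value})  # include an unseen query value
                score *= (self.feature_counts[label][(name, value)] + 1) / (n + k)
            scores[label] = score
        return scores["Bones"] / sum(scores.values())

# Made-up readings for illustration only.
model = NaiveBayesBones()
for day, weather, label in [("Mon", "Sunny", "Bones"), ("Mon", "Rain", "No Bones"),
                            ("Tue", "Sunny", "Bones"), ("Tue", "Rain", "No Bones")]:
    model.add(day, weather, label)
print(model.prob_bones("Mon", "Sunny"))  # 0.75
```

Because `add` only updates counts, the estimates can be refreshed as each new reading comes in, and the add-one smoothing keeps an unseen condition (such as a first snow day) from producing a probability of exactly 0 or 1.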

As more readings come in, a predictor built with Deephaven Community Core (available on GitHub) should become increasingly accurate, giving us a clearer sense of what makes a Bones or No Bones day.

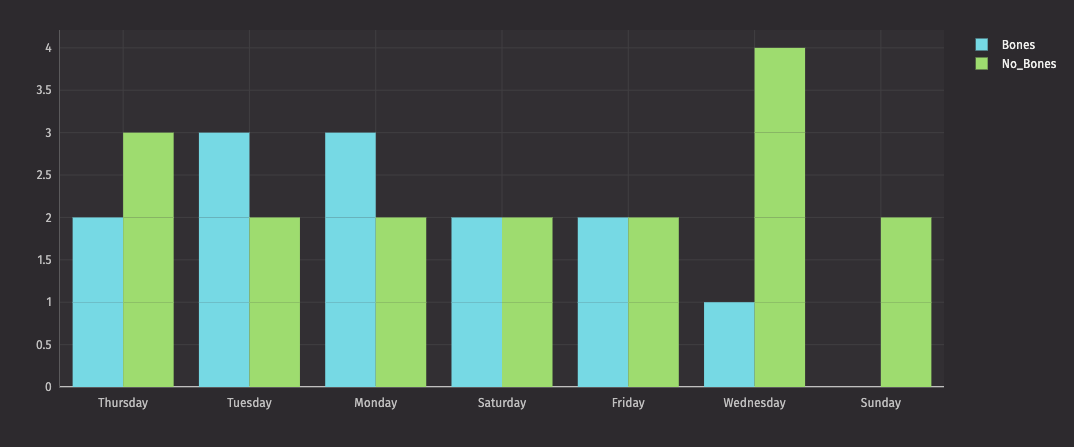

For this data, we look at just the day of the week and the weather. The number_per_day table shows that Mondays have been Bones days 60% of the time, and today is a Monday. The number_per_weather table shows that sunny days in NYC, like today, have been Bones days 45% of the time. However, Noodle seems to like Mondays a little more when it is sunny: past sunny Mondays were Bones days 67% of the time. All in all, we predict today will be a Bones day!
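Each percentage above is a group's Bones count divided by its total readings. A minimal plain-Python sketch of that arithmetic, plus a rule that prefers the most specific group (such as sunny Mondays) only when it has enough readings to trust, might look like this. The field names, the `min_readings` threshold, the fallback order, and the rows in `ROWS` are all assumptions for illustration, not part of the Deephaven example or Noodle's real data.

```python
from collections import defaultdict

# Hypothetical readings; field names and values are invented for illustration.
ROWS = [
    {"day": "Mon", "weather": "Sunny", "bones": 1},
    {"day": "Mon", "weather": "Sunny", "bones": 1},
    {"day": "Mon", "weather": "Sunny", "bones": 0},
    {"day": "Mon", "weather": "Rain", "bones": 0},
    {"day": "Tue", "weather": "Sunny", "bones": 0},
]

def bones_rates(rows, keys):
    """Map each group (a tuple of values for `keys`) to (Bones share, readings)."""
    totals = defaultdict(lambda: [0, 0])  # group -> [Bones days, readings]
    for row in rows:
        group = tuple(row[k] for k in keys)
        totals[group][0] += row["bones"]
        totals[group][1] += 1
    return {group: (b / n, n) for group, (b, n) in totals.items()}

# Most specific grouping first; () means "all readings".
LEVELS = (("day", "weather"), ("day",), ("weather",), ())

def predict(rows, day, weather, min_readings=3):
    """Return (Bones share, grouping used), preferring the most specific group
    with at least `min_readings` readings and falling back to all readings."""
    query = {"day": day, "weather": weather}
    for keys in LEVELS:
        group = tuple(query[k] for k in keys)
        share, n = bones_rates(rows, keys).get(group, (None, 0))
        if n >= min_readings or (keys == () and n > 0):
            return share, keys
    return None, ()

share, keys = predict(ROWS, "Mon", "Sunny")
print(f"{share:.0%} from grouping {keys}")  # 67% from grouping ('day', 'weather')
```

The threshold matters because a narrow group like sunny Mondays can contain only a handful of readings, so a single new reading can move its rate a lot.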

If you create your own predictor, let us know on Twitter or LinkedIn what data you incorporated and how accurate it is. But of course, Noodle would recommend saving this type of project for a Bones day.

Analyzing the impact of Bones/No Bones trend on other aspects of our lives

Taking the analysis a step further, you could look at stock market performance to see if Noodle's predictions have an impact. On Bones days, does the stock market go up? Does a run of No Bones days show a decrease in productivity in our economy?
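A first pass at the stock market question could simply compare the average daily return on Bones days with the average on No Bones days. The plain-Python sketch below assumes you have already paired each reading with that day's index return; the `compare_returns` function, its input format, and the sample numbers are hypothetical.

```python
from statistics import mean

def compare_returns(days):
    """Average daily market return on Bones days vs. No Bones days.

    `days` is a hypothetical list of (label, daily_return_pct) pairs, where
    label is "Bones" or "No Bones".
    """
    by_label = {"Bones": [], "No Bones": []}
    for label, ret in days:
        by_label[label].append(ret)
    # Skip a label with no days so mean() is never called on an empty list.
    return {label: mean(rets) for label, rets in by_label.items() if rets}

# Invented returns (percent change in a market index), not real market data.
sample = [("Bones", 0.4), ("Bones", -0.1), ("No Bones", -0.3), ("No Bones", 0.1)]
print(compare_returns(sample))
```

Even if the two averages differ, a few dozen days are not enough to separate a real relationship from noise, and a correlation on its own would not show that Noodle moves markets.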

Building an accurate picture from all of the data can illuminate the far-reaching implications of something fun, like whether it is a Bones or No Bones day, and it also highlights the need to make business decisions using both batch and real-time data.

Code example

- Visit our dedicated GitHub repository on "Bones/No Bones" to get the starter code and example data.

- Fork the Deephaven Core repository (if you haven't already!)

- Read our Quick Start guide for launching Deephaven with Docker.

- Launch Deephaven using one of the following options:

Launch Deephaven for Python

```bash
# Download the Python compose file, then pull the images and start Deephaven.
compose_file=https://raw.githubusercontent.com/deephaven/deephaven-core/main/containers/python-examples/base/docker-compose.yml
curl -O "${compose_file}"
docker compose pull
docker compose up -d
```

Launch Deephaven for Groovy

```bash
# Download the Groovy compose file, then pull the images and start Deephaven.
compose_file=https://raw.githubusercontent.com/deephaven/deephaven-core/main/containers/groovy-examples/docker-compose.yml
curl -O "${compose_file}"
docker compose pull
docker compose up -d
```

- Navigate to http://localhost:10000/ide/ and use the script below to open some tables in the Deephaven IDE.

```python
from deephaven import read_csv

# Load Noodle's readings from the Deephaven examples repository.
noodle_pug = read_csv("https://media.githubusercontent.com/media/deephaven/examples/4f15c29972ae216b5bd8077b5e3dc57351eccb27/NoodlePug/noodle_pug.csv")

# Sum the remaining Bones/No Bones columns across all readings.
number_bones = noodle_pug.drop_columns(cols=["Date", "Day_of_Week", "Weather_NYC"]).sum_by()

# Totals grouped by day of the week.
number_per_day = noodle_pug.drop_columns(cols=["Date", "Weather_NYC"]).sum_by(by=["Day_of_Week"])

# Totals grouped by NYC weather.
number_per_weather = noodle_pug.drop_columns(cols=["Date", "Day_of_Week"]).sum_by(by=["Weather_NYC"])

# Totals grouped by both day of the week and weather.
number_per_day_weather = noodle_pug.drop_columns(cols=["Date"]).sum_by(by=["Day_of_Week", "Weather_NYC"])
```

Extra credit: try using our Chart Builder feature to spin up some visualizations.

Interested in trying a predictor like Bones/No Bones?

If you want to create your own Bones/No Bones predictor or you'd like to leverage Deephaven for a similar project that incorporates batch data with real-time streams, access the Community project on GitHub here:

Access Deephaven Core Community on GitHub

Once you've forked the repo, you can set up your local environment in three CLI commands...