If you've ever used Prometheus, you know it's pretty great. It's free, open-source software for metric-based monitoring that lets users set up real-time alerts. Prometheus collects large volumes of system metrics, and you can pull this data out of Prometheus in several ways.

Using Prometheus's REST API, it's easy to look at historical data and see trends. Simply choose a time range and the interval at which to sample the data, then analyze the results to generate metrics such as maximum values and averages over that period.
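As a rough sketch of that historical workflow, the snippet below builds a request URL for Prometheus's `/api/v1/query_range` endpoint and computes the maximum and average of each returned series. The function names `build_range_url` and `summarize`, the `http://localhost:9090` address, and the sample values are assumptions made for illustration; only the endpoint, its `query`/`start`/`end`/`step` parameters, and the response shape come from Prometheus itself.

```python
from statistics import mean
from urllib.parse import urlencode


def build_range_url(base_url, query, start, end, step):
    """Build a URL for Prometheus's /api/v1/query_range endpoint."""
    params = urlencode({"query": query, "start": start, "end": end, "step": step})
    return f"{base_url}/api/v1/query_range?{params}"


def summarize(payload):
    """Return {(job, instance): (maximum, average)} for a range-query response."""
    summary = {}
    for series in payload["data"]["result"]:
        metric = series["metric"]
        # Each sample is a [unix_timestamp, "value-as-string"] pair.
        samples = [float(value) for _, value in series["values"]]
        key = (metric.get("job", ""), metric.get("instance", ""))
        summary[key] = (max(samples), mean(samples))
    return summary


# Payload in the shape Prometheus returns for a range query (values are made up).
sample = {
    "status": "success",
    "data": {
        "resultType": "matrix",
        "result": [
            {
                "metric": {"__name__": "up", "job": "prometheus", "instance": "localhost:9090"},
                "values": [[1700000000, "1"], [1700000015, "0"], [1700000030, "1"], [1700000045, "1"]],
            }
        ],
    },
}

# Assumed local Prometheus address; adjust to your own deployment.
url = build_range_url("http://localhost:9090", "up", 1700000000, 1700000045, "15s")
stats = summarize(sample)
```

In practice you would fetch `url` with an HTTP client and pass the decoded JSON to `summarize`; the sample payload stands in for that response here.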

But what if you wanted to ingest real-time data from Prometheus, and analyze and make decisions based on this data in real time? That's where Deephaven comes in!

Deephaven's comprehensive set of table operations, such as avg_by and tail, allows you to manipulate and view your data in real time. This means that you can answer questions such as "What's the average value of this Prometheus query over the last 15 seconds?" and "What is the maximum value recorded by Prometheus since we started tracking?" using Deephaven.

This blog post is part of a multi-part series on using Deephaven and Prometheus. Come back later for a follow-up on ingesting Prometheus's alert webhooks into Deephaven!

Real-time data ingestion

Deephaven's DynamicTableWriter class is one option for real-time data ingestion. You can use this class to create and update an append-only, real-time table, as shown in the code below.

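    import threading
    import time

    # DynamicTableWriter, dht (Deephaven's types module), BASE_URL, and
    # make_prometheus_request are assumed to be imported or defined earlier.
    PROMETHEUS_QUERIES = ["up", "go_memstats_alloc_bytes"]

    column_names = ["DateTime", "PrometheusQuery", "Job", "Instance", "Value"]
    column_types = [dht.datetime, dht.string, dht.string, dht.string, dht.double]

    # Create the writer and get the append-only table it feeds.
    table_writer = DynamicTableWriter(column_names, column_types)
    result_dynamic = table_writer.getTable()

    def thread_func():
        # Query Prometheus in a loop and append one row per returned sample.
        while True:
            for prometheus_query in PROMETHEUS_QUERIES:
                values = make_prometheus_request(prometheus_query, BASE_URL)
                for (date_time, job, instance, value) in values:
                    table_writer.logRow(date_time, prometheus_query, job, instance, value)
            # Pause for 2 seconds between polling rounds.
            time.sleep(2)

    # Run the polling loop on its own thread so it does not block the session.
    thread = threading.Thread(target=thread_func)
    thread.start()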

So what is this code doing with the DynamicTableWriter class? It starts by defining the column names and column types for the table, then uses the logRow() method to add rows to the table.

The thread_func function contains a loop that pulls data from Prometheus via the helper function make_prometheus_request and writes this data to the table. This gives you a steady stream of data flowing into your table!
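The helper make_prometheus_request is not shown in this post. One way it could look, as a hypothetical sketch rather than the actual implementation, is below: it calls Prometheus's `/api/v1/query` endpoint and flattens the instant-query response into `(date_time, job, instance, value)` tuples. The function `parse_instant_response`, the 5-second timeout, and the use of a Python `datetime` for the timestamp are assumptions; converting that timestamp to whatever time type your table column expects is left out.

```python
import json
from datetime import datetime, timezone
from urllib.parse import urlencode
from urllib.request import urlopen


def parse_instant_response(payload):
    """Turn an instant-query response into (date_time, job, instance, value) tuples."""
    rows = []
    for series in payload["data"]["result"]:
        metric = series["metric"]
        timestamp, value = series["value"]  # [unix_seconds, "value-as-string"]
        date_time = datetime.fromtimestamp(float(timestamp), tz=timezone.utc)
        rows.append((date_time, metric.get("job", ""), metric.get("instance", ""), float(value)))
    return rows


def make_prometheus_request(prometheus_query, base_url):
    """Hypothetical stand-in for the helper of the same name used above."""
    url = f"{base_url}/api/v1/query?{urlencode({'query': prometheus_query})}"
    with urlopen(url, timeout=5) as response:
        return parse_instant_response(json.load(response))


# Payload in the shape Prometheus returns for an instant query (values are made up).
sample_instant = {
    "status": "success",
    "data": {
        "resultType": "vector",
        "result": [
            {
                "metric": {"__name__": "up", "job": "prometheus", "instance": "localhost:9090"},
                "value": [1700000000, "1"],
            }
        ],
    },
}

rows = parse_instant_response(sample_instant)
```

Keeping the parsing separate from the HTTP call makes the response handling easy to check against a saved payload without a running Prometheus server.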

Now you can use Deephaven's table operations to analyze the real-time data.

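    # Group all rows for each Prometheus query into a single row per query.
    result_dynamic_update = result_dynamic.group_by(by = ["PrometheusQuery"])

    # Average Value per query; the non-numeric columns are dropped first.
    result_dynamic_average = result_dynamic.drop_columns(cols = ["DateTime", "Job", "Instance"]).avg_by(by = ["PrometheusQuery"])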

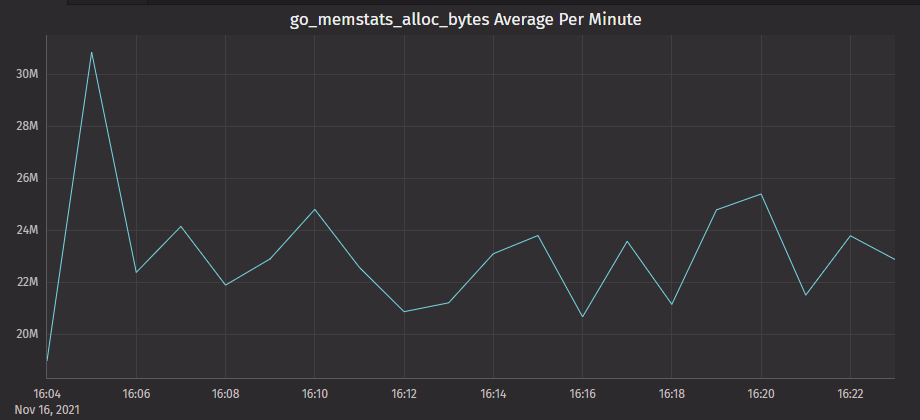

The new tables result_dynamic_update and result_dynamic_average update in real time as more data comes in, so you now have tables that contain real-time Prometheus data in Deephaven! Deephaven supports many more operations for grouping and aggregating data, so you can take the analysis further.
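To make concrete what these aggregations compute, here is a plain-Python model of a per-query average and of the "average over the last 15 seconds" question from earlier. It is an illustration of the semantics only, not how Deephaven implements them; the function names, the row layout of `(unix_seconds, PrometheusQuery, Value)`, and the sample numbers are all assumptions.

```python
from collections import defaultdict


def average_by_query(rows):
    """Average Value per PrometheusQuery, the grouping an avg_by table reports."""
    totals = defaultdict(lambda: [0.0, 0])
    for _, query, value in rows:
        totals[query][0] += value
        totals[query][1] += 1
    return {query: total / count for query, (total, count) in totals.items()}


def average_last_seconds(rows, query, now, window=15.0):
    """Average Value for one query over the trailing `window` seconds; None if empty."""
    recent = [value for ts, q, value in rows if q == query and now - window <= ts <= now]
    return sum(recent) / len(recent) if recent else None


# (unix_seconds, PrometheusQuery, Value) rows; the numbers are made up.
rows = [
    (100.0, "up", 1.0),
    (102.0, "up", 0.0),
    (110.0, "up", 1.0),
    (120.0, "up", 1.0),
    (120.0, "go_memstats_alloc_bytes", 2048.0),
]

overall = average_by_query(rows)
recent_up = average_last_seconds(rows, "up", now=120.0)
```

The difference from this model is that a Deephaven table keeps such results current as rows arrive, whereas the functions here must be called again on each new batch.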

Sample app

The example above is only a small illustration of what Deephaven can do with real-time data. If you're interested in seeing an example of real-time data ingestion into Deephaven using Prometheus's data, check out the Prometheus metrics sample app! This app demonstrates both real-time data ingestion and an equivalent example of ingesting static data. You can run this app to see the power of Deephaven's real-time data engine, and how real-time monitoring of data improves upon static data ingestion.

Anyone can run this project, so feel free to run it locally and modify the table operations to see what else you can accomplish with Deephaven!

A video demonstration can be found on our YouTube channel.