Crypto data is everywhere. As of March 2022, there are over 18,000 cryptocurrencies in existence. Like many other data sources, crypto data is typically stored in CSV format. Unfortunately, this format is outdated and wastes disk space. Enter Parquet, a lesser-known, column-oriented storage format that eliminates the need for bloated CSV files. Use Parquet instead of CSV and you'll have room for more data, which means more crypto at your fingertips.

Everywhere I look online, whether it's Kaggle, Nasdaq Data Link, or another publicly available source of historical crypto data, everything is presented in CSV format. That's nice for previewing the data, but I don't care much about that. Previews show a few rows of data and column names, and that's usually it. Why do these places not give me the option to download the data in Parquet?

I don't have the answer, but it feels like an oversight: Parquet files are much smaller, so they take less time to download. For now, I'll make do with what's available. With Deephaven, I can turn those bloated CSV files into Parquet with ease.

Get the data you want

I want data, and I want to lose the CSV bloat. I'll grab a CSV file from the internet and load the data into a Deephaven table.

Write the data to a CSV file

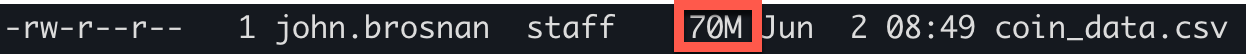

Let's start by writing the data to a CSV file and seeing how much disk space it takes up.

70 megabytes for a single file seems like a bit much.
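The post doesn't show how the 70 MB figure was measured. As a minimal sketch using only Python's standard library (not Deephaven), you can check a file's size on disk like this. The helper name `file_size_mb` is hypothetical, and it reports mebibytes (1024 × 1024 bytes), which may differ slightly from the unit behind the figures quoted here.

```python
import os


def file_size_mb(path: str) -> float:
    """Return the on-disk size of the file at `path` in mebibytes (1024 * 1024 bytes)."""
    return os.path.getsize(path) / (1024 * 1024)


# Usage, assuming the CSV was written to a hypothetical path "crypto.csv":
# print(f"{file_size_mb('crypto.csv'):.1f} MB")
```

Running the same helper on the Parquet output gives a like-for-like comparison, since both numbers come from the same measurement.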

Write the data to a Parquet file

Let's see how much space we can save by writing this data to a Parquet file instead.

The Parquet file takes up only 10 MB.

I could store seven copies of this Parquet file in the same disk space as the single CSV file. That leaves me with one last thing to do...
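The arithmetic behind that claim is simple: 70 MB ÷ 10 MB = 7, so the Parquet file uses about one seventh of the CSV's space, a saving of roughly 86%. Here is the same calculation as a small sketch; the function name `parquet_savings` is hypothetical.

```python
from __future__ import annotations


def parquet_savings(csv_mb: float, parquet_mb: float) -> tuple[int, float]:
    """Return how many whole Parquet copies fit in the CSV's footprint,
    and the percentage of space saved by switching formats."""
    copies = int(csv_mb // parquet_mb)
    saved_pct = (1 - parquet_mb / csv_mb) * 100
    return copies, saved_pct


copies, saved = parquet_savings(70, 10)
print(copies, f"{saved:.1f}%")  # 7 85.7%
```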

Get that bloated CSV file out of here! Speaking of which, I think I'm overdue to convert all of my locally stored CSV files to Parquet.
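Before deleting a CSV, it's worth confirming that the Parquet copy actually exists. Below is a minimal standard-library sketch of that check. `remove_csv_if_converted` is a hypothetical helper, and a non-empty Parquet file is only a rough sanity check, not proof the conversion succeeded; reading the file back with your Parquet reader is the stronger test.

```python
from pathlib import Path


def remove_csv_if_converted(csv_path: str, parquet_path: str) -> bool:
    """Delete `csv_path` only if `parquet_path` exists and is non-empty.

    Returns True if the CSV was deleted, False otherwise.
    """
    csv_file, parquet_file = Path(csv_path), Path(parquet_path)
    if not csv_file.is_file():
        return False  # nothing to delete
    if not parquet_file.is_file() or parquet_file.stat().st_size == 0:
        return False  # no usable Parquet copy yet, so keep the CSV
    csv_file.unlink()
    return True
```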

Store more data

That's really all there is to it. Do you have big, ugly CSV files you use to store your data? Turn them into Parquet in four lines of code. After that, remove the CSV and never look back. Use all of that extra space to store more crypto data than you thought possible.
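If you have a pile of local CSV files to convert, a first step is finding them and seeing how much space they occupy. This is a minimal standard-library sketch; `find_csv_files` is a hypothetical helper, the `*.csv` pattern is case-sensitive on most Linux filesystems, and the actual conversion to Parquet would still be done with Deephaven (or another Parquet writer) for each path it returns.

```python
from __future__ import annotations

from pathlib import Path


def find_csv_files(root: str) -> list[tuple[Path, int]]:
    """Recursively list CSV files under `root` with their sizes in bytes, largest first."""
    found = [(p, p.stat().st_size) for p in Path(root).rglob("*.csv") if p.is_file()]
    # Largest files first, since they are the most obvious candidates for conversion.
    found.sort(key=lambda item: item[1], reverse=True)
    return found
```

Looping over the result and printing each path next to its size gives a quick to-do list for the conversion.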