Facial recognition technology is a powerful tool - computer vision can identify a person simply by scanning their face. You've encountered this whenever your phone recognizes friends to help you organize your photos, or when you need a face scan to enter your top-secret laboratory. More seriously, facial recognition technology serves important purposes for law enforcement and surveillance experts. We'll leave that to the professionals. In this blog post, I implement facial recognition in real time on the movie Avengers: Endgame, using Deephaven's real-time analytic tools. I'll show you how to ingest the data into a platform where you can instantly manipulate, analyze, and learn from it.

Here's how we did it, in three easy steps:

- Set up a Kafka stream. We like Redpanda for this.

- Create Kafka topics.

- Run the script.

This is a fun example, but the workflow can be applied to many other computer vision cases. I'll walk you through setting up the facial recognition mechanism and importing image stream data into Deephaven through Redpanda. Stay tuned for other articles detailing the steps of analyzing image data in the Deephaven IDE.

Recognizing the Avengers

In this demo, I point the webcam at the movie Avengers: Endgame. As the movie plays, the characters are identified by the model and marked onscreen. (Yes, pointing Python at the movie file instead of pointing a webcam at the movie would work, but in this example we're mimicking real-time events - you could point a webcam at everyone entering an office building, for example, and stream the data as it comes in.)

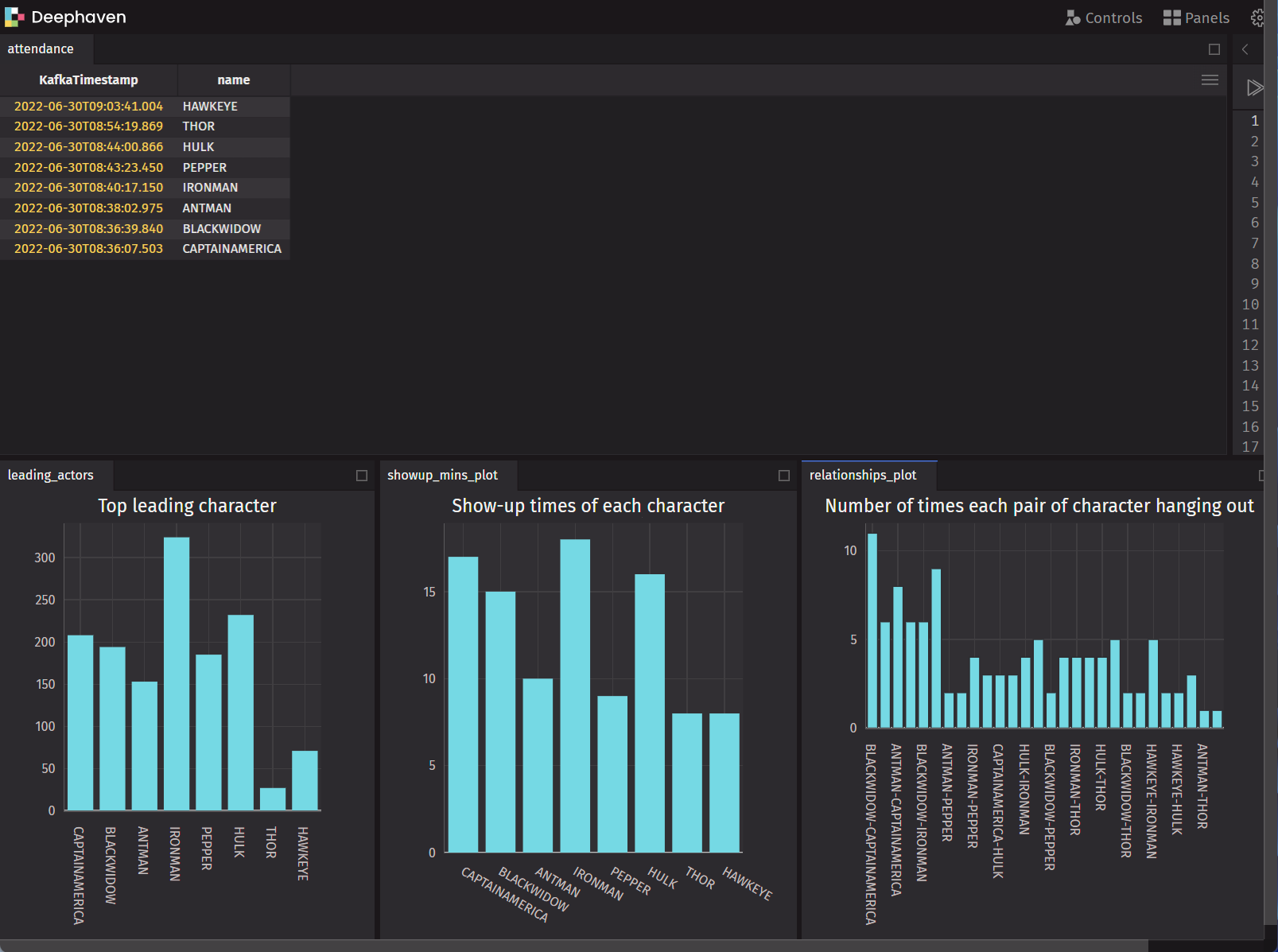

In my Deephaven session, on the top left, a streaming table records the first appearance of each character. On the bottom left, there are three graphs:

- Top leading character: compares the total appearance times of all the main characters.

- Show-up times of each character: shows the total appearance time (in minutes) for each character.

- Number of times each pair of characters hanging out: tracks which characters appear together more often than the others. Here, I'm basically tracking relationships between characters.

Below, I give the general details about how I accomplished this, with pointers for you to customize the code for your own purposes. You can also find my scripts in the deephaven-examples GitHub repository.

Computer vision and facial recognition

Now I'll walk you through the steps of setting up a program that detects characters from the movie, then outputs their names as well as the time they appear on the screen.

In order to train the face recognition model, we need to build a face database that contains the faces/images of all the characters we want to identify. There are so many ways to do it - here, we simply grab images of all characters from the internet.

Once the image database is prepared, we can use the face_recognition and OpenCV packages to develop the algorithm.

The sample code is shown below:

The call facesCurFrame = face_recognition.face_locations(imgS) runs on each image captured by the webcam. By default it uses a pretrained HOG (histogram of oriented gradients) detector, which measures gradient orientation in localized portions of an image to detect the shape of a face.

The next step is to encode each detected face, translating it into numbers that a computer can compare. A pretrained deep learning model generates 128 measurements for every face; face_recognition.face_encodings() does all the heavy lifting.
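The detection and encoding steps described above can be sketched roughly as follows. This is a minimal illustration, not the exact script from the repository: the function names `detect_and_encode` and `scale_box` and the `shrink` factor of 0.25 are assumptions made for the example, while `face_locations` and `face_encodings` are the library calls named in the text.

```python
def scale_box(box, factor):
    """Scale a (top, right, bottom, left) face box by `factor`, rounding to whole pixels."""
    return tuple(int(round(v * factor)) for v in box)


def detect_and_encode(frame_bgr, shrink=0.25):
    """Return (boxes, encodings) for every face found in one BGR video frame.

    `shrink` is a hypothetical tuning knob: smaller frames are faster to scan.
    """
    # Imported lazily so scale_box stays usable without the heavy dependencies.
    import cv2
    import face_recognition

    # Shrink the frame, then convert OpenCV's BGR channel order to RGB.
    small = cv2.resize(frame_bgr, (0, 0), fx=shrink, fy=shrink)
    rgb_small = cv2.cvtColor(small, cv2.COLOR_BGR2RGB)

    # HOG detection is the library default; each box is (top, right, bottom, left).
    boxes = face_recognition.face_locations(rgb_small)
    # One 128-value encoding per detected box.
    encodings = face_recognition.face_encodings(rgb_small, boxes)

    # Map the boxes back to full-frame coordinates for drawing labels onscreen.
    return [scale_box(box, 1 / shrink) for box in boxes], encodings
```

A frame read with `cv2.VideoCapture(0).read()` can be passed straight to `detect_and_encode`; the returned boxes are in the coordinates of the original frame.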

Once a face has been encoded, we need to find the most similar one in our database. We use cosine distance to compare each face from the webcam (or movie!) with the faces in our database, then output the name of the face with the lowest distance. By the end, we have a data stream containing the name of each character and the time of their first appearance.
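As a rough sketch of the matching step, the snippet below computes cosine distance in plain Python and picks the closest known face. The names `cosine_distance` and `best_match`, and the `threshold` cutoff for rejecting unknown faces, are assumptions for illustration and are not taken from the original script. The toy vectors have three values instead of 128 to keep the example readable.

```python
import math


def cosine_distance(a, b):
    """Return 1 - cosine similarity of two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def best_match(encoding, known_faces, threshold=0.1):
    """Return (name, distance) for the closest known face.

    `known_faces` maps a character name to a reference encoding.
    `threshold` is a hypothetical cutoff: beyond it the name is None.
    """
    name, distance = min(
        ((n, cosine_distance(encoding, e)) for n, e in known_faces.items()),
        key=lambda pair: pair[1],
    )
    return (name if distance <= threshold else None), distance


# Toy database with 3-value encodings (real encodings have 128 values).
known = {"Thor": [1.0, 0.0, 0.0], "Hulk": [0.0, 1.0, 0.0]}
name, distance = best_match([0.9, 0.1, 0.0], known)
print(name, round(distance, 4))
```

Without some cutoff, every detected face would be assigned to whichever character happens to be closest, so extras in the background would be mislabeled as main characters.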

Set up a Kafka stream using Redpanda

We need to store the real-time data so that it can be accessed easily, and we need our tables to auto-refresh as new data comes in. Kafka meets both needs. The Kafka stream works like a bridge for us to produce and consume real-time data. Kafka can store different data in separate topics; we only use two for the movie data, but this becomes much more useful when you require several. With topic names and key values, we can easily publish and consume the data in real time. Redpanda, a Kafka-compatible streaming platform, combined with Deephaven is a powerful tool. (To learn more, check out our full how-to guide.)

To start the server, run this code:

This builds the containers for Redpanda and Deephaven. To access the stream and use Deephaven's analytic tools, navigate to http://localhost:10000/ide.

Create topics

After the server is up, we need to create topics for data to be produced and consumed. Anything will work, but we've chosen "character_attendance" and "character_relation". Run:

To check the existing topics, run:

Run the face recognition script

Now that you know how the facial recognition model works, here is the full script to try on your own.

To run the script, first install kafka-python, face_recognition, and opencv-python on your local machine with a simple pip install:

Then:

producer = KafkaProducer(bootstrap_servers=["localhost:9092"], value_serializer=json_serializer) builds a connection with the local server, so that the data is stored in Redpanda and can be accessed later on.

producer.send(topic_name, json_dic) sends each record to the server once the data stream has been generated.
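To make the producer side concrete, here is a minimal sketch of how these two calls could fit together. The `KafkaProducer` arguments and `producer.send` come from the text above; the helpers `make_producer` and `publish_first_appearances`, and the record fields `name` and `time`, are hypothetical names chosen for this example rather than the ones in the full script.

```python
import json
from datetime import datetime, timezone


def json_serializer(data):
    """Encode a dict as UTF-8 JSON bytes for use as a Kafka message value."""
    return json.dumps(data).encode("utf-8")


def make_producer(server="localhost:9092"):
    """Create a kafka-python producer; needs a broker listening on `server`."""
    from kafka import KafkaProducer  # imported lazily: only needed when publishing

    return KafkaProducer(bootstrap_servers=[server], value_serializer=json_serializer)


def publish_first_appearances(producer, topic_name, names, seen):
    """Send one record per character the first time their name shows up.

    `seen` is a set the caller keeps across frames. The field names in
    `json_dic` are assumptions made for this sketch.
    """
    sent = []
    for name in names:
        if name in seen:
            continue
        seen.add(name)
        json_dic = {"name": name, "time": datetime.now(timezone.utc).isoformat()}
        producer.send(topic_name, json_dic)
        sent.append(json_dic)
    return sent
```

In a frame loop, you would create the producer once with `make_producer()`, keep a single `seen = set()`, and call `publish_first_appearances(producer, "character_attendance", names_in_frame, seen)` for each frame. Calling `producer.flush()` before exiting makes sure buffered records reach the broker.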

Try it out

If you use this project and blog post as a baseline for working with Deephaven, we'd love to hear about it. Let us know what you come up with in our GitHub Discussions or our Slack community.