Importing CSV Files

Deephaven provides tools for inferring a table schema from sample data and importing CSV files.

Quickstart

Here is an example of how to generate a schema from a CSV data file, deploy it, and import a file from the command line. These commands assume a typical Deephaven installation and a sample file located at /data/sample.csv.

Generate a schema from a sample CSV data file

This creates CSVExampleNamespace.CSVExampleTableName.schema in a folder named "CSVExampleNamespace".

Deploy the schema

If the schema is in a different location, update the directory accordingly.

Import a single data file into the specified Intraday partition

This example will generate a table schema from sample.csv with the namespace "CSVExampleNamespace" and table name "CSVExampleTableName". The default behavior creates a directory for the namespace in the current directory and places the output schema file inside this directory. After inspection of the generated schema, it may be deployed. You might want to move this schema file to a standard location for reference. Once deployed, CSV files matching the structure of sample.csv can be imported.

Schema Inference

CSV schema inference generates a table schema from a sample CSV file. A table column is generated for each column in the given sample file, and the process attempts to guess the type for each column from the provided data. Since CSV files provide no metadata, this inference process is necessarily imperfect, and generated schemas should always be inspected before deployment.

Command Reference

The following arguments are available when running the CSV schema creator:

| Argument | Description |

|---|---|

-ns or --namespace <namespace> | (Required) The namespace to use for the new schema. |

-tn or --tableName <name> | (Required) The table name to use for the new schema. |

-sp or --schemaPath <path> | An optional path to which the schema file will be written. If not specified, this will default to the current working directory and will create or use a subdirectory that matches the namespace. |

-sf or --sourceFile <file name or file path and name> | The name of the CSV file to read (required). This file must have a header row with column names. |

-fd or --delimiter <delimiter character> | Field delimiter (optional). Allows specification of a character other than the file format default as the field delimiter. If delimiter is specified, fileFormat is ignored. This must be a single character. |

-ff or --fileFormat <format name> | (Optional) The Apache commons CSV parser is used to parse the file itself. Five common formats are supported:

|

-pc or --partitionColumn | Optional name for the partitioning column if schema is being generated. If this is not provided, the importer will default to "Date" for the partitioning column name. Any existing column from the source that matches the name of the partitioning column will be renamed to "source_[original column name]". |

-gc or --groupingColumn | Optional column name that should be marked as columnType="Grouping" in the schema. If multiple grouping columns are needed, the generated schema should be manually edited to add the Grouping designation to the additional columns. |

-spc or --sourcePartitionColumn | Optional column to use for multi-partition imports. For example if the partitioning column is "Date" and you want to enable multi-partition imports based on the column "source_date", specify "source_date" with this option (this is the column name in the data source, not the Deephaven column name). |

-sn or --sourceName <name for ImportSource block> | Optional name to use for the generated ImportSource block in the schema. If not provided, the default of "IrisCSV" will be used. |

-sl or --skipHeaderLines <integer value> | Optional number of lines to skip from the beginning of the file before expecting the header row. If not provided, the first line is used as the header row. |

-fl or --setSkipFooterLines <integer value> | Optional number of footer lines to skip from the end of the file. |

-lp or --logProgress | If present, additional informational logging will be provided with progress updates during the parsing process. |

-bf or --bestFit | If present, the class will attempt to use the smallest numeric types to fit the data in the CSV. For example, for integer values, short will be used if all values are in the range of -32768 to 32767. If larger values are seen, the class will then move to int and eventually long. The default behavior, without -bf, is to use long for all integer columns and double for all floating point columns. |

-tr or --trim | Similar to the TRIM file format, but adds leading/trailing whitespace trimming to any format. So, for a comma-delimited file with extra whitespace, -ff TRIM would be sufficient, but for a file using something other than a comma as its delimiter, the -tr option would be used in addition to -ff or -fd. |

-om or --outputMode | Either SAFE (default) or REPLACE. When SAFE, the schema creator will exit with an error if an existing file would be overwritten by the schema being generated. When set to REPLACE, a pre-existing file will be overwritten. |

Import Query

A persistent query of type "Import - CSV" is used to import data from a CSV file into an intraday partition. The CSV file must be available on the server on which the query will run; it is not for loading a file on the user's system into Deephaven.

Note

The "Import-CSV" option is only available in Deephaven Classic.

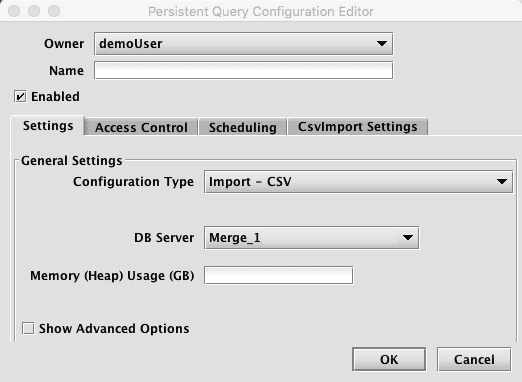

When Import - CSV is selected, the Persistent Query Configuration Editor window shows the following options:

-

To proceed with creating a query to import a CSV file, you will need to select a DB Server and enter the desired value for Memory (Heap) Usage (GB).

-

Options available in the Show Advanced Options section of the panel are typically not used when importing or merging data. To learn more about this section, please refer to the Persistent Query Configuration Viewer/Editor.

-

The Access Control tab presents a panel with the same options as all other configuration types, and gives the query owner the ability to authorize Admin and Viewer Groups for this query. For more information, please refer to Access Control.

-

Clicking the Scheduling tab presents a panel with the same scheduling options as all other configuration types. For more information, please refer to Scheduling.

-

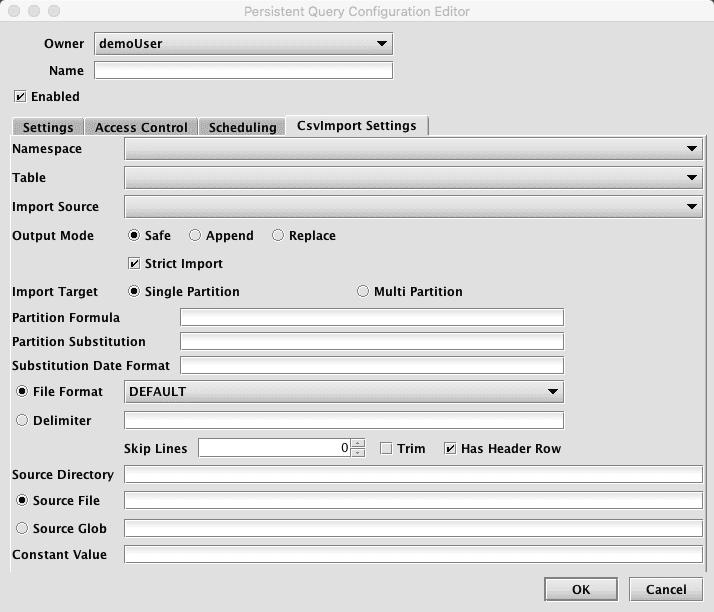

Clicking the CsvImport Settings tab presents a panel with the options pertaining to importing a CSV file:

CsvImport Settings

- Namespace: This is the namespace into which you want to import the file.

- Table: This is the table into which you want to import the data.

- Output Mode: This determines what happens if data is found in the fully-specified partition for the data. The fully-specified partition includes both the internal partition (unique for the import job) and the column partition (usually the date).

- Safe - if existing data is found in the fully-specified partition, the import job will fail.

- Append - if existing data is found in the fully-specified partition, data will be appended to it.

- Replace - if existing data is found in the fully-specified partition, it will be replaced. This does not replace all data for a column partition value, just the data in the fully-specified partition. Import Source: This is the import source section of the associated schema file that specifies how source data columns will be set as Deephaven columns.

- Strict Import will fail if a file being imported has missing column values (nulls), unless those columns allow or replace the null values using a default attribute in the

ImportColumndefinition.

- Single/Multi Partition: This controls the import mode. In single-partition, all of the data is imported into a single Intraday partition. In multi-partition mode, you must specify a column in the source data that will control to which partition each row is imported.

- Single-partition configuration:

- Partition Formula: This is the formula needed to partition the CSV being imported. If a specific partition value is used it will need to be surrounded by quotes. For example:

currentDateNy()"2017-01-01" - Partition Substitution: This is a token used to substitute the determined column partition value in the source directory, source file, or source glob, to allow the dynamic determination of these fields. For example, if the partition substitution is "PARTITION_SUB", and the source directory includes "PARTITION_SUB" in its value, that PARTITION_SUB will be replaced with the partition value determined from the partition formula.

- Substitution Date Format: This is the date format that will be used when a Partition Substitution is used. The standard Deephaven date partition format is

yyyy-MM-dd(e.g., 2018-05-30), but this allows substitution in another format. For example, if the filename includes the date inyyyyddMMformat instead (e.g., 20183005), that could be used in the Date Substitution Format field. All the patterns from the JavaDateTimeFormatterclass are allowed.

- Partition Formula: This is the formula needed to partition the CSV being imported. If a specific partition value is used it will need to be surrounded by quotes. For example:

- Multi-partition configuration:

- Import Partition Column: This is the name of the database column used to choose the target partition for each row (typically "Date"). There must be an corresponding Import Column present in the schema, which will indicate how to get this value from the source data.

- Single-partition configuration:

- File Format: This is the format of the data in the CSV file being imported. Options include

DEFAULT,TRIM,EXCEL,TDF,MYSQL,RFC4180, andBPIPE*. - Delimiter: This can be used to specify a custom delimiter character if something other than a comma is used in the file.

- Source Directory: This is the path to where the CSV file is stored on the server on which the query will run.

- Source File: This the name of the CSV file to import.

- Source Glob: This is an expression used to match multiple CSV file names.

- Constant Value: A String of data to make available as a pseudo-column to fields using the CONSTANT sourceType.

* Note: BPIPE is the format used for Bloomberg's Data License product.

Importing Using Builder

CSV imports may be performed from inside a Deephaven Groovy or Python script. Compared to other methods, this permits more elaborate logic with respect to existing data. These scripts may be executed as a persistent query or from the command line using the iris_exec run_local_script tool. All imports should be performed as the dbmerge user (or from a persistent query, running on a merge server).

Example

The following script imports a single CSV file to a specified partition:

Import API Reference

The CSV import class provides a static builder method, which produces an object used to set parameters for the import. The builder returns an import object from the build() method. Imports are executed via the run() method and if successful, return the number of rows imported. All other parameters and options for the import are configured via the setter methods described below. The general pattern when scripting an import is:

CSV Import Options

| Setter Method | Type | Req? | Default | Description |

|---|---|---|---|---|

setDestinationDirectory | File | String | No* | N/A | The destination directory. e.g., /tmp/mylocation. |

setOutputMode | ImportOutputMode | String | No | SAFE | Enumeration with the following options: ImportOutputMode.SAFE | ImportOutputMode.REPLACE | ImportOutputMode.APPEND. May also be specified as String (e.g.,"SAFE"/"REPLACE"/"APPEND"). |

setStrict | boolean | No | true | If true, will allow target columns that are missing from the source to remain null, and will allow import to continue when data conversions fail. In most cases, this should be set to false only when developing the import process for a new data source. |

setPartitionColumn | String | No | N/A | Column name to use to choose which partition to import each source row. |

setSourceName | String | No | N/A | Specific ImportSource to use (from the table schema). If not specified, the importer will use the first ImportSource block that it finds that matches the type of the import (CSV/XML/JSON/JDBC). |

setSourceDirectory | String | No* | N/A | Directory from which to read source file(s). |

setSourceFile | String | No* | N/A | Source file name (either full path on server filesystem or relative to specified source directory). |

setSourceGlob | String | No* | N/A | Source file(s) wildcard expression. |

setFileFormat | String | No | DEFAULT | The Apache commons CSV parser is used to parse the file itself. Five common formats are supported:

|

setDelimiter | char | No | , | Allows specification of a character other than the file format default as the field delimiter. If delimiter is specified, fileFormat is ignored. This must be a single character. |

setSkipLines | int | No | 0 | Number of lines to skip before beginning parse (before header line, if any). |

setTrim | boolean | No | false | Whether to trim whitespace from field values. |

setConstantColumnValue | String | No | N/A | A String to materialize as the source column when an ImportColumn is defined with a sourceType of CONSTANT. |

* The sourceDirectory parameter will be used in conjunction with sourceFile or sourceGlob. If sourceDirectory is not provided, but sourceFile is, then sourceFile will be used as a fully qualified file name. If sourceDirectory is not provided, but sourceGlob is, then sourceDirectory will default to the configured log file directory from the prop file being used.

Import from Command Line

CSV imports can be performed directly from the command line, using the iris_exec tool.

Command Reference

CSV Import Arguments

The CSV importer takes the following arguments:

| Argument | Description |

|---|---|

| Either a destination directory, specific partition, or internal partition plus a partition column must be provided. A directory can be used to write a new set of table files to specific location on disk, where they can later be read with TableTools. A destination partition is used to write to intraday locations for existing tables. The internal partition value is used to separate data on disk; it does not need to be unique for a table. The name of the import server is a common value for this. The partitioning value is a string data value used to populate the partitioning column in the table during the import. This value must be unique within the table. In summary, there are three ways to specify destination table partition(s):

|

-ns or --namespace <namespace> | (Required) Namespace in which to find the target table. |

-tn or --tableName <name> | (Required) Name of the target table. |

-fd or --delimiter <delimiter character> | Field Delimiter (Optional). Allows specification of a character other than the file format default as the field delimiter. If delimiter is specified, fileFormat is ignored. This must be a single character. |

-ff or --fileFormat <format name> | (Optional) The Apache commons CSV parser is used to parse the file itself. Five common formats are supported:

|

-om or --outputMode <import behavior> | (Optional):

|

-rc or --relaxedChecking <TRUE or FALSE> | (Optional) Defaults to FALSE. If TRUE, will allow target columns that are missing from the source CSV to remain null, and will allow import to continue when data conversions fail. In most cases, this should be set to TRUE only when developing the import process for a new data source. |

| sourceDirectory, sourceFile, and sourceGlob are all optional. If none of these are provided, the system will attempt to do a multi-file import. Otherwise, sourceDirectory will be used in conjunction with sourceFile or sourceGlob. If sourceDirectory is not provided, but sourceFile is, then sourceFile will be used as a fully qualified file name. If sourceDirectory is not provided, but sourceGlob is, then sourceDirectory will default to the configured log file directory from the prop file being used. |

-sn or --sourceName <ImportSource name> | Specific ImportSource to use. If not specified, the importer will use the first ImportSource block that it finds that matches the type of the import (CSV/XML/JSON/JDBC). |

-tr | Similar to the TRIM file format, but adds leading/trailing whitespace trimming to any format. So, for a comma-delimited file with extra whitespace, -ff TRIM would be sufficient, but for a file using something other than a comma as its delimiter, the -tr option would be used in addition to -ff or -fd. |

-cv or --constantColumnValue <constant column value> | A literal value to use for the import column with sourceType="CONSTANT", if the destination schema requires it. |

One special case when importing CSV data is columns in the target table with a type of Boolean.

- By default, the CSV importer will attempt to interpret string data from the source table with 0, F, f, or any case of

false, being written as a Booleanfalse. - Similarly, 1, T, t, or any case of

truewould be a Booleantrue. - Any other value would result in a conversion failure that may be continuable, if, for instance, a default is set for the column.

- To convert from other representations of true/false, for example from foreign language strings, a formula or transform would be needed, along with a

sourceType="String"to ensure the reading of the data from the CSV field is handled correctly.