How to configure and use a Development Persistent Query-based DIS Instance

Background

Historically, development and testing of new schemata and logging processes in Deephaven had to occur in a separate development environment to avoid the disruption of restarting a production Data Import Server (DIS) during business hours. With the addition of the in-Worker DIS process to Deephaven v1.20181212, it is now possible to run multiple DIS instances that can be restarted or otherwise reconfigured without impacting production data handling.

Prerequisites

- Deephaven v1.20190607 or later release installed.

- Installation configured to use the Data Routing Service (YAML-based configuration). See Data Routing Service Configuration via YAML.

- Entries defined in the Routing Service YAML file for one or more in-Worker DIS processes:

  - Storage

  - DIS Instance

  - Table Data Services

  - Filters to ensure development schemata are handled exclusively by the in-Worker DIS. The easiest way to configure this is to have one or more development namespaces that are routed to the in-Worker DIS, and then move the related tables to a production namespace when development is complete. See Data Filters.

Some of the elements above are listed as prerequisites so they can be set ahead of time without requiring changes to the Data Routing Service YAML file during development work.

Example

The snippets from a Routing Service YAML file (shown below) define two entries in the dataImportServers section: db_dis, the default production DIS (running as a monit-controlled process), and test_LastBy, an in-Worker DIS. As its name implies, test_LastBy can also provide Import-driven lastBy processing.

The filters used in both the DIS and TDCP (Table Data Cache Proxy) configuration sections split System (non-user) namespaces into DXFeed (processed by test_LastBy) and everything else (processed by db_dis). For querying, most data is routed through the TDCP, but the DXFeed development namespace is routed directly to the test_LastBy DIS. Working directly with the DIS sacrifices some caching performance for DXFeed queries, but allows the schema to be updated and data to be deleted and replaced without requiring a TDCP restart.
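The routing split described above can be pictured with a small model. This is a minimal Python sketch, not Deephaven code or routing configuration: the function names dis_for_write and source_for_query and the "tdcp" label are hypothetical, and the logic assumes only what the text states, namely that System namespaces are divided between DXFeed (test_LastBy) and everything else (db_dis), with DXFeed queries bypassing the TDCP.

```python
from typing import Optional

# Development namespaces routed to the in-Worker DIS (from the example in the text).
DEV_NAMESPACES = {"DXFeed"}


def dis_for_write(namespace_set: str, namespace: str) -> Optional[str]:
    """Return the DIS that would accept tailer data for a table."""
    if namespace_set != "System":
        return None  # user namespaces are outside the filters modeled here
    return "test_LastBy" if namespace in DEV_NAMESPACES else "db_dis"


def source_for_query(namespace_set: str, namespace: str) -> Optional[str]:
    """Return the service an intraday query would read from."""
    if namespace_set != "System":
        return None
    # DXFeed is read directly from the in-Worker DIS; everything else goes through the TDCP.
    return "test_LastBy" if namespace in DEV_NAMESPACES else "tdcp"
```

Adding another development namespace to DEV_NAMESPACES in this model corresponds to routing one more namespace to the in-Worker DIS; the production path is unchanged.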

Use

Once this routing is set up, tailers writing to DXFeed tables will send their data to the test_LastBy DIS instance, and intraday queries for DXFeed data will also receive that data from test_LastBy. As such, DXFeed schemata, loggers, and listeners can be modified mid-day, and the test_LastBy persistent query can be restarted to pick up the changes without impacting the other namespaces, which are handled by the default db_dis DIS instance.

The script below can be used in a persistent query or console query to run the test_LastBy DIS process:

The bottom part of this script creates an Import-driven lastBy table called DXQuoteLastByTable. If the script runs in a persistent query, other users who have access rights for the query can read that table. The lastBy table portion of the script is not required to enable the DIS process; once dis.start() is executed, the DIS process begins accepting and processing tailer data streams for tables in the DXFeed namespace.
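For readers unfamiliar with lastBy semantics, the idea is that only the most recent row for each key is retained as data arrives. The following is a plain Python illustration of that concept under assumed inputs, not the Deephaven API: the function last_by_symbol and the (symbol, bid, ask) row layout are hypothetical and do not reflect the actual DXFeed schema.

```python
from typing import Dict, Iterable, Tuple

Row = Tuple[str, float, float]  # (symbol, bid, ask): hypothetical quote columns


def last_by_symbol(rows: Iterable[Row]) -> Dict[str, Row]:
    """Keep only the latest row seen for each symbol, in arrival order."""
    latest: Dict[str, Row] = {}
    for row in rows:
        latest[row[0]] = row  # a later row for the same symbol replaces the earlier one
    return latest


quotes = [
    ("AAPL", 190.10, 190.12),
    ("MSFT", 410.00, 410.05),
    ("AAPL", 190.11, 190.13),
]
snapshot = last_by_symbol(quotes)
```

Here snapshot holds one row per symbol, with the second AAPL quote replacing the first.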

The Data Services persistent query type (available in Deephaven v1.20190322 or higher) simplifies configuration of an in-Worker DIS process by setting up much of the prerequisite script code for the user. For the same configuration as shown above, a Data Services query would require only this script:

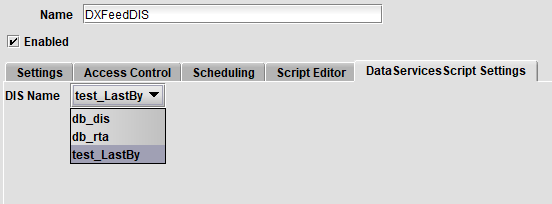

The rest of the work (importing classes, then configuring and starting the DIS process) is handled by the built-in setup code of the Data Services script type itself. The one other piece of configuration required with this script type is to select the DIS process on the DataServiceScript Settings tab: