Set up a replayer

One of the first problems most Deephaven users need to solve is “Where is my data?” There may already be jobs that ingest data to populate tables, or you may want to experiment with data from a new source that has not yet been integrated with your system.

Additionally, you may want to replay a previous day’s data so that you can:

- Test changes to a script against known data.

- Troubleshoot issues that a query had on a previous day’s data.

- Simulate data ticking into a table for the current date using previous data.

What is a Replay Query?

A Replay Query replays historical data for a specific table as intraday ticking data. It releases rows to the query at the times they originally appeared, based on their Timestamp column. This way, you can take an existing query that expects to operate on intraday data and run it against merged historical data.

With a small variation and some additional scaffolding, you can also replay that historical data into another table, replacing the original timestamps with current-day timestamps.

At its core, a Replay Query redirects the db.i() command so that it loads the historical version of the table (the one returned by db.t()) instead. It then applies a special where() clause that releases rows based on their configured timestamp column.
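The release rule can be modeled in a few lines of plain Java. This is a conceptual sketch only, not Deephaven's implementation. The `Row` record and the `ReplayFilter` class are hypothetical, and the sample rows are made up. A row becomes visible once the simulated clock reaches the value in its timestamp column.

```java
import java.time.Instant;
import java.util.ArrayList;
import java.util.List;

/**
 * Conceptual model of replay: a row is released only once the simulated
 * clock has reached its timestamp. Hypothetical names; not Deephaven code.
 */
final class ReplayFilter {
    record Row(Instant timestamp, String label) {}

    /** Returns the rows whose timestamp is at or before the simulated clock. */
    static List<Row> visibleRows(List<Row> historical, Instant simulatedNow) {
        List<Row> visible = new ArrayList<>();
        for (Row r : historical) {
            if (!r.timestamp().isAfter(simulatedNow)) {
                visible.add(r);
            }
        }
        return visible;
    }

    public static void main(String[] args) {
        List<Row> day = List.of(
            new Row(Instant.parse("2020-01-15T14:30:00Z"), "a"),
            new Row(Instant.parse("2020-01-15T14:30:05Z"), "b"),
            new Row(Instant.parse("2020-01-15T15:00:00Z"), "c"));
        // As the simulated clock advances, more rows "tick" into view.
        System.out.println(visibleRows(day, Instant.parse("2020-01-15T14:30:02Z")).size()); // 1
        System.out.println(visibleRows(day, Instant.parse("2020-01-15T14:45:00Z")).size()); // 2
    }
}
```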

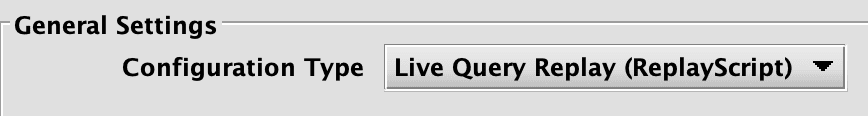

To use this, create a new query, set its type to “Live Query Replay”, and put the code you want to test into the Script Editor.

Caution

Note that the “Live Query Replay” type can currently be configured only through the Swing UI.

The next step is to configure the replay settings. There are three parameters:

- “Sorted Replay”

- “Replay Time of Day”

- “Replay Date”

Sorted Replay

The Sorted Replay checkbox guarantees that data is replayed in Timestamp order, that is, with the table's rows sorted by the configured timestamp column.
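Conceptually, sorted replay means ordering rows by their timestamp column before releasing them. The plain-Java sketch below illustrates only that idea and is not how Deephaven implements the option. `SortedReplay` and `Row` are hypothetical names. The tie-breaking behavior noted in the comment is a property of this sketch.

```java
import java.time.Instant;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;

/**
 * Conceptual model of "Sorted Replay": order rows by their timestamp column
 * so they are released chronologically. Hypothetical names; not Deephaven code.
 */
final class SortedReplay {
    record Row(Instant timestamp, String label) {}

    static List<Row> replayOrder(List<Row> tableOrder) {
        // Stream.sorted is stable for ordered streams, so rows that share a
        // timestamp keep their original relative order in this sketch.
        return tableOrder.stream()
                .sorted(Comparator.comparing(Row::timestamp))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Row> stored = List.of(
            new Row(Instant.parse("2020-01-15T14:30:05Z"), "second"),
            new Row(Instant.parse("2020-01-15T14:30:00Z"), "first"),
            new Row(Instant.parse("2020-01-15T14:31:00Z"), "third"));
        replayOrder(stored).forEach(r -> System.out.println(r.label()));
        // prints first, second, third (one per line)
    }
}
```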

Replay Time of Day

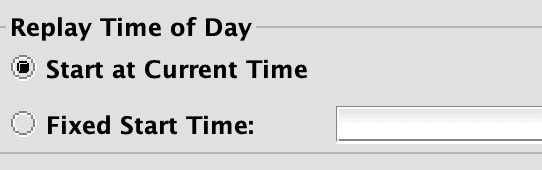

The Replay Time of Day setting provides two options that control the time of day at which the script begins:

- Start at Current Time - The query is executed using the current time of day.

- Fixed Start Time - The query is executed using the value entered as the current time of day.

A fixed start time is useful when you need to replay data that originally streamed in a different region (for example, Hong Kong) from the time zone you are testing in. To set a specific start time, enter it in the format HH:MM:SS.

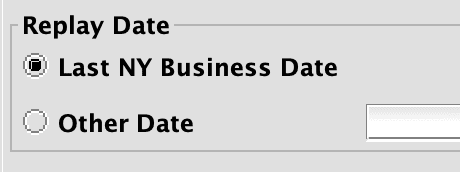
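The sketch below shows one way to derive a replay clock from the two Replay Time of Day options. It assumes that simulated time starts at the configured date and time of day and then advances at wall-clock speed. `ReplayClock` is a hypothetical class, not part of Deephaven.

```java
import java.time.Duration;
import java.time.Instant;
import java.time.LocalDate;
import java.time.LocalTime;
import java.time.ZoneId;
import java.time.ZonedDateTime;

/**
 * Hypothetical replay clock. Assumption: simulated time starts at the
 * configured date and time of day, then advances at wall-clock speed.
 */
final class ReplayClock {
    private final ZonedDateTime simulatedStart; // replay date + start time of day
    private final Instant wallStart;            // real time when the query started

    private ReplayClock(ZonedDateTime simulatedStart, Instant wallStart) {
        this.simulatedStart = simulatedStart;
        this.wallStart = wallStart;
    }

    /** "Fixed Start Time": begin at the given HH:MM:SS on the replay date. */
    static ReplayClock fixedStart(LocalDate replayDate, String hhmmss, ZoneId zone, Instant wallStart) {
        LocalTime start = LocalTime.parse(hhmmss); // ISO format, e.g. "09:30:00"
        return new ReplayClock(ZonedDateTime.of(replayDate, start, zone), wallStart);
    }

    /** "Start at Current Time": reuse the wall clock's time of day on the replay date. */
    static ReplayClock currentTime(LocalDate replayDate, ZoneId zone, Instant wallStart) {
        LocalTime timeOfDay = wallStart.atZone(zone).toLocalTime();
        return new ReplayClock(ZonedDateTime.of(replayDate, timeOfDay, zone), wallStart);
    }

    /** Simulated "now": the simulated start plus real time elapsed since the query started. */
    ZonedDateTime now(Instant wallNow) {
        return simulatedStart.plus(Duration.between(wallStart, wallNow));
    }

    public static void main(String[] args) {
        ZoneId hongKong = ZoneId.of("Asia/Hong_Kong");
        Instant started = Instant.parse("2024-05-01T13:00:00Z");
        // Replay from 09:30:00 Hong Kong time, whatever the tester's own time zone is.
        ReplayClock clock = fixedStart(LocalDate.of(2020, 1, 15), "09:30:00", hongKong, started);
        System.out.println(clock.now(started.plusSeconds(90))); // 2020-01-15T09:31:30+08:00[Asia/Hong_Kong]
    }
}
```

Anchoring the start time to an explicit `ZoneId` is what lets a Hong Kong session be replayed correctly from a machine in another region.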

Replay Date

The Replay Date option controls the date returned by DQL’s date methods, such as currentDateNy() and lastBusinessDateNy().
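To illustrate why this matters, the plain-Java analogue below makes "current date" helpers read from a configured replay date instead of the system clock. `ReplayDates` and its methods are hypothetical stand-ins for the DQL methods. The sketch ignores holidays, whereas a real business calendar does not.

```java
import java.time.DayOfWeek;
import java.time.LocalDate;

/**
 * Hypothetical analogue of date methods driven by a replay date.
 * Simplification: only weekends are skipped; holidays are ignored.
 */
final class ReplayDates {
    private final LocalDate replayDate;

    ReplayDates(LocalDate replayDate) {
        this.replayDate = replayDate;
    }

    /** Like currentDateNy() in spirit: returns the replay date, not today's date. */
    String currentDate() {
        return replayDate.toString(); // ISO yyyy-MM-dd
    }

    /** Like lastBusinessDateNy() in spirit: the weekday before the replay date. */
    String lastBusinessDate() {
        LocalDate d = replayDate.minusDays(1);
        while (d.getDayOfWeek() == DayOfWeek.SATURDAY || d.getDayOfWeek() == DayOfWeek.SUNDAY) {
            d = d.minusDays(1);
        }
        return d.toString();
    }

    public static void main(String[] args) {
        ReplayDates dates = new ReplayDates(LocalDate.of(2020, 1, 13)); // a Monday
        System.out.println(dates.currentDate());      // 2020-01-13
        System.out.println(dates.lastBusinessDate()); // 2020-01-10 (the previous Friday)
    }
}
```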

Replaying Historical Data

The simplest use case for a Replay Query is to replay a historical data set directly to a query script. If your script already uses DQL’s date methods, you do not need any additional work to select the appropriate date partition for replay. If it does not, you must review your query and make sure it selects the correct playback date manually.

For example, if you are manually selecting dates, as in:

Note

Reference the historical table as usual, but replace db.t() with db.i().

You will need to change the selected date in the .where() clause manually. If currentDateNy() is not sufficient for your needs, a good pattern is to store the date partition in a local variable and reuse it throughout the query:

This makes it easier to change the operational date uniformly throughout the query.

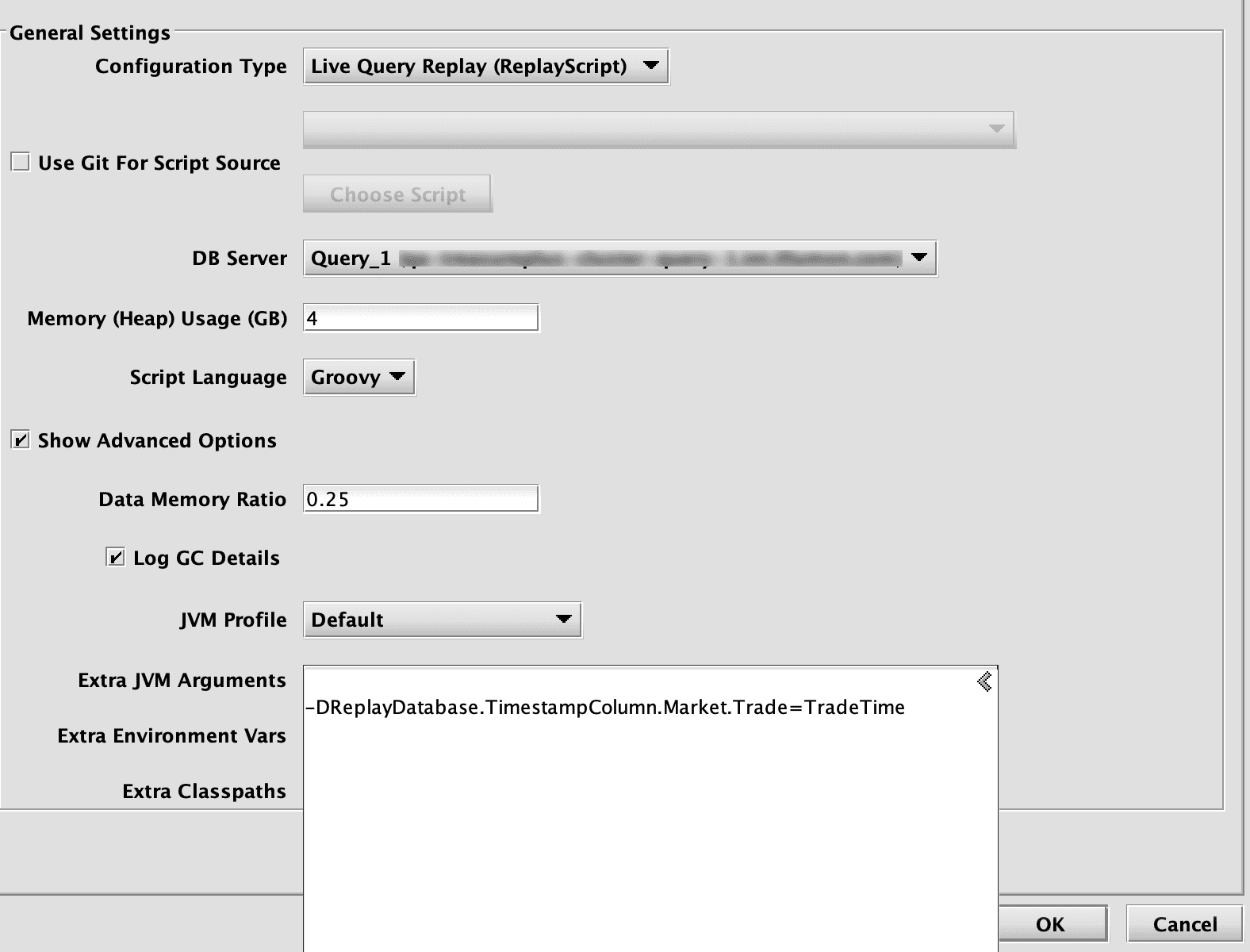
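The same single-variable pattern can be shown with plain Java collections. One `replayDate` variable drives every date filter, so changing the operational date is a one-line edit. The `Row` record, `forDate` helper, and sample rows are hypothetical stand-ins for Deephaven tables and their Date partition column.

```java
import java.util.List;
import java.util.stream.Collectors;

/**
 * Illustration of the "one date variable" pattern, with lists standing in
 * for tables. All names and rows are hypothetical.
 */
final class DatePartitionPattern {
    record Row(String date, String sym) {}

    /** Keeps only the rows from the given date partition. */
    static List<Row> forDate(List<Row> table, String date) {
        return table.stream().filter(r -> r.date().equals(date)).collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // Change the operational date in exactly one place.
        final String replayDate = "2020-01-15";

        List<Row> trades = List.of(new Row("2020-01-14", "AAPL"), new Row("2020-01-15", "MSFT"));
        List<Row> quotes = List.of(new Row("2020-01-15", "AAPL"), new Row("2020-01-15", "MSFT"));

        System.out.println(forDate(trades, replayDate).size()); // 1
        System.out.println(forDate(quotes, replayDate).size()); // 2
    }
}
```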

My Timestamp Column is not ‘Timestamp’

A Replay Query assumes that the replay timestamp column in every table is named “Timestamp” unless you configure it otherwise. If your timestamp column has a different name, such as “TradeTime”, you must tell the query by adding arguments to the query's “Extra JVM Arguments”. Add one such argument for each table whose timestamp column is not named Timestamp.

For example:

Then save your query, and your data will be replayed to the query script.
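The lookup this configuration implies is simple: use a per-table override if one is set, and otherwise fall back to "Timestamp". The sketch below models that lookup with `java.util.Properties`, which is where `-Dkey=value` JVM arguments end up via `System.getProperties()`. The property prefix, the `TimestampColumns` class, and the `MarketUs`/`Trades` names are all hypothetical. Use the argument name documented for your Deephaven version.

```java
import java.util.Properties;

/**
 * Per-table timestamp column lookup with a "Timestamp" default.
 * HYPOTHETICAL: the property prefix below is a placeholder, not Deephaven's.
 */
final class TimestampColumns {
    static final String DEFAULT_COLUMN = "Timestamp";
    static final String PREFIX = "replay.timestampColumn."; // hypothetical placeholder

    static String timestampColumn(Properties props, String namespace, String table) {
        return props.getProperty(PREFIX + namespace + "." + table, DEFAULT_COLUMN);
    }

    public static void main(String[] args) {
        // At runtime you would pass System.getProperties(); a local copy keeps this demo deterministic.
        Properties props = new Properties();
        props.setProperty(PREFIX + "MarketUs.Trades", "TradeTime");
        System.out.println(timestampColumn(props, "MarketUs", "Trades")); // TradeTime
        System.out.println(timestampColumn(props, "MarketUs", "Quotes")); // Timestamp
    }
}
```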

Simulating Intraday Data With Replay

While it is often useful to simply replay historical data to a script, you can also use a historical replay to simulate current intraday data. This use case is very similar to the Replay Query described in the previous section, except that instead of streaming data from db.i() directly into a script, you re-log it and transform its timestamps to match today’s date and time.

This approach lets you run unmodified queries against simulated data, at the cost of a little more complexity and configuration.
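The re-logging flow can be modeled as a small forwarding loop. Every row the replay releases, both the initial snapshot and each later batch of added rows, is handed to a logger for the simulated namespace. This is a conceptual Java sketch. `RowSink` stands in for a generated table logger whose real API is not shown here, and `Relogger` and `Row` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Conceptual re-logging loop: forward every replayed row to a sink.
 * RowSink is a hypothetical stand-in for a generated table logger.
 */
final class Relogger {
    record Row(String time, String sym) {}

    interface RowSink {
        void log(Row row);
    }

    private final RowSink sink;

    Relogger(RowSink sink) {
        this.sink = sink;
    }

    /** Called once with the rows already visible when the script starts. */
    void onSnapshot(List<Row> initial) {
        initial.forEach(sink::log);
    }

    /** Called for each batch of rows the replay releases afterwards. */
    void onAdded(List<Row> added) {
        added.forEach(sink::log);
    }

    public static void main(String[] args) {
        List<Row> logged = new ArrayList<>(); // in-memory sink for the demo
        Relogger relogger = new Relogger(logged::add);
        relogger.onSnapshot(List.of(new Row("09:30:00", "AAPL")));
        relogger.onAdded(List.of(new Row("09:30:05", "MSFT"), new Row("09:31:00", "AAPL")));
        System.out.println(logged.size()); // 3
    }
}
```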

First, configure the schema of the table you want to replay so that it enables TableLogger generation, and then create a schema for the simulated table to write to. Here is an example schema with this update:

Adding the tableLogger="true" setting enables logging tables directly to binary log files. You could replay data directly back into the same namespace and table, but this is not recommended: it is best to keep simulated or replayed data explicitly separate from actual data. You can do this by adding a new schema, created with CopyTable, in a new namespace. Here is an example:

This will add a new system table to Deephaven under the namespace “MarketSim” that you can point your queries to.

Next, configure a replay query exactly as in the previous section. Write a script that pulls the table from the original namespace and simply logs it and its updates back to a binary log file. See below for an example in Groovy:

There is one final thing to account for: if your table contains any DBDateTime columns, you will probably want to convert their values to the current date while keeping the original time of day. This requires a little boilerplate code, explained below:

This final example replays the table to the MarketSim namespace and converts every DBDateTime column it finds to the current date, keeping each value's original time of day.
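The core of that conversion is date arithmetic: keep a timestamp's local time of day and move it onto a new date. The `java.time` sketch below shows this under two assumptions. First, times of day are interpreted in a single zone (New York here). Second, raw values may be stored as nanoseconds since the epoch. `ShiftToToday` is a hypothetical helper, not the boilerplate referred to above.

```java
import java.time.Instant;
import java.time.LocalDate;
import java.time.ZoneId;
import java.time.ZonedDateTime;

/**
 * Moves a timestamp onto a new date while keeping its local time of day.
 * Assumptions: one time zone for "time of day"; optional epoch-nanosecond input.
 */
final class ShiftToToday {
    static Instant shiftToDate(Instant ts, LocalDate targetDate, ZoneId zone) {
        ZonedDateTime original = ts.atZone(zone);
        // Same wall-clock time on the new date; the UTC offset is recomputed (e.g. EST vs EDT).
        return ZonedDateTime.of(targetDate, original.toLocalTime(), zone).toInstant();
    }

    static long shiftEpochNanos(long epochNanos, LocalDate targetDate, ZoneId zone) {
        Instant ts = Instant.ofEpochSecond(0, epochNanos);
        Instant shifted = shiftToDate(ts, targetDate, zone);
        return Math.addExact(Math.multiplyExact(shifted.getEpochSecond(), 1_000_000_000L), shifted.getNano());
    }

    public static void main(String[] args) {
        ZoneId newYork = ZoneId.of("America/New_York");
        Instant historical = Instant.parse("2020-01-15T14:30:00Z"); // 09:30:00 EST
        // Simulate today: prints today's date at 09:30 New York time, expressed in UTC.
        System.out.println(shiftToDate(historical, LocalDate.now(newYork), newYork));
        // Fixed date for a deterministic result:
        System.out.println(shiftToDate(historical, LocalDate.of(2024, 5, 1), newYork)); // 2024-05-01T13:30:00Z
    }
}
```

Converting through the zone, rather than adding a whole number of days, keeps 09:30 at 09:30 across daylight-saving changes. A local time that falls in a spring-forward gap on the target date is moved forward by `ZonedDateTime.of`.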