Monitoring persistent query performance with dashboards

This guide will show you how to monitor the performance of your persistent queries by creating a dashboard of Deephaven's internal tables. These tables include heap usage and worker, port, server, and host information. The same source data can be used to drill down into the performance of a specific query worker, as discussed in our Troubleshooting and monitoring queries with internal tables guide.

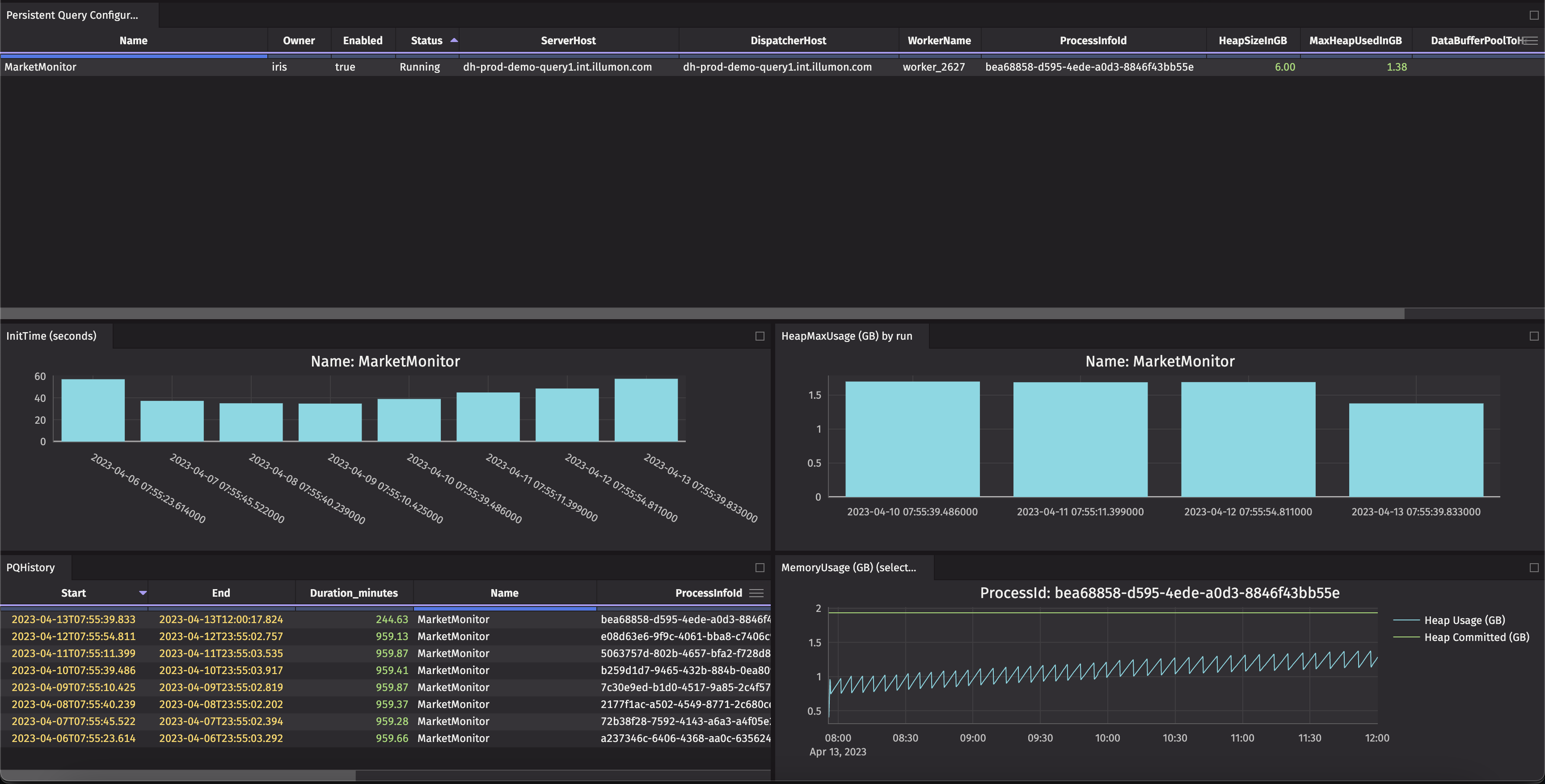

Dashboard for monitoring persistent query memory and initialization times

The following code example creates a dashboard with graphs of initialization times and heap usage over the last seven days for a specific persistent query (PQ), plus a graph of heap usage throughout the day for a single run.

The two tables, PQHistory and Persistent Query Configurations, include helpful data such as query status (running, failed, etc.) and the amount of memory configured versus the amount of memory currently used.

Expand for full query:

To filter the graphs to a specific query, use a OneClick panel or the Linker tool to link the graphs to the dashboard's PQHistory and PQ configuration tables.

Underlying data

This dashboard uses several tables within the DbInternal namespace. It can be helpful to know what data is available in case you want to create your own views.

- The PersistentQueryConfigurationLogV2 table contains the configuration information for all persistent queries. This includes the owner, what server it will run on, when it was last modified and by whom, etc.

- The PersistentQueryStateLog table shows the status (Running, Initializing, Failed, etc.) of all persistent queries.

- The ProcessMetrics table contains (among many other metrics) the heap usage data used in this dashboard.

More information can be found in the troubleshooting documentation.
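To make the role of the state log more concrete, here is a minimal plain-Python sketch of the "most recent row per query" reduction that a status view relies on. It does not call the Deephaven API. The sample rows and the field names `Name`, `Status`, and `Timestamp` are assumptions made for illustration and may not match the actual columns of PersistentQueryStateLog.

```python
from datetime import datetime

# Hypothetical sample rows standing in for a state log; not real Deephaven data.
state_log = [
    {"Name": "ImportTrades", "Status": "Initializing", "Timestamp": datetime(2024, 1, 2, 8, 0)},
    {"Name": "ImportTrades", "Status": "Running", "Timestamp": datetime(2024, 1, 2, 8, 5)},
    {"Name": "RiskReport", "Status": "Initializing", "Timestamp": datetime(2024, 1, 2, 9, 0)},
    {"Name": "RiskReport", "Status": "Failed", "Timestamp": datetime(2024, 1, 2, 9, 1)},
]


def latest_status(rows):
    """Return the most recent status for each query name."""
    latest = {}
    for row in rows:
        seen = latest.get(row["Name"])
        # Keep the row with the newest timestamp for each query.
        if seen is None or row["Timestamp"] >= seen["Timestamp"]:
            latest[row["Name"]] = row
    return {name: row["Status"] for name, row in latest.items()}


statuses = latest_status(state_log)
print(statuses)
```

In a real dashboard this reduction would be done with table operations on the log itself; the sketch only shows the logic.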

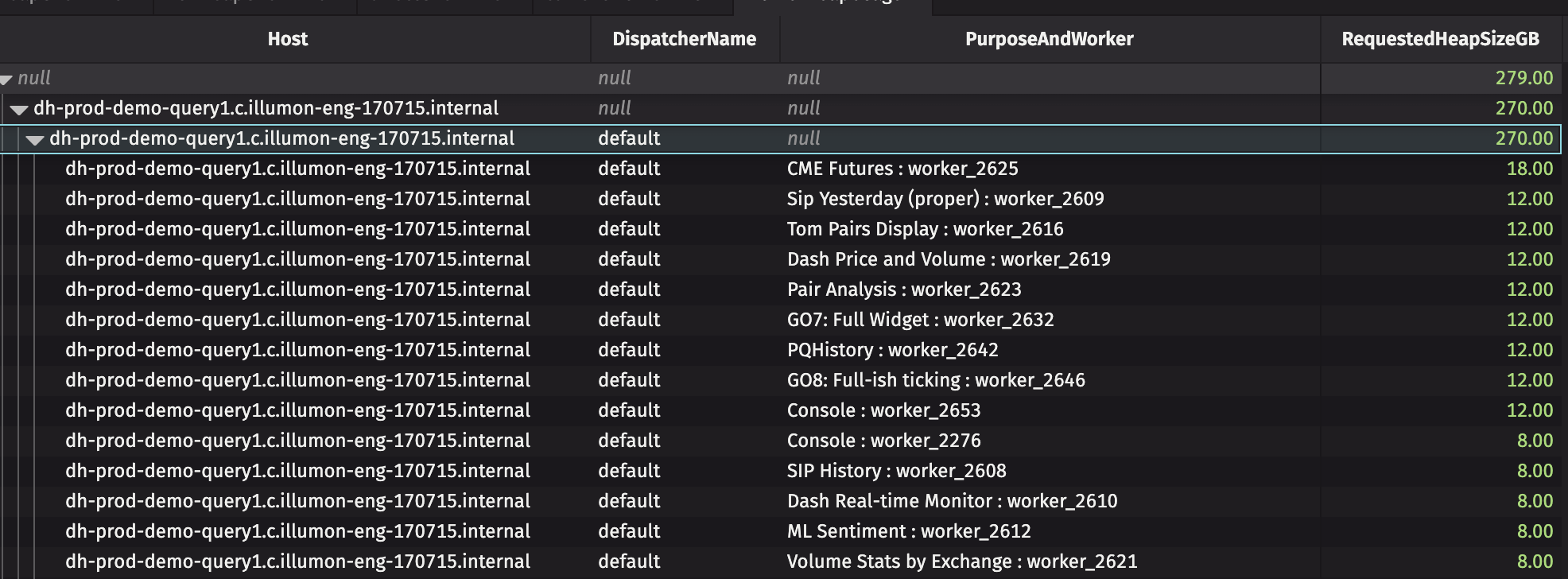

Dashboard for monitoring server usage

This dashboard calculates the heap usage of all persistent queries and breaks it down by server. This can help in load balancing your Deephaven cluster. It also shows all current workers and their server hosts, heap allocation, and PQ name (if applicable).
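The per-server breakdown described above amounts to a group-and-sum over workers. The following is a minimal plain-Python sketch of that aggregation, not the dashboard's actual query. The sample rows, the `PQName` and `HeapGB` field names, and the host names are all hypothetical; workers without a PQ name are left out of the totals on the assumption that only persistent queries are counted.

```python
from collections import defaultdict

# Hypothetical worker rows; a PQName of None marks a worker that is not a persistent query.
workers = [
    {"Host": "query-1", "Process": "worker_1", "PQName": "ImportTrades", "HeapGB": 4.0},
    {"Host": "query-1", "Process": "worker_2", "PQName": "RiskReport", "HeapGB": 8.0},
    {"Host": "query-2", "Process": "worker_3", "PQName": "Dashboards", "HeapGB": 2.0},
    {"Host": "query-2", "Process": "worker_4", "PQName": None, "HeapGB": 1.0},
]


def pq_heap_by_server(rows):
    """Sum the heap of persistent-query workers for each server host."""
    totals = defaultdict(float)
    for row in rows:
        if row["PQName"] is not None:  # skip workers that are not PQs
            totals[row["Host"]] += row["HeapGB"]
    return dict(totals)


totals = pq_heap_by_server(workers)
print(totals)
```

A server whose total is far above the others is a candidate for moving queries elsewhere.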

Expand for full query:

Underlying data

This dashboard uses several tables within the DbInternal namespace. It can be helpful to know what data is available in case you want to create your own views.

- The ProcessEventLog table contains the logs (stdout, stderr, etc.) of all query workers. We use it here to get a table of the ProcessInfoId, Host, and Process (the name, e.g. worker_1523) of all query workers.

- The AuditEventLog table: this dashboard uses the Starting worker event to get the server port and process name of the worker.

- The QueryPerformanceLog table contains query-level performance metrics. It's used here to get the RequestedHeapSize of each worker.

- The PersistentQueryStateLog table (see above) is used to get the name of each PQ.
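As a rough illustration of how these sources fit together, the sketch below combines worker rows with heap sizes and PQ names in plain Python. It is not the dashboard's query. It assumes that every source can be keyed on ProcessInfoId, which may not hold for the real tables, and the IDs, heap values, and the `PQName` field are made up.

```python
# Hypothetical rows shaped like the ProcessEventLog-derived worker table.
process_events = [
    {"ProcessInfoId": "id-1", "Host": "query-1", "Process": "worker_1"},
    {"ProcessInfoId": "id-2", "Host": "query-2", "Process": "worker_2"},
]

# Hypothetical lookups: ProcessInfoId -> RequestedHeapSize, ProcessInfoId -> PQ name.
requested_heap = {"id-1": 4096, "id-2": 2048}
pq_names = {"id-1": "ImportTrades"}  # id-2 is a worker with no PQ


def build_worker_view(events, heap_by_id, names_by_id):
    """Attach heap size and PQ name (None if not applicable) to each worker row."""
    view = []
    for event in events:
        pid = event["ProcessInfoId"]
        view.append({
            **event,
            "RequestedHeapSize": heap_by_id.get(pid),
            "PQName": names_by_id.get(pid),
        })
    return view


worker_view = build_worker_view(process_events, requested_heap, pq_names)
for row in worker_view:
    print(row)
```

Using `.get` keeps workers that have no matching PQ name in the result, which mirrors the "if applicable" PQ name column in the dashboard.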