It's hard to avoid the “crypto buzz” filling the news these days. With a powerful, real-time data engine like Deephaven Community available, we figured you should know how to pull in both historical and live crypto data. To follow along, open our ready-to-go Docker container with the Cryptocurrency history example and read on for the details.

Preparing the example

First, make sure you have the Python version of Deephaven running with our example data. For more on how to start that, see our quick start. Then navigate to the directory /data/examples/CryptoCurrency/. We'll look at the files you need to know about and how to modify them for your own analysis.

The script will pull live and historical data for specified cryptocurrencies from the CoinGecko website into Deephaven. We are using Deephaven's Application Mode, which allows the Python script to run before we enter the session so that the tables are ready for use.

There are a lot of cryptocurrencies to track, and a lot of crypto APIs for pulling data. We chose CoinGecko because it has one of the most comprehensive lists, covering the well-known bitcoin along with more than 9,000 other assets.

Inside the directory /data/examples/CryptoCurrency/, notice the Dockerfile. This file takes the Deephaven base image and extends it to work with CoinGecko by installing the pycoingecko package. It also copies the app.d directory, which Application Mode reads at startup, into the Docker container.

FROM ghcr.io/deephaven/server

COPY app.d/ /app.d

RUN pip3 install pycoingecko

The app.d directory contains the core of the example: the script crypto.py. This script is meant to be modified; changes to the file show up in the Deephaven IDE when you start the session.

In this example we track just a few coins to keep the script simple. To change the coins, edit the ids list in crypto.py and add or remove the ones you want. Below, you can see we are tracking just nine coins.

ids = ['bitcoin', 'ethereum', 'litecoin', 'dogecoin', 'tether', 'binancecoin', 'cardano', 'ripple', 'polkadot']

Another key variable is secondsToSleep, an integer number of seconds between data pulls. The CoinGecko free API has a rate limit of 50 calls/minute, and requests that come too frequently return the HTTP status '429 Too Many Requests'. If you exceed the rate limit, you'll be blocked until the next 1-minute window, so balance this variable against the number of coins you observe. Price, trading volume, and market capitalization are updated roughly once a minute, so there is no benefit to making this value very small.
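The balance between the number of coins and secondsToSleep comes down to simple arithmetic, sketched below. This assumes one API call per coin per pull, which may not match how crypto.py actually batches its requests; the function name min_seconds_to_sleep and the calls_per_coin parameter are hypothetical and only here for illustration.

```python
import math

RATE_LIMIT_PER_MINUTE = 50  # CoinGecko free API limit quoted in this post


def min_seconds_to_sleep(num_coins, calls_per_coin=1, limit=RATE_LIMIT_PER_MINUTE):
    """Smallest whole-second interval that keeps calls per minute within the limit.

    Assumption: every pull makes `calls_per_coin` requests for each coin.
    """
    calls_per_pull = num_coins * calls_per_coin
    # There are 60 / interval pulls per minute, so we need
    # calls_per_pull * 60 / interval <= limit.
    return max(1, math.ceil(60 * calls_per_pull / limit))


ids = ['bitcoin', 'ethereum', 'litecoin', 'dogecoin', 'tether',
       'binancecoin', 'cardano', 'ripple', 'polkadot']
print(min_seconds_to_sleep(len(ids)))  # 11
```

Under that assumption, nine coins need at least 11 seconds between pulls. If the script fetches all coins in a single request, the limit is far less restrictive.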

There are only two functions in the script: get_coingecko_table_historical, which creates a table of historical values, and get_coingecko_table_live, which pulls in live data at regular intervals. To limit the results to live data only, set getHistory = False to skip the historical pull.
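To give a rough idea of what the two kinds of pulls might look like, here is a minimal sketch. It is not the contents of crypto.py: the helper names history_rows, live_rows, and collect_rows, the row layout, and the days default are all hypothetical. The cg argument is assumed to be a pycoingecko CoinGeckoAPI client, and the sketch only builds plain Python rows rather than Deephaven tables.

```python
def history_rows(cg, coin_id, days=90, vs_currency='usd'):
    """Return (coin, timestamp_ms, price, market_cap, volume) rows for one coin."""
    chart = cg.get_coin_market_chart_by_id(
        id=coin_id, vs_currency=vs_currency, days=days)
    rows = []
    # Each series is a list of [timestamp_ms, value] pairs.
    for (ts, price), (_, cap), (_, vol) in zip(
            chart['prices'], chart['market_caps'], chart['total_volumes']):
        rows.append((coin_id, ts, price, cap, vol))
    return rows


def live_rows(cg, ids, vs_currency='usd'):
    """Return one (coin, last_updated_s, price, market_cap, volume) row per coin."""
    data = cg.get_price(
        ids=ids, vs_currencies=vs_currency,
        include_market_cap='true', include_24hr_vol='true',
        include_last_updated_at='true')
    rows = []
    for coin_id in ids:
        quote = data.get(coin_id)
        if quote is None:
            continue  # coin missing from the response; skip it
        rows.append((coin_id,
                     quote.get('last_updated_at'),
                     quote.get(vs_currency),
                     quote.get(vs_currency + '_market_cap'),
                     quote.get(vs_currency + '_24h_vol')))
    return rows


def collect_rows(cg, ids, getHistory=True):
    """Gather history first (only if requested), then the current live quotes."""
    rows = []
    if getHistory:
        for coin_id in ids:
            rows.extend(history_rows(cg, coin_id))
    rows.extend(live_rows(cg, ids))
    return rows


# Usage (needs network access and `pip3 install pycoingecko`):
#   from pycoingecko import CoinGeckoAPI
#   rows = collect_rows(CoinGeckoAPI(), ['bitcoin', 'ethereum'], getHistory=False)
```

Passing the client in as an argument keeps the helpers easy to try out with a stand-in object before spending any of the rate limit.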

After you've configured ids, secondsToSleep, and getHistory, it's time to run the script.

From the directory, the simple shell script start.sh launches the entire package. Run:

sh start.sh

Go to http://localhost:10000/ide to view the tables in the top right Panels tab!

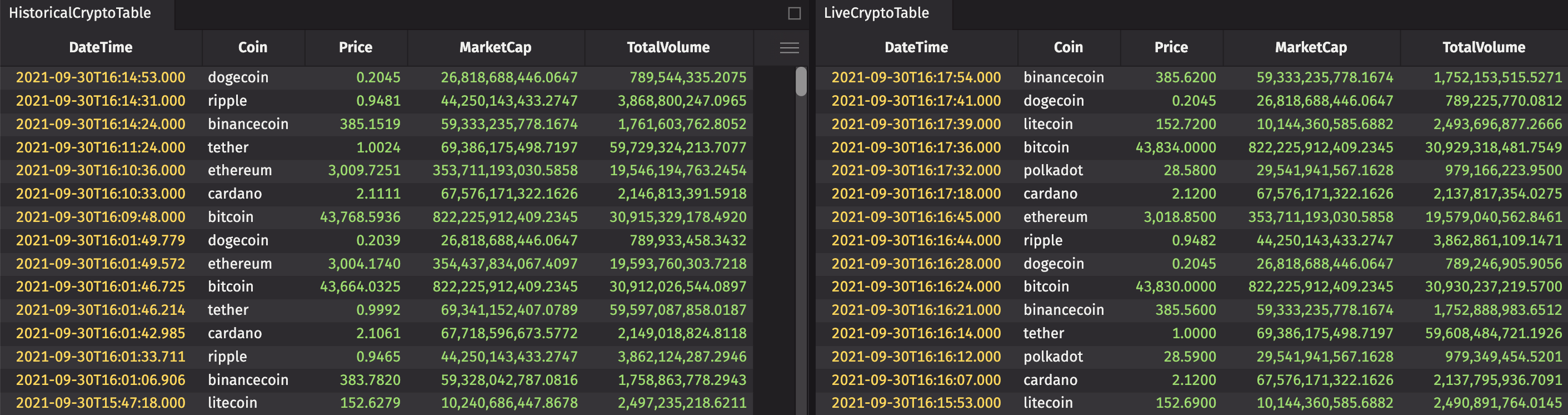

If you have both the historical and live tables, you should see something like this:

As time moves forward, the live table adds more data. The script calls CoinGecko every secondsToSleep seconds and loads the result into the table only if the data has changed. Repeated data is not included, so the more active the coin, the more rows are collected.
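The "skip repeated data" behavior described above can be sketched in plain Python as follows. This is one possible approach, not necessarily how crypto.py does it; the function name new_rows_only and the snapshot layout are hypothetical, and the prices are made-up placeholder values.

```python
def new_rows_only(snapshot, last_seen):
    """Return rows from `snapshot` whose values differ from the last stored ones.

    snapshot:  dict mapping coin id -> tuple of values from the latest pull
    last_seen: dict of the most recent values per coin; updated in place
    """
    fresh = []
    for coin_id, values in snapshot.items():
        if last_seen.get(coin_id) != values:
            last_seen[coin_id] = values
            fresh.append((coin_id, *values))
    return fresh


last_seen = {}
first_pull = {'bitcoin': (100.0,), 'ethereum': (10.0,)}   # placeholder prices
second_pull = {'bitcoin': (100.0,), 'ethereum': (11.0,)}  # only ethereum moved

print(new_rows_only(first_pull, last_seen))   # [('bitcoin', 100.0), ('ethereum', 10.0)]
print(new_rows_only(second_pull, last_seen))  # [('ethereum', 11.0)]
```

In a polling loop, each pull would be passed through a filter like this before its rows are appended to the live table, followed by a sleep of secondsToSleep seconds.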

Now you can take the data and build joins, filters, summaries, and plots, just as in your other Deephaven projects. Check out our full user documentation to learn about the Deephaven Query Language methods and UI features for working with data.

Further reading

- Learn more about Application Mode.

- Learn more about the Deephaven Core API.