Many of the biggest players in the crypto space use AI to predict prices and manage investments. So should you. Doing so isn't as difficult as you might think.

With the right AI, crypto portfolio management becomes easier and safer.

This is the second post in a six-part blog series on real-time crypto price predictions with AI. In this post, I'll use TensorFlow to build a model that predicts crypto prices. Keep up with the blog series:

- Acquire up-to-date crypto data with Apache Airflow

- Implement real-time AI with TensorFlow

- Implement real-time AI with Nvidia RAPIDS

- Test the models on simulated real-time data

- Implement the models on real-time crypto data from Coinbase

- Share AI predictions with URIs

Crypto price prediction model

I've got up-to-date BTC data in Apache Parquet format. It's time to do the fun part: build a machine learning model.

I will use TensorFlow to build an LSTM model. In the next blog in the series, I'll implement the equivalent model with Nvidia RAPIDS.

The models are tested using Deephaven as a Python library. Additionally, I'm using Windows and a CUDA-enabled GPU, and thus have to configure my GPU and WSL2. For a simple example of using pip-installed Deephaven with the GPU, check out my previous blog.

Whichever AI library you use, the workflow is the same:

- Import and start a Deephaven server.

- Read a subset of the data.

- Scale the data and split it into train/test sets.

- Construct a simple LSTM model.

- Define a function to train the model - this requires a second function to convert table data to a NumPy ndarray.

- Train the model via deephaven.learn.
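The scaling step is worth a closer look, because the model's predictions come back in the scaled range and have to be mapped back to prices. Here's a minimal pure-Python sketch of the min-max formula that scikit-learn's MinMaxScaler applies with feature_range=(-1, 1). The function names and the sample prices are made up for illustration; they aren't part of this series' code.

```python
def fit_min_max(values):
    """Return the (min, max) of the data the scaler is fitted on."""
    return min(values), max(values)


def scale(value, data_min, data_max, lo=-1.0, hi=1.0):
    """Map a raw value from [data_min, data_max] onto [lo, hi]."""
    return (value - data_min) / (data_max - data_min) * (hi - lo) + lo


def unscale(scaled, data_min, data_max, lo=-1.0, hi=1.0):
    """Invert scale(): map a scaled value back to the original units."""
    return (scaled - lo) / (hi - lo) * (data_max - data_min) + data_min


# Hypothetical prices, for illustration only
prices = [20000.0, 21000.0, 22000.0, 24000.0]
data_min, data_max = fit_min_max(prices)
scaled_prices = [scale(p, data_min, data_max) for p in prices]
restored = [unscale(s, data_min, data_max) for s in scaled_prices]
```

With scikit-learn itself, the scaler's inverse_transform method does the same job as unscale does here.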

TensorFlow

This code implements a simple LSTM model in TensorFlow. The model is trained on scaled BTC price data stored in a Deephaven table. TensorFlow's Keras package also comes with the TimeseriesGenerator class, which simplifies converting a 1-D array of data into the 3-D array typically required by LSTM models.
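To make that conversion concrete, here's a rough sketch in plain Python of the windows such a generator produces: each sample holds n_input consecutive values, and its target is the value that follows them. The function make_windows and the sample series are hypothetical; this mirrors the idea rather than TimeseriesGenerator's actual implementation.

```python
def make_windows(series, n_input):
    """Turn a 1-D series into (samples, targets).

    samples has shape (n_samples, n_input, 1): each value is wrapped in a
    one-element list to stand in for the single feature dimension.
    targets[k] is the value that immediately follows samples[k].
    """
    samples, targets = [], []
    for start in range(len(series) - n_input):
        window = series[start:start + n_input]
        samples.append([[value] for value in window])
        targets.append(series[start + n_input])
    return samples, targets


# Hypothetical scaled prices, for illustration only
series = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6]
samples, targets = make_windows(series, n_input=4)
```

A series of six values with n_input=4 yields two samples, because every window needs a following value to serve as its target.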

LSTM in TensorFlow

from deephaven_server import Server

s = Server(port=10_000, jvm_args=["-Xmx4g"])

s.start()

from deephaven import ugp

ugp.auto_locking = True

# Deephaven imports

from deephaven import parquet as dhpq

from deephaven import numpy as dhnp

from deephaven.learn import gather

from deephaven import learn

# Python imports

from tensorflow.keras.preprocessing.sequence import TimeseriesGenerator

from tensorflow.keras.models import Sequential

from sklearn.metrics import mean_squared_error

from sklearn.preprocessing import MinMaxScaler

from tensorflow.keras.layers import Dense

from tensorflow.keras.layers import LSTM

import tensorflow as tf

import numpy as np

import threading

import glob

import time

import os

# Get the most up-to-date BTC data (replace the path on the next line with your own file location)

list_of_files = glob.glob('/mnt/c/Users/yuche/all_data/*')

latest_file = max(list_of_files, key=os.path.getctime)

result = dhpq.read(latest_file).reverse().tail_pct(0.02)

# Scale the data and split into train/test sets

scaler = MinMaxScaler(feature_range=(-1, 1))

scaled_price = scaler.fit_transform(dhnp.to_numpy(result.view(["Price"])).reshape(-1, 1))

# Look up the scaled price for row index i (called from the query string below)
def get_scaled_price(scaled_price, i):
    return scaled_price[i].item()

result = result.update(["ScaledPrice = (double)get_scaled_price(scaled_price, i)"])

train_dh = result.head_pct(0.7)

test_dh = result.tail_pct(0.3)

# Define the model

n_input = 4

n_features = 1

model = Sequential()

model.add(LSTM(100, activation='relu', input_shape=(n_input, n_features)))

model.add(Dense(1))

model.compile(optimizer='adam', loss='mse')

# Convert Deephaven columns of doubles to a 2d NumPy array

def table_to_numpy_double(rows, cols):
    return gather.table_to_numpy_2d(rows, cols, np_type=np.double)

# Train the model in a function

def train_model(data):
    # Reshape to a single column so each row is one time step
    new_data = data.reshape(-1, 1)
    generator = TimeseriesGenerator(new_data, new_data, length=n_input, batch_size=1)
    model.fit(generator, epochs=50)

learn.learn(
    table=train_dh,
    model_func=train_model,
    inputs=[learn.Input("ScaledPrice", table_to_numpy_double)],
    outputs=None,
    batch_size=train_dh.size
)

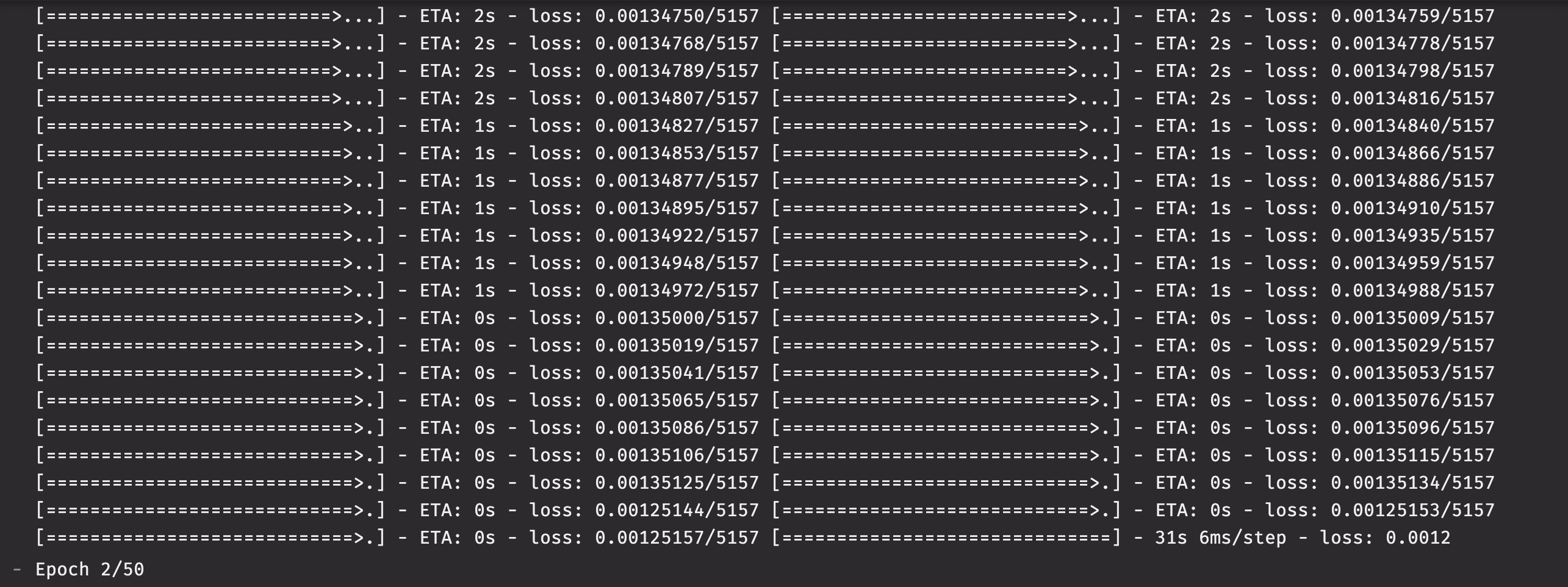

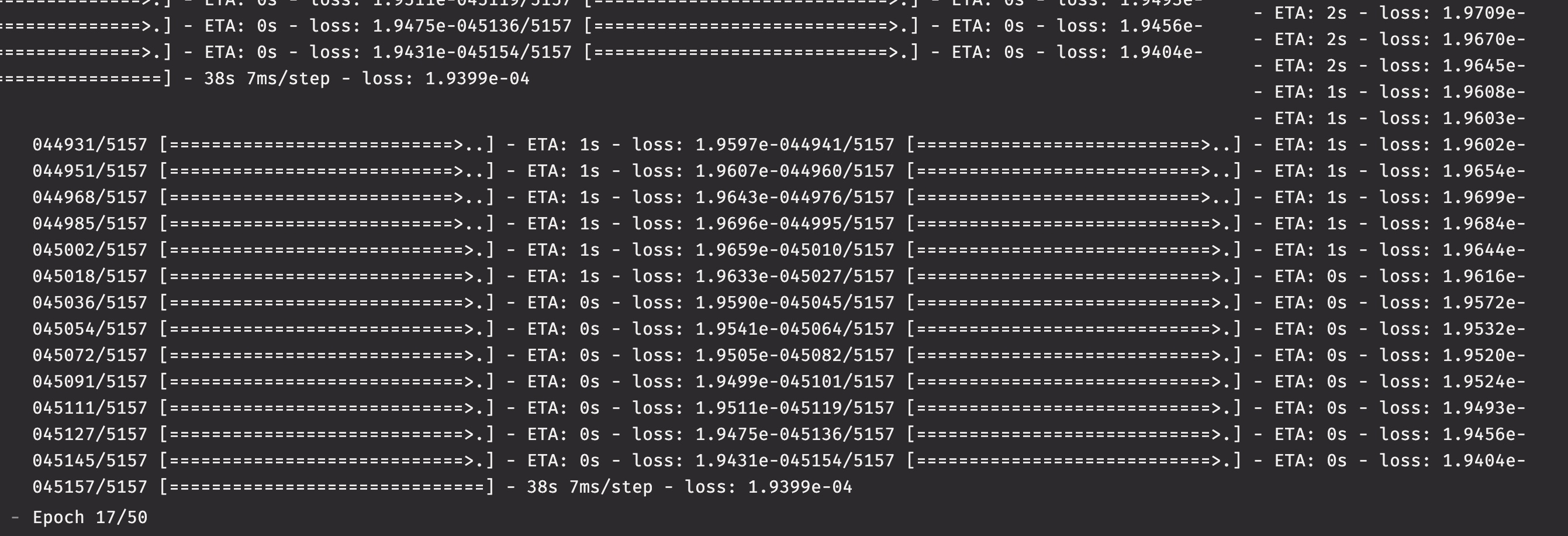

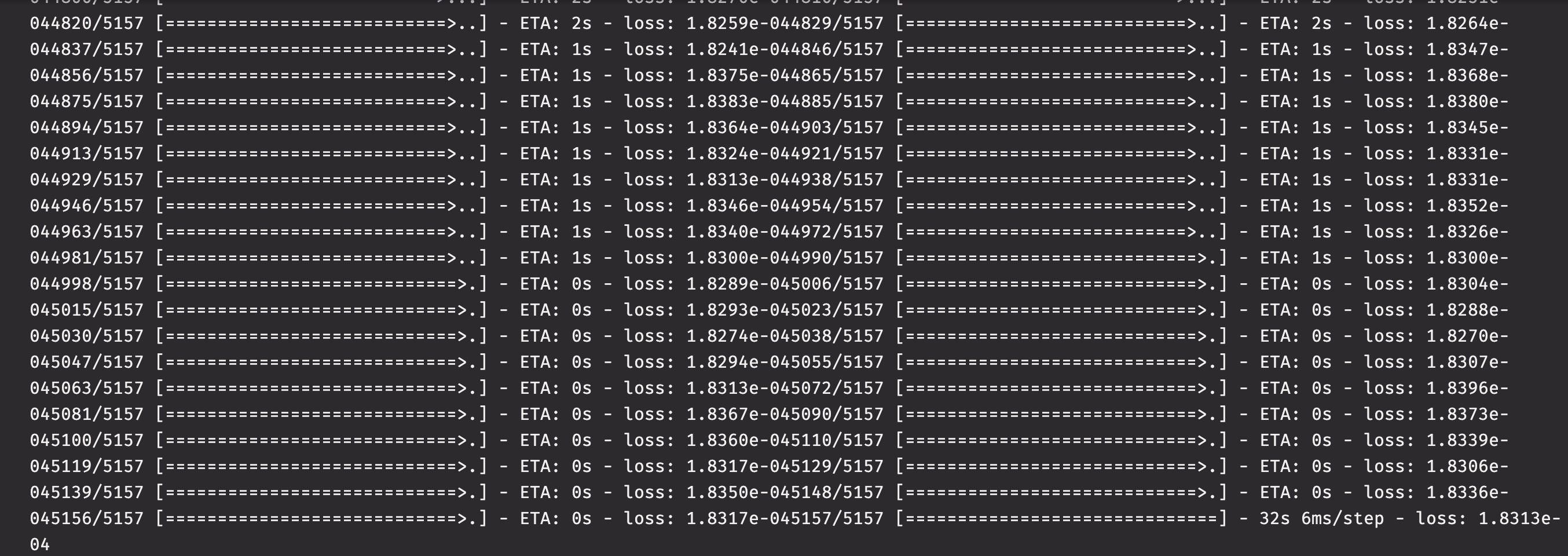

The screenshots above are from the model's training. The first shows the loss after one training epoch, the second after the 17th epoch, and the third after the 50th and final epoch. The loss barely drops between epochs 17 and 50. These diminishing returns suggest that, for this model and data, 50 epochs is overkill.
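One way to avoid the extra epochs is early stopping: halt training once the loss stops improving by a meaningful amount. The sketch below shows the idea in plain Python. The function name, the patience and min_delta values, and the loss numbers are all made up for illustration; they aren't the losses from the screenshots. Keras ships an EarlyStopping callback built on the same idea.

```python
def early_stop_epoch(losses, patience=3, min_delta=1e-4):
    """Return the 1-based epoch at which training would stop.

    Training stops once `patience` consecutive epochs fail to beat the
    best loss seen so far by more than `min_delta`. If that never
    happens, the last epoch is returned.
    """
    best = float("inf")
    stale_epochs = 0
    for epoch, loss in enumerate(losses, start=1):
        if best - loss > min_delta:
            # Meaningful improvement: record it and reset the counter
            best = loss
            stale_epochs = 0
        else:
            stale_epochs += 1
            if stale_epochs >= patience:
                return epoch
    return len(losses)


# Hypothetical loss curve, for illustration only
losses = [0.0500, 0.0100, 0.0040, 0.0039, 0.0039, 0.0039, 0.0038]
stop_at = early_stop_epoch(losses, patience=3, min_delta=1e-3)
```

A larger patience tolerates longer plateaus before stopping, while a larger min_delta treats small improvements as no improvement at all.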

What's next?

So far we've got a workflow for acquiring up-to-date crypto data and a TensorFlow model built. Next, we'll cover Nvidia RAPIDS. After that come results on both simulated and true real-time data. And what good would results be if you couldn't share them? We'll finish the series by showing how you can share your work via Deephaven's URI package.

Feel free to try the scripts and build your own crypto price prediction model. Contact us on Slack if you have any questions or feedback.