Python has been among the most popular programming languages for some time now, largely because of its ease of access, readability, and incredible wealth of packages at its users' fingertips. Despite its popularity, Python is derided for being slow. This isn't without reason. Python's global interpreter lock (GIL) can make single-threaded code faster, but prevents concurrency. Deephaven's engine takes full advantage of concurrency, so that benefit can be lost in Python queries. This is also not the only limitation introduced by Python in Deephaven queries. In this blog, I'll talk about the limitations, and how to circumvent them for lightning fast queries.

Deephaven Python queries can be sped up by limiting the following:

- Time spent within the GIL

- The number of Python-Java boundary crossings

It turns out that these can both be minimized with the same solution.

You'll see big speedups in your queries by using built-in methods in your query strings.

Deephaven's Python API

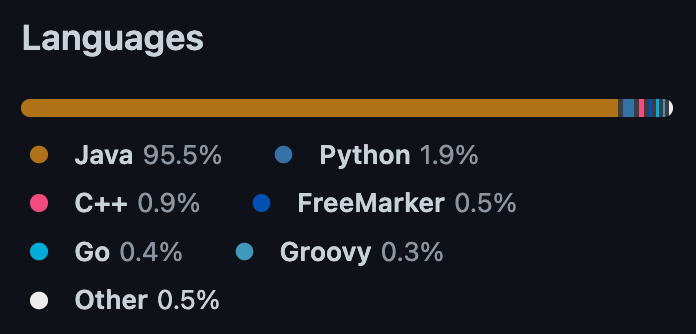

Deephaven's query engine is implemented largely in Java.

The Python API wraps the engine and its table API in Python so that users like you and I can perform powerful real-time analyses with the language we know and love. This has two beneficial effects:

- Real-time data analysis is done with familiar Pythonic syntax.

- Table operations aren't performed outside of the GIL.

The second bullet point is worth discussing further.

The Python GIL

Python's global interpreter lock (GIL) is a global mutex that prevents multiple threads from operating on the same data sequentially. The main purpose of any mutex in multi-threaded code is to prevent this from happening. Per the Python wiki:

It is only in multithreaded programs that spend a lot of time inside the GIL, interpreting CPython bytecode, that the GIL becomes a bottleneck.

What does it mean to "spend time inside the GIL"? Since the GIL is global, it prevents Python threads from ever executing simultaneously. In other programming languages, there is no global lock, so threads will run simultaneously if you tell them to. So, to circumvent the GIL, you can make Python code call non-Python code. Your code will then be limited only by the GIL during the time that Python takes to call another language.

In Deephaven Python queries, when a table operation is called, Java code is called under the hood. Thus, your code, by definition, will only be limited by the amount of time it takes Python to call that Java code, right? Well, yes, but with an important caveat.

The Python-Java boundary

Deephaven's Python API has to be able to call Java code in some way. Not only that, but the query engine has to be able to call Python code from Java in some way. Deephaven accomplishes this through the use of jpy, a bi-directional Python-Java bridge that allows Python code to call Java and vice versa.

Let's look at an example where the Python-Java bridge is crossed in both directions:

from deephaven import empty_table

import numpy as np

source = empty_table(32).update(["X = 0.1 * i", "Y = (double)np.sin(X)"])

What's going on here?

- The Deephaven Python empty_table function calls the underlying Java method to create an empty table with a given number of rows (32).

- The GIL only affects the amount of time it takes for the Python API to call the underlying Java method, and to return a Python-wrapped resultant table. These are trivial.

- The Deephaven Python update function calls the underlying Java method to add columns to the

sourcetable. In this case, those columns areX, which is a tenth of the row index, andY, which is the sine ofX. It's important to note that NumPy's sine function is called here.- The code is limited by the GIL first when the Python API spends time calling the underlying Java

updatemethod, and later when returning a Python-wrapped result. These are trivial. - The code is also limited by any Python code in the second query string. Deephaven's query language implements Java, so in the case of the

Ycolumn, the Java code under the hood has to call Python code. Thus, the creation of theYcolumn is limited by the GIL, since it's Python code being executed by Java. - The second query string is also limited by the Python-Java boundary. Query strings that call Python have to cross the Python-Java boundary in both directions. While the amount of time to do this is pretty small, it can happen more than once for a single query string. For sufficiently large data, this is done in chunks; sufficiently large tables contain many of these chunks.

- The code is limited by the GIL first when the Python API spends time calling the underlying Java

In a query that only creates a 32 row static table with two columns, any Python slowdown is irrelevant. Any software language will complete a set of instructions like this in a trivial amount of time. In larger queries, this will add up.

Efficient queries will cross the Python-Java boundary as few times as possible by using built-in query language methods. In the case of the code above, it can be sped up by using the built-in sine function.

from deephaven import empty_table

source = empty_table(32).update(["X = 0.1 * i", "Y = sin(X)"])

Deephaven DateTimes

The most common cause of real-world query performance bottlenecks in Python are during the processing of DateTimes. Deephaven's DateTime type has many useful and powerful methods associated with it; it makes time-series processing and filtering of data easy. As mentioned previously, the deephaven.time module has all of this stuff. But, once again, it shouldn't be used in query strings. Let's find out why.

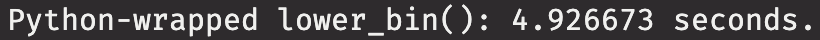

The code below performs a lower_bin to round each DateTime in the Timestamp column of the source table down to the nearest 10 minute bin. The source table has 604,801 rows (one week in seconds). The operation is done multiple times on a large table and timed to see how long it takes, on average, to finish.

from deephaven import time as dhtu

from time import time

def nmin_lower_bin(t, n):

return dhtu.lower_bin(t, n * dhtu.MINUTE)

base_time = dhtu.to_datetime("2023-05-01T00:00:00 NY")

source = empty_table(604_801).update(["Timestamp = base_time + i * SECOND"])

start = time()

for idx in range(10):

result = source.update(["LowerBin_10Min = (DateTime)nmin_lower_bin(Timestamp, 10)"])

end = time()

elapsed = (end - start) / 10

print(f"Python-wrapped lower_bin(): {(elapsed):3f} seconds.")

About 5 seconds to process >600k rows of data. That's over 100k rows/second! That must be fast, right?

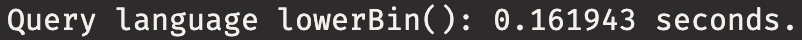

This time around, we use the lowerBin method built into the query language. The rest of the code is the same.

from deephaven import empty_table

from deephaven.time import to_datetime

from time import time

base_time = to_datetime("2023-05-01T00:00:00 NY")

source = empty_table(604_801).update(["Timestamp = base_time + i * SECOND"])

start = time()

for idx in range(10):

result = source.update(["LowerBin_10Min = lowerBin(Timestamp, 10 * MINUTE)"])

end = time()

elapsed = (end - start) / 10

print(f"Query language lowerBin(): {(elapsed):3f} seconds.")

Around 162 milliseconds to process >600k rows of data. That's over THIRTY times faster. How is it that much faster?

- Calling the Python

lower_binfunction in a query string means that Java calls Python, which calls Java again. This happens numerous times, as it does this in chunks, and there are numerous chunks in >600k rows. - The Python code that gets called from the query string is limited by the GIL. Thus, concurrency goes to near-zero. When

lowerBinis called in the query string, it's Java being called from Java. Concurrency can occur in this case.

Those are the two biggest factors, and they make a massive difference.

Built-in query language methods

There are many built-in query language methods you can take advantage of. Your queries can greatly benefit from using them. These are the ones I use the most:

- Anything in DateTimeUtils

- Anything in the Numeric class

- Random number generators

Further reading

Interested in reading more? Check out the following guides:

Watch and see

Reach out

Got questions or comments? Reach out to us on Slack!