Machine learning techniques are increasingly important in healthcare. Key applications include medical image classification, treatment recommendations, disease detection, and prediction. This blog post discusses predicting seizures in epileptic patients through binary classification.

You don't need to be a neuroscience expert to develop a basic working prototype of a seizure prediction model. To classify electroencephalogram (EEG) signals, we will use a long short-term memory (LSTM) recurrent neural network (RNN) with Deephaven.

Deephaven is ideally suited for this task: its images for AI/ML in Python make using TensorFlow easy (for more information, please see our guide). In addition, real-world EEG data arrives as a stream, such as neural activity records from brain implants, sensors, or wearable devices. Deephaven's streaming tables are a natural choice for making real-time predictions.

Background

Seizures are like storms in the brain: sudden bursts of abnormal electrical activity that can disturb movement, behavior, feelings, and awareness. They occur with no regularity, so doctors cannot tell people with epilepsy when the next seizure might happen: 20 hours, 20 days, or 20 weeks after the previous one. The 25% of patients whose epilepsy is drug-resistant have to live with the threat of a sudden seizure at any time.

For many years, neuroscientists thought seizures began abruptly, just a few seconds before clinical attacks. Recent research has shown that seizures are not random events and that they develop minutes to hours before clinical onset. There are four states of brain activity: interictal (between seizures), preictal (before a seizure), ictal (during a seizure), and postictal (after a seizure). Over the last few years, significant research has demonstrated that the preictal brain state exists and can be classified accurately.

Dataset

The latest studies show that seizures can be forecast 24 hours in advance and, in some patients, up to three days prior. In this work, we will not be so ambitious. Instead, we will try to predict the risk of an oncoming seizure from 10-minute intervals of EEG data. We will use a dataset from the American Epilepsy Society Seizure Prediction Challenge on Kaggle, which contains EEG data from the NeuroVista seizure advisory system implant.

Each epilepsy patient has their own specific pre-seizure signatures, so, to save time, we will use records of brain electrical activity from only one patient in the dataset. The goal of our experiment is to distinguish between 10-minute data clips covering the hour before a seizure (preictal clips) and 10-minute EEG clips with no oncoming seizure (interictal clips).

Load the data

Let's start by loading the EEG data:

For our patient, EEG data was recorded with 15 channels (15 electrodes) at a sampling rate of 5000 Hz. The sampling rate determines how many samples represent 1 second of EEG data in each channel. Multiplying it by the measurement time per clip (~600 seconds in our example) gives the length of each time series: around 3,000,000 samples per channel.
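
The arithmetic above can be checked in a few lines. This is only a sketch using the figures quoted in the text; the constant names are illustrative, and the 600-second clip length is the approximate value given above.

```python
# Figures quoted in the text; the names are illustrative, not from the dataset.
SAMPLING_RATE_HZ = 5000   # samples per second, per channel
CLIP_SECONDS = 600        # approximate clip length (10 minutes)
N_CHANNELS = 15           # electrodes for this patient

# Length of one channel's time series within a single clip.
samples_per_channel = SAMPLING_RATE_HZ * CLIP_SECONDS

# Total number of raw values in one clip across all channels.
values_per_clip = samples_per_channel * N_CHANNELS

print(samples_per_channel)  # 3000000
print(values_per_clip)      # 45000000
```

Tens of millions of values per clip is the reason the raw series needs to be shortened before it reaches a recurrent network.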

Features

There are various signal processing methods for engineering features from raw EEG data. The Kaggle competition winners used spectral band power, the signal correlation between EEG channels, the eigenvalues of the correlation matrix, Shannon entropy, and many more.

In this blog, we want to keep it simple: we won't dive into complex signal processing theory or neuroscience interpretation. Instead, we will only perform a 1D convolution on the raw measurements.

For our LSTM network, we want to use TensorFlow, which requires input as a tensor with the shape (N, seq_len, n_channels) where:

- N is the number of data points (here, EEG clips).

- seq_len is the sequence length for time-series.

- n_channels is the number of channels.
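
To make the layout concrete, here is a minimal pure-Python sketch that rearranges clips into that (N, seq_len, n_channels) order. It assumes each clip starts out as a list of channels, each holding a list of samples; that starting layout and the helper names `to_model_input` and `shape_of` are illustrative assumptions. In practice, a NumPy array would hold this data.

```python
def to_model_input(clips):
    """Turn clips stored as [channel][time] into [clip][time][channel]."""
    # zip(*clip) walks all channels in lockstep, yielding one tuple per time step.
    return [[list(step) for step in zip(*clip)] for clip in clips]


def shape_of(nested):
    """Return the shape of a regular, non-empty nested list."""
    shape = []
    while isinstance(nested, list):
        shape.append(len(nested))
        nested = nested[0]
    return tuple(shape)


# Toy data: 2 clips, 3 channels, 4 time steps; the value encodes channel*10 + time.
clips = [[[c * 10 + t for t in range(4)] for c in range(3)] for _ in range(2)]
X = to_model_input(clips)

print(shape_of(X))  # (2, 4, 3)
print(X[0][1])      # [1, 11, 21]
```

Each innermost list holds the readings of all channels at one time step, which is what a recurrent layer consumes step by step.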

The problem is that the raw data sequence is far too long for an LSTM network. In our example, each series has approximately 3,000,000 points in time, and typical LSTM cells cannot be trained on a series of that length. Therefore, we use 1D convolutions with averages to reduce the number of points. This yields a shorter time series that we can use as input to an LSTM network. Our approach is based on the code available on this GitHub repository.
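
As a rough illustration of the idea (not the repository's code), averaging over a sliding window is a 1D convolution with a uniform kernel, and a stride larger than 1 shortens the output. The names `conv1d_mean` and `downsample_clip`, and the kernel sizes and strides below, are illustrative assumptions rather than the values used for the real data.

```python
def conv1d_mean(series, kernel_size, stride):
    """1D convolution with a uniform (averaging) kernel and no padding."""
    if kernel_size <= 0 or stride <= 0:
        raise ValueError("kernel_size and stride must be positive")
    out = []
    # Slide the window in steps of `stride`; stop when it no longer fits.
    for start in range(0, len(series) - kernel_size + 1, stride):
        window = series[start:start + kernel_size]
        out.append(sum(window) / kernel_size)
    return out


def downsample_clip(clip, kernel_size, stride):
    """Apply the same averaging to every channel of a [channel][time] clip."""
    return [conv1d_mean(channel, kernel_size, stride) for channel in clip]


series = [float(i) for i in range(10)]
print(conv1d_mean(series, kernel_size=5, stride=5))  # [2.0, 7.0]
print(conv1d_mean(series, kernel_size=4, stride=2))  # [1.5, 3.5, 5.5, 7.5]
```

With no padding, the output length is `(len(series) - kernel_size) // stride + 1`, so the kernel size and stride together control how much shorter the series becomes.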

After averaging, our data shape is (612, 2840, 15): 612 clips, each with 2,840 time steps across 15 channels. That is a sequence length a typical LSTM network can handle.

It is always a good idea to normalize the data:
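
One common way to do this is z-scoring: subtract the mean and divide by the standard deviation. The sketch below is an assumption about the kind of normalization meant here, shown for a single channel with hypothetical helper names. The statistics are fitted on training values and then reused, so validation or streaming data is scaled the same way.

```python
from statistics import fmean, pstdev


def fit_stats(train_values):
    """Return (mean, population standard deviation) of the training values."""
    return fmean(train_values), pstdev(train_values)


def zscore(values, mean, std, eps=1e-8):
    """Shift and scale values with the given statistics; eps guards against std == 0."""
    return [(v - mean) / (std + eps) for v in values]


train = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
mean, std = fit_stats(train)

# Reuse the training statistics for any later data from the same channel.
train_scaled = zscore(train, mean, std)
new_scaled = zscore([5.0, 9.0], mean, std)
```

After scaling, the training values have a mean of roughly 0 and a standard deviation of roughly 1, which tends to make gradient-based training better behaved.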

Build the model

Finally, we are ready to build our RNN model for predictions:

Train the model

Now let's train our model with Deephaven tables. This requires a few additional functions:

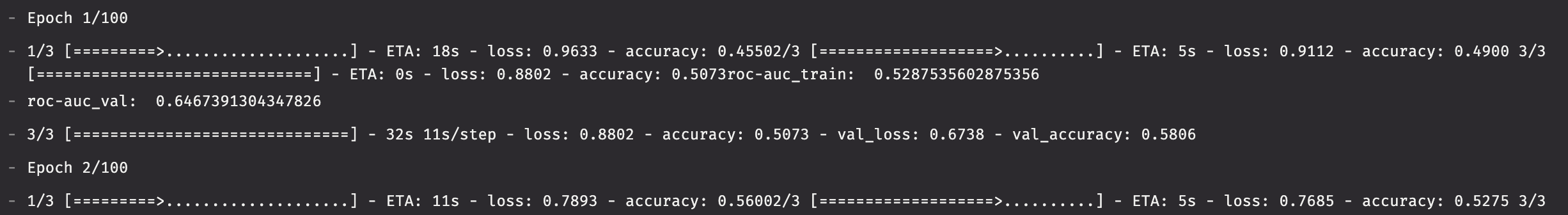

To evaluate our model, we calculated the area under the ROC curve (AUC), the same metric used to judge submissions in the Kaggle Seizure Prediction Challenge. On our validation dataset, we got AUC = 0.8. Ideally, a good classifier would score closer to 1, but our model is just a toy example built with limited domain knowledge in neuroscience and without complex signal processing or feature engineering.
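
For intuition about the metric, AUC equals the probability that a randomly chosen preictal clip receives a higher score than a randomly chosen interictal clip, with ties counted as half. A minimal sketch of that definition follows; the function name and the toy labels and scores are made up for illustration and are not the results reported above.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(pos) * len(neg))


# Hypothetical labels (1 = preictal, 0 = interictal) and model scores.
labels = [0, 0, 1, 1, 0, 1]
scores = [0.1, 0.4, 0.35, 0.8, 0.2, 0.9]
print(round(roc_auc(labels, scores), 3))  # 0.889
```

A score of 0.5 corresponds to random ranking and 1.0 to a perfect one. The pairwise loop is quadratic in the number of clips, so it suits small validation sets; library implementations use sorting instead.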

Real-time predictions

As mentioned before, one of Deephaven's biggest advantages is the ability to deal with numerous real-time data feeds. To simulate the real-time feed, we can use a TableReplayer:

Though we trained our model on a static dataset, Deephaven can use a streaming data source to perform real-time classification: