How to use Deephaven in a local development environment

Set up your IDE

Note: Example projects that depend on Deephaven are available for download for the Gradle and Maven build tools.

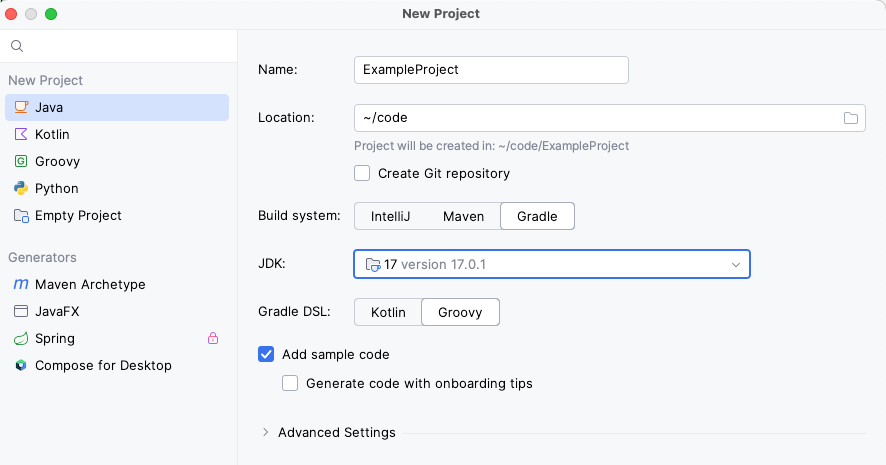

This example will use IntelliJ IDEA together with the Gradle build tool and show you how to create a project that uses Deephaven libraries.

Your new project will include a build.gradle file that looks something like this:

To add Deephaven libraries to your project, add the following to your dependencies:

This adds fundamental Core+ classes like Table and Database to your project, as well as tools to read CSV and Parquet files. Mockito will be used to mock the Database in unit tests.

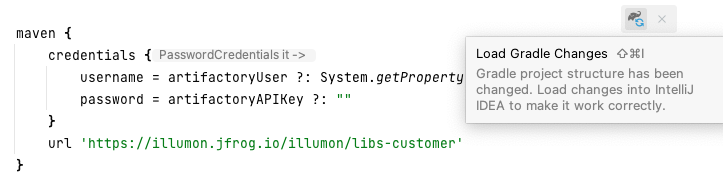

Next, add the following Maven repository with your credentials. artifactoryUser and artifactoryAPIKey must be set in gradle.properties:

The Core+ Database module also requires the Confluent Maven repository:

Deephaven logs JVM internal stats that require the following JVM argument to allow access: --add-exports=java.management/sun.management=ALL-UNNAMED. Add this to your test task.
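To confirm that the argument actually reached the JVM running your tests, a small check like the following can help. This is a minimal sketch using only the standard `java.lang.management` API. The class name `JvmArgCheck` and its method are hypothetical and are not part of Deephaven.

```java
import java.lang.management.ManagementFactory;
import java.util.List;

// Hypothetical helper (not a Deephaven class): reports whether the JVM was
// started with the add-exports option described above.
final class JvmArgCheck {
    static final String OPTION = "--add-exports";
    static final String VALUE = "java.management/sun.management=ALL-UNNAMED";

    // Accepts both the "--add-exports=<value>" form and the two-argument
    // "--add-exports <value>" form.
    static boolean hasRequiredExport(List<String> jvmArgs) {
        for (int i = 0; i < jvmArgs.size(); i++) {
            String arg = jvmArgs.get(i);
            if (arg.equals(OPTION + "=" + VALUE)) {
                return true;
            }
            if (arg.equals(OPTION) && i + 1 < jvmArgs.size()
                    && jvmArgs.get(i + 1).equals(VALUE)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        // Arguments passed to the JVM itself, as reported by the runtime MXBean.
        List<String> jvmArgs = ManagementFactory.getRuntimeMXBean().getInputArguments();
        System.out.println(hasRequiredExport(jvmArgs)
                ? "add-exports argument present"
                : "add-exports argument missing: add it to the test task's JVM arguments");
    }
}
```

Calling `hasRequiredExport` from a test's setup method is one way to fail fast with a clear message when the test task is misconfigured.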

Your build.gradle file will now look something like:

Refresh the project, and Gradle will download the specified modules.

Local unit testing

It may be helpful to have some local test data you can use to test your query's correctness.

The following simple query calculates the average and mid prices of stocks for a given day:

It uses helper methods which have been written in Java so that they can be unit tested:
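As an illustration of the kind of helper that is easy to unit test, here is a minimal sketch in plain Java. The class name `PriceMath` and both method signatures are assumptions made for this example; they are not the helpers from the original project. The sketch assumes the mid price is the midpoint of bid and ask, and that the average is a simple arithmetic mean.

```java
// Hypothetical helper class (names and signatures are illustrative only).
final class PriceMath {
    private PriceMath() {
        // static helpers only
    }

    // Midpoint between the bid and ask prices.
    static double mid(double bid, double ask) {
        return (bid + ask) / 2.0;
    }

    // Arithmetic mean of the given prices; NaN when no prices are supplied.
    static double average(double... prices) {
        if (prices.length == 0) {
            return Double.NaN;
        }
        double sum = 0.0;
        for (double price : prices) {
            sum += price;
        }
        return sum / prices.length;
    }
}
```

Because these are pure static methods with no dependency on a table or database, they can be tested without any Deephaven setup. Only the query that calls them needs an engine context and test data.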

We need some test data. Ten rows from the LearnDeephaven.StockTrades dataset have been saved as src/test/resources/StockTrades.csv, and ten rows from the LearnDeephaven.StockQuotes dataset have been saved as src/test/resources/StockQuotes.parquet.
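Before relying on a CSV fixture, it can be worth checking that it contains the number of rows you expect. The following is a minimal sketch in plain Java (11 or later). The class name `FixtureCheck` is hypothetical, and the method assumes one record per line with a single header row, so it would miscount files that contain quoted fields with embedded newlines.

```java
// Hypothetical helper (not part of Deephaven): counts the data rows in CSV
// text, assuming the first line is a header and each record is one line.
final class FixtureCheck {
    private FixtureCheck() {
        // static helpers only
    }

    static long countDataRows(String csvText) {
        return csvText.lines()
                .skip(1)                          // skip the header row
                .filter(line -> !line.isBlank())  // ignore trailing blank lines
                .count();
    }
}
```

For the fixture described above, you could pass in the result of `Files.readString(Path.of("src/test/resources/StockTrades.csv"))` and assert that the count is ten.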

- The first step is to open an ExecutionContext so that we can use table operations like where and updateView. It is best practice to close the ExecutionContext after you are done with it. The @BeforeAll and @AfterAll annotations are used so that we only need to do this once for all tests.

- Mocking the Database to read test data in the form of CSVs or Parquet files is easier than creating a real Database instance. This example uses Mockito:

- Use the methods described in the Core guide "Extract table values" to test your queries:

The full test class:

Connect to a remote DB

Client applications can connect to Deephaven server installations to run queries on a remote database. See the Core+ Java Client for more information.