Excessive initialization time

Excessive initialization time occurs when a Deephaven query takes unusually long to start up, potentially exceeding its configured initialization timeout. This problem can manifest as stalled queries, timeouts, or extreme delays before initial results appear, impacting both development workflows and production systems.

Diagnosing initialization bottlenecks is essential for maintaining responsive applications and efficient data processing pipelines. Long initialization times can frustrate users, waste computational resources, and potentially indicate serious underlying performance issues in your queries or data sources.

The approach to troubleshooting depends on whether the query eventually completes initialization or times out. If initialization completes, you can use the Performance Overview to identify problematic operations. Queries that time out require alternative diagnostic techniques, although the performance optimization steps follow the same pattern detailed in troubleshooting query performance.

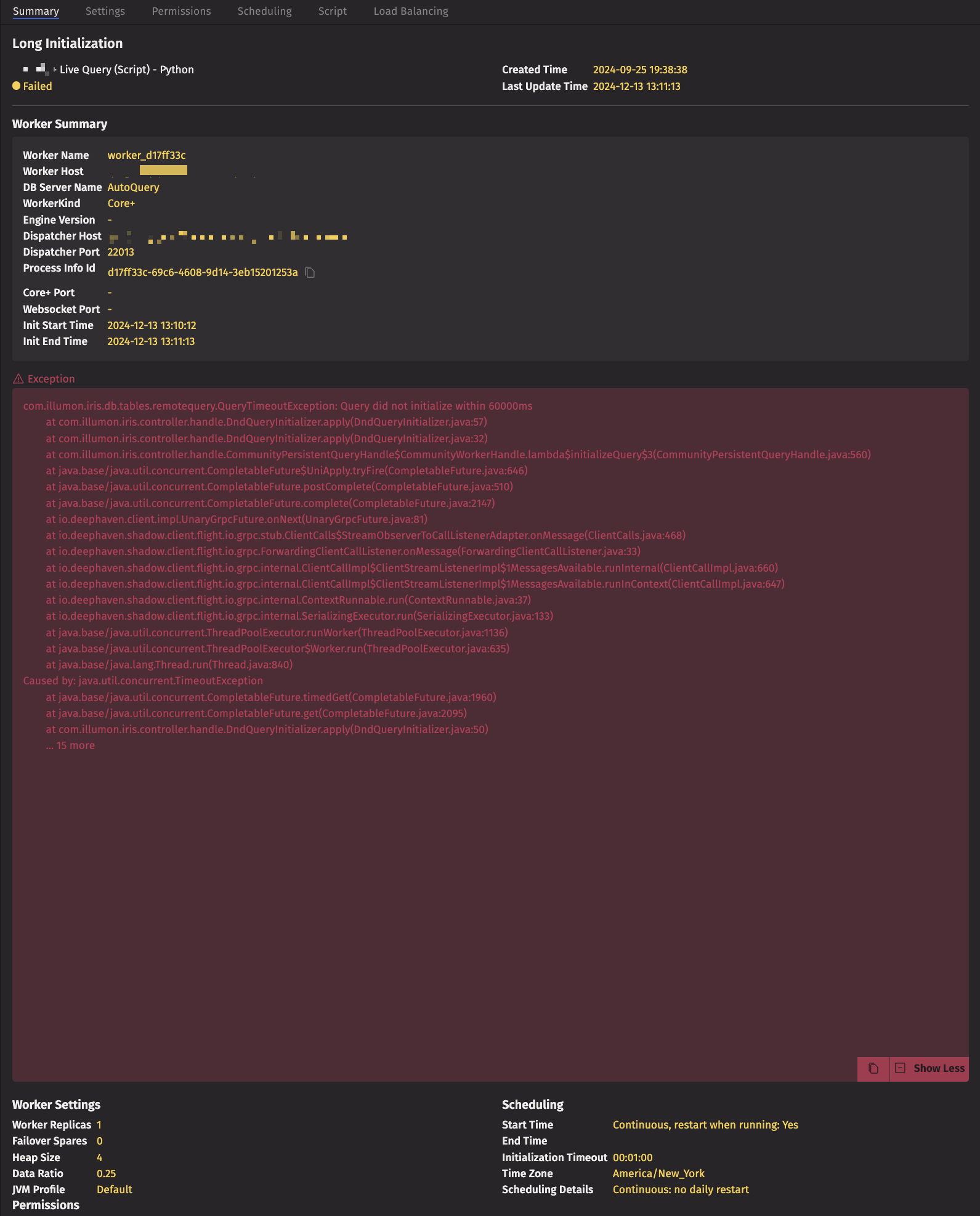

For example, the following screenshot from the Query Monitor shows a query that failed to initialize with a timeout:

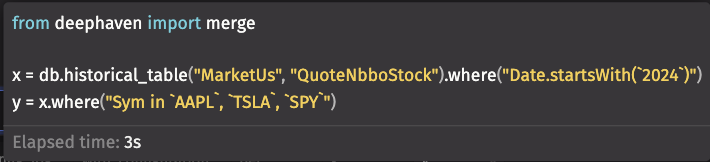

This query's script was the following:

From the error message on the Query Monitor, it is unclear what operation is causing the timeout. Even though this script is simple, there are several possibilities:

- Retrieving metadata about the table.

- The `where` filter on `Sym`.

- The aggregation to count the number of rows for each `Sym` and `Date`.

- The time for any single operation does not overwhelm the others, but the combination takes longer than expected.

The first step in diagnosing a Persistent Query timeout is to view the ProcessEventLog for that query. In this case, we can retrieve it as follows:

Note

The authenticated user is the user that authenticated to the system to initiate the operation. The effective user is the user that Deephaven enforces permission checks for.

A simple example is the "Operate as" login, where the user who logs in (via password, SAML, etc.) is the Authenticated User, and the user they are operating as is the Effective User.

By scrolling the log, you can find a full thread dump that the controller initiated before terminating the query. Searching for the DeephavenApiServer-Scheduler-Serial thread that is responsible for executing the query reveals the following stack trace (line numbers added for clarity):

- Lines 2-9 indicate that the process is reading data using Java I/O primitives.

- Lines 10-30 are the Deephaven I/O layer.

- Lines 30-44 indicate that the process is performing an aggregation.
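When the log contains a long thread dump, it can help to isolate one thread's frames programmatically. The following is a minimal sketch, assuming the dump text has already been copied into a string and follows the standard HotSpot layout (a quoted thread name on a header line, followed by indented `at ...` frames). The sample dump, the `com.example` frames, and the `stack_for_thread` helper are hypothetical and are not output from a real Deephaven worker.

```python
import re

# Hypothetical excerpt in the standard HotSpot thread dump layout; the
# frames below are placeholders, not output from a real Deephaven worker.
SAMPLE_DUMP = '''\
"main" #1 prio=5 os_prio=0 tid=0x01 nid=0x1 waiting on condition
   java.lang.Thread.State: WAITING (parking)
\tat java.lang.Object.wait(Native Method)

"DeephavenApiServer-Scheduler-Serial-1" #42 daemon prio=5 os_prio=0 tid=0x02 nid=0x2 runnable
   java.lang.Thread.State: RUNNABLE
\tat java.io.FileInputStream.readBytes(Native Method)
\tat com.example.io.ReadLayer.fill(ReadLayer.java:10)
\tat com.example.agg.CountOperator.add(CountOperator.java:20)
'''


def stack_for_thread(dump: str, name_prefix: str) -> list[str]:
    """Return the frames of the first thread whose name starts with name_prefix."""
    header = re.compile(r'^"(?P<name>[^"]+)"')
    frames: list[str] = []
    in_target = False
    for line in dump.splitlines():
        match = header.match(line)
        if match:
            if in_target:
                break  # reached the next thread's header, so stop collecting
            in_target = match.group("name").startswith(name_prefix)
        elif in_target and line.strip().startswith("at "):
            frames.append(line.strip()[3:])  # drop the leading "at "
    return frames


# Print the frames from most recent call (top) to oldest caller (bottom).
for frame in stack_for_thread(SAMPLE_DUMP, "DeephavenApiServer-Scheduler-Serial"):
    print(frame)
```

Frames near the top of the output are the most recent calls, so reading downward shows which higher-level operation triggered the low-level work.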

The aggregation frames in this stack trace point towards the count as the likely culprit. This is a piece of evidence, but it is not definitive: the where filter could have taken most of the time, and the aggregation could simply be starting to execute. In some cases, it is reasonable to increase the execution timeout, because the query may be slow but can still complete when given sufficient time. In this case, if the timeout is increased, we can run Performance Overview on the query:

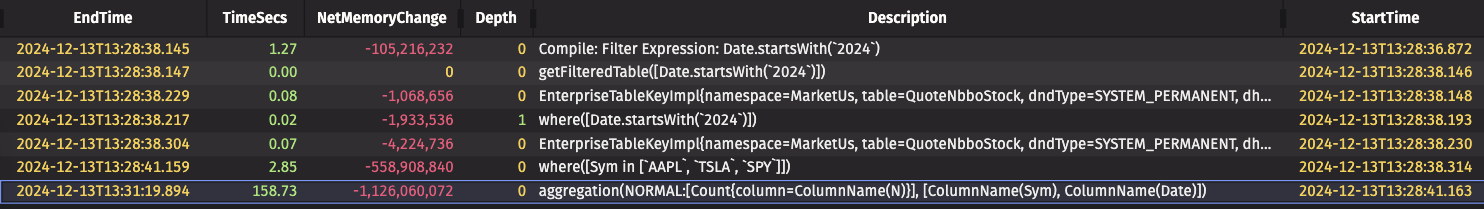

This yields the following QueryOperationPerformanceCoreV2 table:

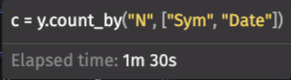

If you cannot wait for the query to finish initializing, one simple way to find the slow operation is to add print statements before and after each operation and then monitor the ProcessEventLog. Another way to narrow down the slow part of the query is to run snippets of it one at a time in a Code Studio and compare the elapsed time reported for each snippet. In this example, the elapsed times show that the count accounts for the bulk of the execution time.
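The print-statement approach can be sketched as a small timing helper. This is an illustration only: `timed` is a hypothetical helper, and the list and dictionary steps are stand-ins for the real table operations, which are not reproduced here. In an actual query, each `with` block would wrap one operation from the script.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(label, sink=print):
    """Emit a message before and after the wrapped step, with elapsed seconds."""
    sink(f"START {label}")
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed = time.perf_counter() - start
        sink(f"END {label} elapsed={elapsed:.3f}s")


# Stand-in steps (plain Python data, not Deephaven tables).
with timed("load"):
    rows = [("AAPL", "2024-01-02"), ("MSFT", "2024-01-02"), ("AAPL", "2024-01-03")]

with timed("where"):
    filtered = [row for row in rows if row[0] == "AAPL"]

with timed("count"):
    counts = {}
    for key in filtered:
        counts[key] = counts.get(key, 0) + 1
```

Because the START message is emitted before the step runs, a query that times out still leaves a START line with no matching END line, which identifies the operation that was in progress.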

If you have administrative access to the server, you can also trigger a thread dump by sending Unix signal 3 (SIGQUIT) to the worker process. Taking several dumps over time and comparing the stack of the DeephavenApiServer-Scheduler-Serial-1 thread shows what kind of operation is currently executing. Similarly, you can attach a profiler or debugger.
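Sending the signal is equivalent to running `kill -3 <pid>` from a shell. A minimal Python sketch follows, assuming a Unix host and that you already know the worker's process ID; `request_thread_dump` is a hypothetical helper, and where the resulting dump is written depends on how the worker's output is captured on your installation.

```python
import os
import signal


def request_thread_dump(pid: int, kill=os.kill) -> None:
    """Send SIGQUIT to the given process.

    A HotSpot JVM responds to SIGQUIT by printing a thread dump and
    continuing to run. A process that does not handle the signal is
    terminated, so verify that the PID belongs to the worker JVM first.
    """
    kill(pid, signal.SIGQUIT)


# Usage (the PID is a placeholder, so the call is left commented out):
# request_thread_dump(12345)
```

The `kill` parameter exists only so the helper can be exercised without signalling a real process.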