S3 Table Storage

Amazon S3 (Simple Storage Service) is a scalable object storage service provided by AWS. It is commonly used for storing and retrieving large amounts of data, making it a suitable backend for Deephaven's table storage. This document outlines the steps required to configure and use S3 as a table storage backend for Deephaven, leveraging the Goofys tool to mount S3 buckets as a local filesystem on an AWS EC2 instance.

Warning

S3 performance may be significantly slower than other table storage backends. See the Performance section below for more information.

Configuration

To use S3 as a table storage backend for Deephaven, mount the S3 bucket with Goofys. The steps below install and configure Goofys on an AWS EC2 instance so that the bucket is mounted and accessible to Deephaven.

Goofys

Goofys mounts an S3 bucket as a user-space (FUSE) filesystem on an AWS EC2 host. Follow the instructions below to install Goofys on your EC2 Linux host.

Install FUSE:

Confirm that the FUSE libraries are installed:

Download the Goofys binary:

Confirm that the Goofys binary is installed:
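The four steps above can be sketched as one shell script. This is a minimal sketch under stated assumptions, not the exact commands from the original instructions: it assumes a yum-based distribution such as Amazon Linux, the release URL published by the Goofys GitHub project, and `/usr/local/bin` as the install location. The `run` helper is a hypothetical wrapper that only prints each command unless `DRY_RUN=0` is set.

```shell
# Print commands by default; set DRY_RUN=0 to actually execute them.
DRY_RUN="${DRY_RUN:-1}"
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# ASSUMPTIONS: download URL and install path are illustrative choices.
GOOFYS_URL="https://github.com/kahing/goofys/releases/latest/download/goofys"
GOOFYS_BIN="/usr/local/bin/goofys"

# Install FUSE (assumes a yum-based distribution).
run sudo yum install -y fuse

# Confirm that the FUSE package is installed.
run rpm -q fuse

# Download the Goofys binary and make it executable.
run sudo curl -L -o "$GOOFYS_BIN" "$GOOFYS_URL"
run sudo chmod +x "$GOOFYS_BIN"

# Confirm that the Goofys binary is in place.
run ls -l "$GOOFYS_BIN"
```

Review the printed commands first, then rerun with `DRY_RUN=0` once they match your host's package manager and paths.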

Goofys mounts the bucket as a specific user and group. These cannot be changed after mounting, because Goofys does not support POSIX file mode, owner, or group operations. To find the UID and GID for the dbquery user, use the following command:

This will output something similar to:

The UID is 9001 and the GID is 9003.
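As a sketch of the lookup, the standard `id` utility can report the numeric UID and GID separately, which is convenient for reuse in later commands. The variable names are hypothetical, and the fallback to the current user exists only so the snippet also runs on a host that has no dbquery account.

```shell
# ASSUMPTION: the account is named dbquery, as in the text above.
TARGET_USER="${TARGET_USER:-dbquery}"

# Fall back to the current user if the account does not exist on this host.
if ! id "$TARGET_USER" >/dev/null 2>&1; then
  TARGET_USER="$(id -un)"
fi

# Numeric user ID and primary group ID of the chosen account.
MOUNT_UID="$(id -u "$TARGET_USER")"
MOUNT_GID="$(id -g "$TARGET_USER")"

echo "user=$TARGET_USER uid=$MOUNT_UID gid=$MOUNT_GID"
```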

To mount the S3 store as a normal user for testing, create a directory that will be backed by S3 and mount it as the dbquery user:
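A test mount along these lines might look like the following sketch. The bucket name and mount point are hypothetical placeholders, the UID and GID are the example values shown above, and the sketch assumes that AWS credentials are already available to the dbquery user (for example, through an EC2 instance role). The `run` helper is a hypothetical wrapper that prints commands unless `DRY_RUN=0` is set.

```shell
# Print commands by default; set DRY_RUN=0 to actually execute them.
DRY_RUN="${DRY_RUN:-1}"
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

BUCKET="my-example-bucket"   # hypothetical bucket name
MOUNT_POINT="/tmp/s3-test"   # hypothetical test directory
MOUNT_UID=9001               # example UID from the text above
MOUNT_GID=9003               # example GID from the text above

# Create the directory that will be backed by S3, as the dbquery user.
run sudo -u dbquery mkdir -p "$MOUNT_POINT"

# Mount the bucket; --uid and --gid set the owner reported for all files.
run sudo -u dbquery goofys --uid "$MOUNT_UID" --gid "$MOUNT_GID" "$BUCKET" "$MOUNT_POINT"
```

A FUSE mount created this way can normally be removed with `fusermount -u` followed by the mount point.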

To create a permanent mount, see the Goofys Documentation for details on adding the mount to /etc/fstab.
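For orientation, the sketch below prints an `/etc/fstab` line in the `goofys#bucket` form used by the Goofys README. The `fstab_line` helper, the bucket name, and the mount point are hypothetical, and passing `--uid` and `--gid` as mount options is an assumption based on how the README passes other `--` options; check the Goofys documentation before relying on it.

```shell
# Build an fstab entry for a Goofys mount.
# $1 = bucket, $2 = mount point, $3 = UID, $4 = GID
fstab_line() {
  printf 'goofys#%s %s fuse _netdev,allow_other,--uid=%s,--gid=%s 0 0\n' \
    "$1" "$2" "$3" "$4"
}

# Hypothetical bucket and mount point; UID and GID are the example values.
fstab_line my-example-bucket /mnt/s3-tables 9001 9003
```

The script only prints the line; adding it to `/etc/fstab` is left as a manual step so that the entry can be reviewed first.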

Deephaven configuration

To use the Goofys mount as a table storage backend, see the Filesystem data layout page for details on linking the storage into the Database root directory.
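The general idea of linking a mount into another directory tree can be illustrated with a symbolic link. This is only a sketch, assuming that a symlink is the linking mechanism: every path here is a stand-in created under a temporary directory, and the real link location is defined by the Filesystem data layout page, not by this example.

```shell
# Stand-in directories under a temp dir so the sketch is safe to run anywhere.
WORK="$(mktemp -d)"
S3_MOUNT="$WORK/s3-mount"        # stands in for the Goofys mount point
DB_ROOT="$WORK/db-root"          # stands in for the Database root directory
LINK_NAME="$DB_ROOT/s3-storage"  # hypothetical link name

mkdir -p "$S3_MOUNT" "$DB_ROOT"

# Link the mounted storage into the database directory tree.
ln -s "$S3_MOUNT" "$LINK_NAME"

# Show where the link points.
ls -l "$LINK_NAME"
```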

Performance

Tests were conducted using Goofys to mount an S3 store and expose it as a user-space filesystem on an AWS EC2 host, comparing it to a similar NFS-mounted store. As expected, queries run on S3/Goofys data took longer than their NFS counterparts.

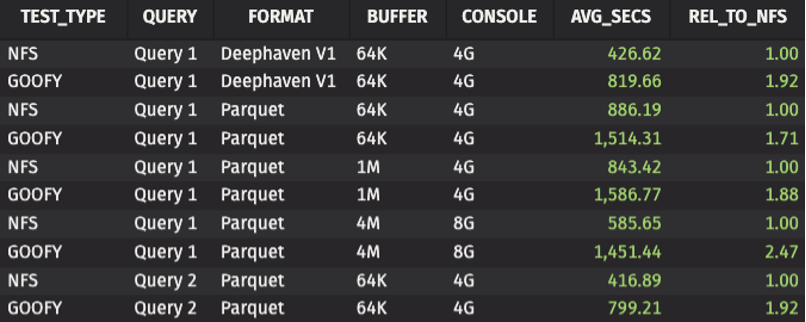

The chart below summarizes the performance of queries run on data exposed via Goofys, relative to similar queries on data exposed via NFS. The REL_TO_NFS column is a multiplier that shows how Goofys performance compares to its NFS counterpart, with the NFS value always being 1. For instance, the Goofys query on Deephaven V1 format data has a relative value of 1.92, indicating it took nearly twice as long. Generally, queries on Goofys ran close to twice as long as those on NFS. Increasing the data buffer size to 4M and console to 8G widened the disparity, with Goofys queries running approximately 2.5 times longer than NFS.
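Read as a formula, the multiplier is the ratio of elapsed query times. The symbols below are notation introduced here for illustration, not taken from the test report:

```latex
\[
\text{REL\_TO\_NFS} = \frac{t_{\text{Goofys}}}{t_{\text{NFS}}},
\qquad
\text{REL\_TO\_NFS} = 1.92 \;\Rightarrow\; t_{\text{Goofys}} = 1.92\, t_{\text{NFS}}
\]
```

A value of 1.92 therefore means the Goofys query took 92% longer than its NFS counterpart, and a value of 2.5 means it took 150% longer.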

The queries used in the test follow.

Note

These queries are from an older version of Deephaven and may not run in the current version without modification.