Deephaven Community Core Quickstart

Deephaven Community Core can be installed with Docker or as the production application, which runs natively from a release tarball. If you are familiar with Docker or already have it installed, the single-line Docker command is an easy way to get started with Deephaven. If you prefer not to use Docker, use the production application.

1. Install and Launch Deephaven

With Docker

Install and launch Deephaven via Docker with a one-line command:

docker run --rm --name deephaven -p 10000:10000 --env START_OPTS="-Dauthentication.psk=PASSWORD" ghcr.io/deephaven/server-slim:latest

Caution

Replace "PASSWORD" with a more secure passkey to keep your session safe.

For additional configuration options, see the install guide for Docker.

With Native Deephaven

Download the latest Deephaven server-jetty-<version>.tar from the Latest Release Assets using your browser or the command line, unpack the tar file, and start Deephaven. The commands below use version 0.33.3; substitute the version you downloaded.

wget https://github.com/deephaven/deephaven-core/releases/download/v0.33.3/server-jetty-0.33.3.tar

tar xvf server-jetty-0.33.3.tar

START_OPTS="-Dauthentication.psk=PASSWORD -Ddeephaven.console.type=groovy" server-jetty-0.33.3/bin/start

2. The Deephaven IDE

Navigate to http://localhost:10000/ and enter your password in the token field:

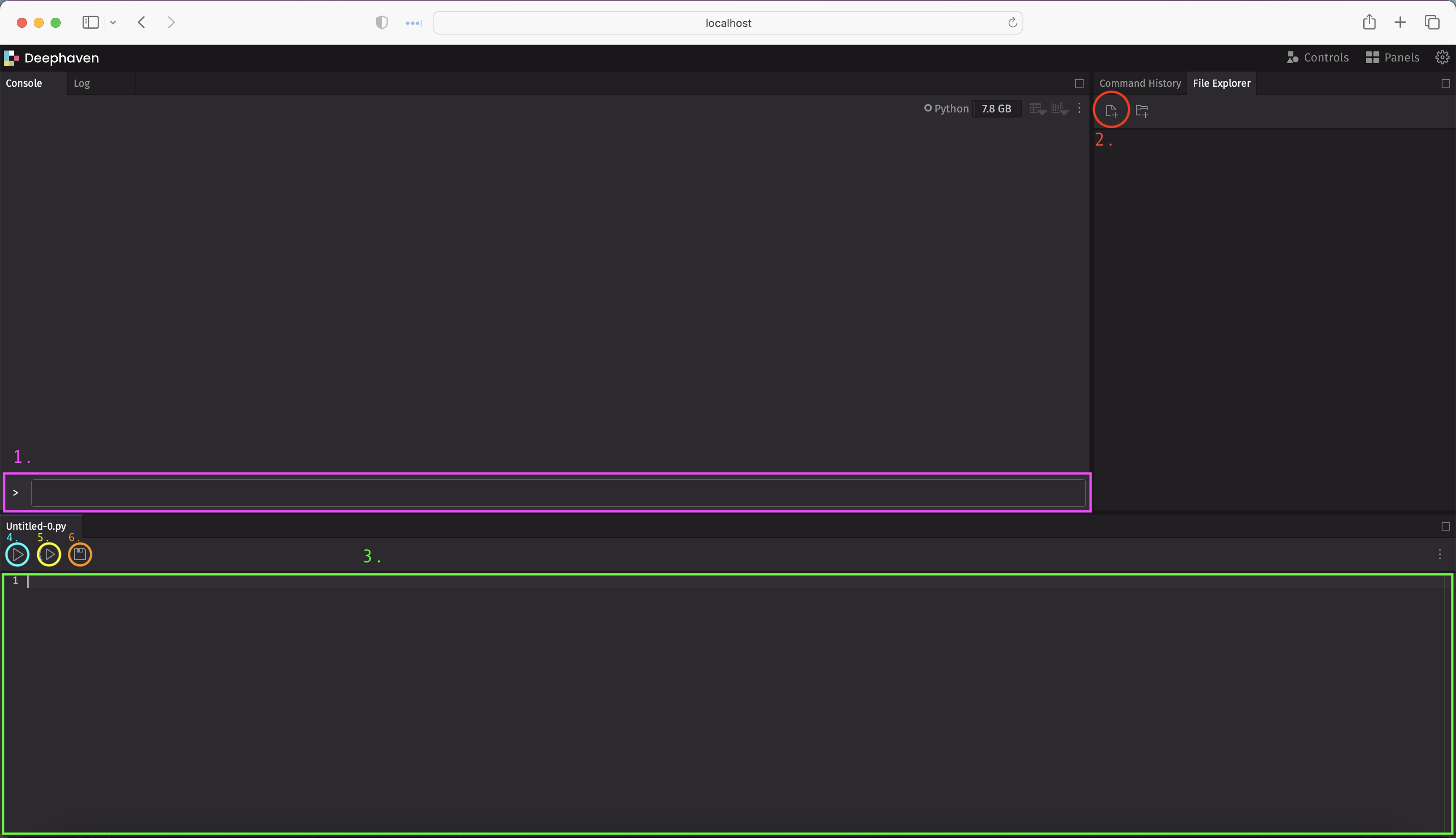

You're ready to go! The Deephaven IDE is a fully-featured scripting IDE. Here's a brief overview of some of its basic functionalities.

- Write and execute commands: use the console to write and execute Groovy and Deephaven commands.

- Create new notebooks: create new notebooks where you can write scripts.

- Edit active notebook: edit the currently active notebook.

- Run entire notebook: execute all of the code in the active notebook, from top to bottom.

- Run selected code: run only the selected code in the active notebook.

- Save your work: save your work in the active notebook. Do this often!

Now that you have Deephaven installed and open, the rest of this guide will briefly highlight some key features of using Deephaven.

For a more exhaustive introduction to Deephaven and an in-depth exploration of our design principles and APIs, check out the Crash Course series.

3. Import static and streaming data

Deephaven empowers users to wrangle static and streaming data with ease. It supports ingesting data from CSV files, Parquet files, and Kafka streams.

Load a CSV

Run the command below inside a Deephaven console to ingest a million-row CSV of crypto trades. All you need is a path or URL for the data:

import static io.deephaven.csv.CsvTools.readCsv

cryptoFromCsv = readCsv(

"https://media.githubusercontent.com/media/deephaven/examples/main/CryptoCurrencyHistory/CSV/CryptoTrades_20210922.csv"

)

The table widget now in view is highly interactive:

- Click on a table and press Ctrl + F (Windows) or ⌘F (Mac) to open quick filters.

- Click the funnel icon in the filter field to create sophisticated filters or use auto-filter UI features.

- Hover over column headers to see data types.

- Right-click headers to access more options, like adding or changing sorts.

- Click the Table Options hamburger menu at right to plot from the UI, create and manage columns, and download CSVs.

Replay Historical Data

Ingesting real-time data is one of Deephaven's superpowers, and you can learn more about supported formats from the links at the end of this guide. However, streaming pipelines can be complicated to set up and are outside the scope of this discussion. For a streaming data example, we'll use Deephaven's Table Replayer to replay historical cryptocurrency data back in real time.

The following code takes fake historical crypto trade data from a CSV file and replays it in real time based on timestamps. This is only one of multiple ways to create real-time data in just a few lines of code. Replaying historical data is a great way to test real-time algorithms before deployment into production.

import static io.deephaven.csv.CsvTools.readCsv

import io.deephaven.engine.table.impl.replay.Replayer

fakeCryptoData = readCsv(

"https://media.githubusercontent.com/media/deephaven/examples/main/CryptoCurrencyHistory/CSV/FakeCryptoTrades_20230209.csv")

start = parseInstant("2023-02-09T12:09:18 ET")

end = parseInstant("2023-02-09T12:58:09 ET")

replayer = new Replayer(start, end)

cryptoStreaming = replayer.replay(fakeCryptoData, "Timestamp")

replayer.start()

4. Working with Deephaven Tables

In Deephaven, static and dynamic data are represented as tables. New tables can be derived from parent tables, and data efficiently flows from parents to their dependents. See the concept guide on the table update model if you're interested in what's under the hood.

Deephaven represents data transformations as operations on tables. This is a familiar paradigm for data scientists using pandas, Polars, R, MATLAB, and more. Deephaven's table operations are special: they are indifferent to whether the underlying data sources are static or streaming. This means that code written for static data will work seamlessly on live data.
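
As a rough illustration of that last point, the sketch below applies one query to a static table and to a ticking one. The table names and the doubleIt closure are hypothetical names made up for this example, and it assumes the console's default imports provide emptyTable and timeTable:

```groovy
// Hypothetical sources: a 10-row static table and a table that gains a row every second.
staticSource = emptyTable(10).update("X = i")
tickingSource = timeTable("PT1s").update("X = ii")

// One query definition, written once (doubleIt is an illustrative helper, not a Deephaven API).
doubleIt = { t -> t.update("Y = 2 * X") }

staticResult = doubleIt(staticSource)    // computed once
tickingResult = doubleIt(tickingSource)  // kept up to date as new rows arrive

// isRefreshing() reports whether a table can still change.
println "static refreshing?  ${staticResult.isRefreshing()}"
println "ticking refreshing? ${tickingResult.isRefreshing()}"
```

The closure never needs to know which kind of table it receives, which is the property the rest of this guide relies on.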

There are a ton of table operations to cover, so we'll keep it short and give you the highlights.

Manipulating data

First, reverse the ticking table with reverse so that the newest data appears at the top:

cryptoStreamingRev = cryptoStreaming.reverse()

Tip

Many table operations can also be done from the UI. For example, right-click on a column header in the UI and choose Reverse Table.

Add a column with update:

cryptoStreamingRev = cryptoStreamingRev.update("TransactionTotal = Price * Size")

Use select or view to pick out particular columns:

cryptoStreamingPrices = cryptoStreamingRev.view("Instrument", "Price")

Remove columns with dropColumns:

cryptoStreamingRev = cryptoStreamingRev.dropColumns("TransactionTotal")

Next, Deephaven offers many operations for filtering tables. These include where, whereIn, whereNotIn, and more.

The following code uses where to filter for only Bitcoin transactions, and then for Bitcoin and Ethereum transactions:

btcStreaming = cryptoStreamingRev.where("Instrument == `BTC/USD`")

ethBtcStreaming = cryptoStreamingRev.where(

"Instrument in `BTC/USD`, `ETH/USD`"

)
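
The where examples above filter against literal values. When the set of allowed values lives in another table, whereIn and whereNotIn may be a better fit. The following is a minimal sketch on two small hand-built tables; the table names, column values, and prices are invented for illustration:

```groovy
// Hypothetical data: a watchlist of instruments and a handful of trades.
watchlist = newTable(stringCol("Instrument", "BTC/USD", "ETH/USD"))

trades = newTable(
    stringCol("Instrument", "BTC/USD", "DOGE/USD", "ETH/USD"),
    doubleCol("Price", 22000.0d, 0.08d, 1600.0d)
)

// Keep rows whose Instrument appears in the watchlist.
inWatchlist = trades.whereIn(watchlist, "Instrument")

// Keep rows whose Instrument does not appear in the watchlist.
notInWatchlist = trades.whereNotIn(watchlist, "Instrument")

println "in watchlist: ${inWatchlist.size()}, not in watchlist: ${notInWatchlist.size()}"
```

Because the filter values come from a table, the watchlist can be changed without editing the query string.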

Aggregating data

Deephaven's dedicated aggregations suite provides a number of table operations that enable efficient column-wise aggregations. These operations also support aggregations by group.

Use countBy to count the number of transactions from each exchange:

exchangeCount = cryptoStreaming.countBy("Count", "Exchange")

Then, get the average price for each instrument with avgBy:

instrumentAvg = cryptoStreaming.view("Instrument", "Price").avgBy("Instrument")

Find the largest transaction per instrument with maxBy:

maxTransaction = (

cryptoStreaming.update("TransactionTotal = Price * Size")

.view("Instrument", "TransactionTotal")

.maxBy("Instrument")

)

While dedicated aggregations are powerful, they only enable you to perform one aggregation at a time. However, you often need to perform multiple aggregations on the same data. For this, Deephaven provides the aggBy table operation and the io.deephaven.api.agg.Aggregation Java API.

First, use aggBy to compute the mean and standard deviation of each instrument's price, grouped by exchange:

import static io.deephaven.api.agg.Aggregation.AggAvg

import static io.deephaven.api.agg.Aggregation.AggStd

summaryPrices = cryptoStreaming.aggBy(

[AggAvg("AvgPrice=Price"), AggStd("StdPrice=Price")],

"Instrument", "Exchange"

).sort("Instrument", "Exchange")

Then, add a column containing the coefficient of variation for each instrument, measuring the relative risk of each:

summaryPrices = summaryPrices.update("PctVariation = 100 * StdPrice / AvgPrice")

Finally, create a minute-by-minute Open-High-Low-Close table using the lowerBin built-in function along with AggFirst, AggMax, AggMin, and AggLast:

import static io.deephaven.api.agg.Aggregation.AggFirst

import static io.deephaven.api.agg.Aggregation.AggMax

import static io.deephaven.api.agg.Aggregation.AggMin

import static io.deephaven.api.agg.Aggregation.AggLast

ohlcByMinute = (

cryptoStreaming.update("BinnedTimestamp = lowerBin(Timestamp, MINUTE)")

.aggBy(

[

AggFirst("Open=Price"),

AggMax("High=Price"),

AggMin("Low=Price"),

AggLast("Close=Price")

],

"Instrument", "BinnedTimestamp"

)

.sort("Instrument", "BinnedTimestamp")

)
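
To see what the binning step in that query does to a single value, the sketch below calls lowerBin directly from the console rather than inside a query string. The timestamp is an arbitrary example, and the explicit imports are included only to make the snippet self-describing:

```groovy
import static io.deephaven.time.DateTimeUtils.MINUTE
import static io.deephaven.time.DateTimeUtils.lowerBin
import static io.deephaven.time.DateTimeUtils.parseInstant

// An example trade time, 18 seconds past the minute.
tradeTime = parseInstant("2023-02-09T12:09:18 ET")

// lowerBin rounds down to the start of the enclosing bin; MINUTE is one minute in nanoseconds.
binStart = lowerBin(tradeTime, MINUTE)

println binStart  // 2023-02-09T17:09:00Z (Instants print in UTC)
```

Every trade in the same minute maps to the same BinnedTimestamp, so grouping by that column yields one OHLC row per instrument per minute.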

You may want to perform window-based calculations, compute moving or cumulative statistics, or look at pair-wise differences. Deephaven's updateBy table operation is the right tool for the job.

Compute the moving average and standard deviation of each instrument's price using RollingAvg and RollingStd:

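import java.time.Duration

import static io.deephaven.api.updateby.UpdateByOperation.RollingAvg

import static io.deephaven.api.updateby.UpdateByOperation.RollingStd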

instrumentRollingStats = cryptoStreaming.updateBy(

[

RollingAvg("Timestamp", Duration.parse("PT30s"), "AvgPrice30Sec=Price"),

RollingAvg("Timestamp", Duration.parse("PT5m"), "AvgPrice5Min=Price"),

RollingStd("Timestamp", Duration.parse("PT30s"), "StdPrice30Sec=Price"),

RollingStd("Timestamp", Duration.parse("PT5m"), "StdPrice5Min=Price")

],

"Instrument"

).reverse()

These statistics can be used to determine "extreme" instrument prices, where the instrument's price is significantly higher or lower than the average of the prices preceding it in the window:

instrumentExtremity = instrumentRollingStats.update(

"Z30Sec = (Price - AvgPrice30Sec) / StdPrice30Sec",

"Z5Min = (Price - AvgPrice5Min) / StdPrice5Min",

"Extreme30Sec = Math.abs(Z30Sec) > 1.645",

"Extreme5Min = Math.abs(Z5Min) > 1.645"

).view(

"Timestamp",

"Instrument",

"Exchange",

"Price",

"Size",

"Extreme30Sec",

"Extreme5Min"

)

There's a lot more to updateBy. See the user guide for more information.
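
The cumulative statistics and pair-wise differences mentioned earlier follow the same pattern as the rolling statistics. Here is a minimal sketch on a small generated table; the table and column names are invented for illustration, and the rolling window here is measured in rows rather than time:

```groovy
import static io.deephaven.api.updateby.UpdateByOperation.CumSum
import static io.deephaven.api.updateby.UpdateByOperation.Delta
import static io.deephaven.api.updateby.UpdateByOperation.RollingAvg

// Hypothetical source: six rows alternating between two groups.
source = emptyTable(6).update("Letter = i % 2 == 0 ? `A` : `B`", "X = i")

result = source.updateBy(
    [
        CumSum("SumX = X"),        // running total within each group
        Delta("DeltaX = X"),       // difference from the previous row in the group
        RollingAvg(2, "AvgX = X")  // average over a 2-row window ending at the current row
    ],
    "Letter"
)
```

As with the time-based example, every operation is evaluated separately for each Letter group, and the result has one row per source row.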

Combining tables

Combining datasets can often yield powerful insights. Deephaven offers two primary ways to combine tables: the merge and join operations.

The merge operation stacks tables on top of one another. This is ideal when several tables have the same schema. They can be static, ticking, or a mix of both:

combinedCrypto = merge(fakeCryptoData, cryptoStreaming).sort("Timestamp")
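
The stacking behavior is easier to see on tiny tables. In this sketch, the two input tables and their contents are made up for illustration:

```groovy
// Two hypothetical tables with the same schema (Sym: String, Qty: int).
first = newTable(stringCol("Sym", "A"), intCol("Qty", 1))
second = newTable(stringCol("Sym", "B", "C"), intCol("Qty", 2, 3))

// merge appends the rows of the second table below those of the first.
stacked = merge(first, second)

println "rows after merge: ${stacked.size()}"
```

The result has the same columns as the inputs and as many rows as both inputs combined.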

The ubiquitous join operation is used to combine tables based on columns that they have in common. Deephaven offers many variants of this operation, such as join, naturalJoin, and exactJoin.

For example, read in an older dataset containing price data on the same coins from the same exchanges. Then, use join to combine the aggregated prices to see how current prices compare to those in the past:

moreCrypto = readCsv(

"https://media.githubusercontent.com/media/deephaven/examples/main/CryptoCurrencyHistory/CSV/CryptoTrades_20210922.csv"

)

moreSummaryPrices = moreCrypto.aggBy(

[AggAvg("AvgPrice=Price"), AggStd("StdPrice=Price")],

"Instrument", "Exchange"

).sort("Instrument", "Exchange")

priceComparison = (

summaryPrices.dropColumns("PctVariation")

.renameColumns("AvgPriceFeb2023=AvgPrice", "StdPriceFeb2023=StdPrice")

.join(

moreSummaryPrices,

"Instrument, Exchange",

"AvgPriceSep2021=AvgPrice, StdPriceSep2021=StdPrice"

)

)
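
The join variants differ mainly in how they treat rows without a match. The sketch below contrasts join and naturalJoin on small hand-built tables; all names and values are hypothetical:

```groovy
// Hypothetical data: three positions, but prices for only two of them.
positions = newTable(stringCol("Sym", "A", "B", "C"), intCol("Qty", 1, 2, 3))
prices = newTable(stringCol("Sym", "A", "B"), doubleCol("Px", 10.0d, 20.0d))

// join keeps only left rows that have a match on the right.
joined = positions.join(prices, "Sym", "Px")

// naturalJoin keeps every left row; unmatched rows get a null Px.
naturalJoined = positions.naturalJoin(prices, "Sym", "Px")

println "join rows: ${joined.size()}, naturalJoin rows: ${naturalJoined.size()}"
```

Which variant fits depends on whether unmatched rows should disappear from the result or stay visible with missing values.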

In many real-time data applications, data needs to be combined based on timestamps. Traditional join operations often fail this task, as they require exact matches in both datasets. To remedy this, Deephaven provides time series joins, such as aj and raj, that can join tables on timestamps with approximate matches.

Here's an example where aj is used to find the Ethereum price at or immediately preceding each Bitcoin trade:

cryptoBtc = cryptoStreaming.where("Instrument == `BTC/USD`")

cryptoEth = cryptoStreaming.where("Instrument == `ETH/USD`")

timeSeriesJoin = cryptoBtc.view("Timestamp", "Price")

.aj(cryptoEth, "Timestamp", "EthTime = Timestamp, EthPrice = Price")

.renameColumns("BtcTime = Timestamp", "BtcPrice = Price")
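
The matching rule is easier to follow with small numbers in place of timestamps. This sketch uses integer keys and invented values; it assumes, as in the example above, that the right table is ordered by the key column:

```groovy
// Hypothetical quotes at times 1, 5, and 10, and trades at times 3, 5, and 12.
quotes = newTable(intCol("T", 1, 5, 10), doubleCol("Bid", 100.0d, 101.0d, 102.0d))
trades = newTable(intCol("T", 3, 5, 12))

// For each trade, aj picks the quote with the largest T that is <= the trade's T:
//   trade T=3  -> quote T=1  (Bid 100.0)
//   trade T=5  -> quote T=5  (Bid 101.0, an exact match counts)
//   trade T=12 -> quote T=10 (Bid 102.0)
tradesWithQuotes = trades.aj(quotes, "T", "QuoteT = T, Bid")
```

Renaming the right-hand key to QuoteT keeps both times in the result, so the gap between a trade and its matched quote stays visible.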

To learn more about our join methods, see the guides on exact and relational joins and time series and range joins.

5. Plot data via query or the UI

Deephaven has a rich plotting API that supports updating, real-time plots. It can be called programmatically:

btcData = instrumentRollingStats.where("Instrument == `BTC/USD`").reverse()

btcPlot = figure()

.plot("Bitcoin Prices", btcData, "Timestamp", "Price")

.plot("Rolling Average", btcData, "Timestamp", "AvgPrice30Sec")

.show()

Or from the web UI, without writing a query: open the Table Options menu on a table and use the Chart Builder.

6. Export data to popular formats

It's easy to export your data out of Deephaven to popular open formats.

To export a table to a CSV file, use the writeCsv method with the table and the path where you want to save the file. If you are using Docker, see managing Docker volumes for more information on how to save files to your local machine.

import static io.deephaven.csv.CsvTools.writeCsv

writeCsv(instrumentRollingStats, "/data/crypto_prices_stats.csv")

If the table is dynamically updating, Deephaven will automatically snapshot the data before writing it to the file.

Similarly, for Parquet:

import static io.deephaven.parquet.table.ParquetTools.writeTable

writeTable(instrumentRollingStats, "/data/crypto_prices_stats.parquet")
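
One way to check an export is to read the file back in. The following is a minimal round-trip sketch on a small generated table; the table name and the /tmp path are assumptions for illustration, so pick a location that is writable in your setup:

```groovy
import static io.deephaven.parquet.table.ParquetTools.readTable
import static io.deephaven.parquet.table.ParquetTools.writeTable

// Hypothetical table and output path.
smallTable = emptyTable(5).update("X = i", "Y = X * X")
outputPath = "/tmp/quickstart_roundtrip.parquet"

// Write the table, then load the file into a new table.
writeTable(smallTable, outputPath)
roundTrip = readTable(outputPath)

println "rows read back: ${roundTrip.size()}"
```

The table read back from the file is static, even if the table that was written was ticking at the time.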

7. What to do next

Now that you've imported data, created tables, and manipulated static and real-time data, we suggest heading to our user guide. There, you can explore anything from complex data aggregations to detailed performance comparisons and more.

- Import CSV files

- Read Parquet files

- Write Parquet files

- Connect to a Kafka stream

- Create and organize notebooks using the File Explorer

- Create plots without writing queries using the Chart Builder

- Filter your data without writing queries

- Perform combined aggregations

- Group data

- Joins: Exact and relational

- Joins: Time series and range joins

- Select, view, and update data

- Use filters

- Write a Groovy function