Use Numba in Deephaven queries

This guide will show you how to use Numba in your Python queries in Deephaven.

Numba is an open-source just-in-time (JIT) compiler for Python. It can be used to translate portions of Python code into optimized machine code using LLVM. The use of Numba can make your queries faster and more responsive.

What is just-in-time (JIT) compilation?

JIT compilation translates code into optimized machine code at runtime. When using Numba, you can specify which functions and blocks of code you want to be compiled at runtime. Compiling code blocks into optimized machine code adds some overhead, but subsequent function calls can be much faster. Thus, JIT can be powerful when used on functions that are complex, large, or will be used many times.

Caution

JIT does not guarantee faster code. Sometimes, code cannot be optimized well by Numba and may actually run slower.

Usage

Numba uses decorators to specify which blocks of code to JIT compile. Common Numba decorators include @jit, @vectorize, and @guvectorize. See Numba's documentation for more details.

In the following example, @jit and @vectorize are used to JIT compile function_one and function_two.

Lazy compilation

If the decorator is used without a function signature, Numba will infer the argument types at call time and generate optimized code based upon the inferred types. Numba will also compile separate specializations for different input types. The compilation is deferred to the first function call. This is called lazy compilation.

The example below uses lazy compilation on the function func. The first time the function is called, it is compiled to optimized machine code, which adds overhead and results in a longer execution time. On the second function call, Numba reuses the already compiled machine code, which results in very fast execution.

Eager compilation

If the decorator is used with a function signature, Numba will compile the function when the function is defined. This is called eager compilation.

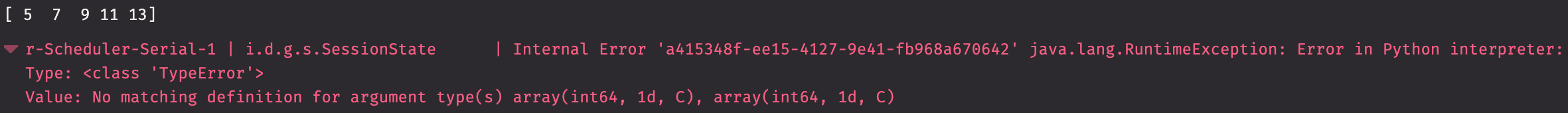

The example below uses eager compilation on the function func by specifying one or more function signatures. These function signatures denote the allowed input and output data types to and from the function. With eager compilation, the code is compiled into optimized machine code at the function definition. When the code is run the first time, it has already been compiled into optimized machine code, so it's just as fast as the second function call. If the compiled function is used with a data type not specified in the function signature, an error will occur.

Caution

Eager compilation creates functions that only support specific function signatures. If these functions are applied to arguments of mismatched types, an error will occur.

@jit vs @vectorize

We've seen the @jit and @vectorize decorators used to optimize various functions. So far, they've been used without an explanation of how they differ. So, what is different about each decorator?

@jit is a general-purpose decorator that can optimize a wide variety of different functions. @vectorize is a decorator meant to allow functions that operate on arrays to be written as if they operate on scalars.

This can be visualized with a simple example:

Functions created using @vectorize can be applied to arrays. However, attempting to do the same with a @jit function that was compiled for a scalar signature results in an error.

@vectorize vs @guvectorize

The @vectorize decorator allows Python functions taking scalar input arguments to be used as NumPy ufuncs. Creating these ufuncs with NumPy alone is a tricky process, but @vectorize makes it significantly easier. It allows users to write functions as if they're working on scalars, even though they're working on arrays.

The @guvectorize decorator takes the concepts from @vectorize one step further. It allows users to write ufuncs that work on an arbitrary number of elements of input arrays, as well as take and return arrays of differing dimensions. Functions decorated with @guvectorize don't return their result value; rather, they take it as an array input argument, which will be filled in by the function itself.

The following example shows a @guvectorize decorated function.

The arguments to the @guvectorize decorator differ from those of the @vectorize decorators used earlier in this document.

- The first part, [(int64[:], int64, int64[:])], is largely similar. It tells Numba that the function takes three inputs: an array, a scalar, and another array, all of Numba's int64 data type.

- The second part (after the comma) tells NumPy that the function takes an n-element one-dimensional array ((n)) and a scalar (()) as input, and returns an n-element one-dimensional array ((n)).

Examples

NumPy matrix

The following example uses @jit to calculate the element-wise sum of a 250x250 matrix. This is a total of 62,500 additions.

Here, the nopython=True option is used. This option produces faster code that works on native machine types and does not need the Python interpreter to execute. In older Numba releases, omitting this flag lets Numba fall back to the slower object mode in some circumstances.

This example looks at three cases:

- A regular function without JIT.

- A JIT function that needs compilation.

- A JIT function that is already compiled.

The first time the JIT-enabled function is run on a matrix of integers, it's almost ten times slower than the standard function. However, after compilation, the JIT-enabled function is almost two hundred times faster!

Using Deephaven tables

To show how the performance of @jit and @vectorize differ when applied to Deephaven tables, we will create identical functions that use these decorators. We then measure the performance of creating new columns in a 625,000 row table when using the functions.

Using @jit with functions that operate on Deephaven tables yields only a very small performance increase over the undecorated functions. The gain is too small to justify the overhead of compiling the function into optimized machine code.

Using @vectorize with functions that operate on Deephaven tables yields a large performance increase over the undecorated functions. The gain is large enough to justify the overhead of compiling the function into optimized machine code.

@guvectorize

The following example uses the @guvectorize decorator on the function g, which is used in an update operation.